by Joche Ojeda | Jan 8, 2026 | netframework

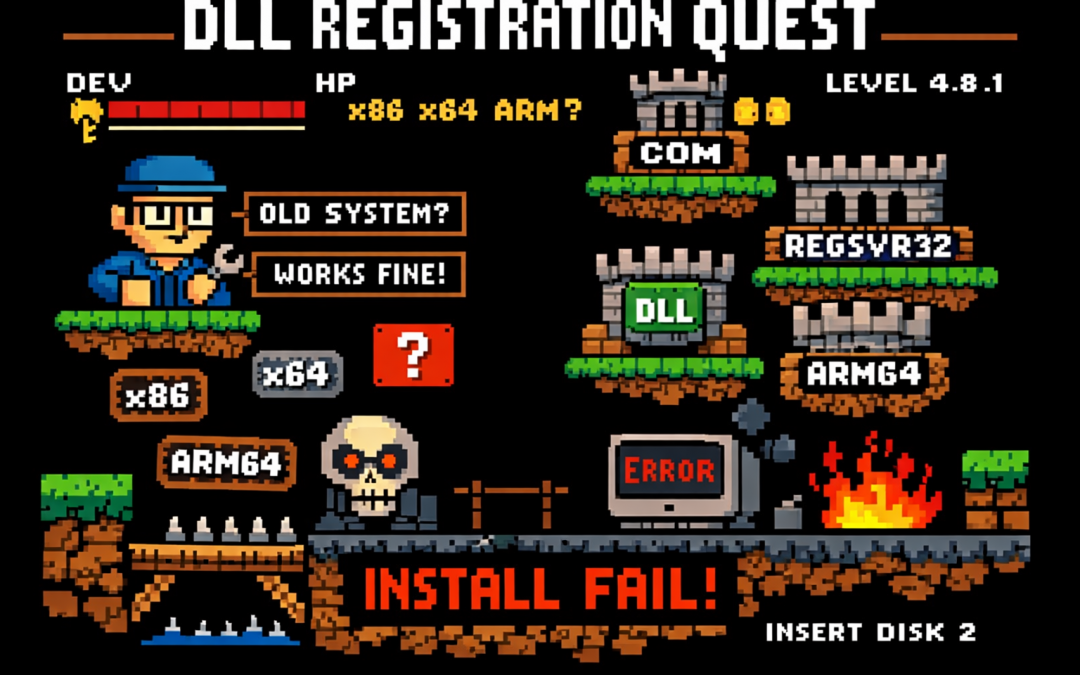

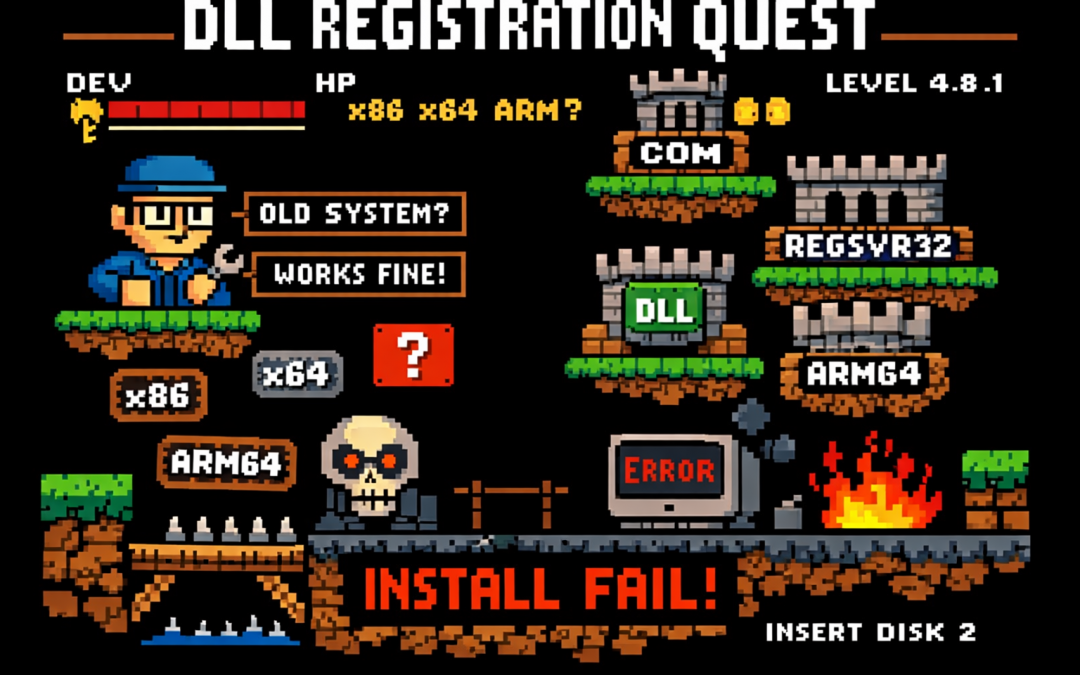

If you’ve ever worked on a traditional .NET Framework application — the kind that predates .NET Core and .NET 5+ — this story may feel painfully familiar.

I’m talking about classic .NET Framework 4.x applications (4.0, 4.5, 4.5.1, 4.5.2, 4.6, 4.6.1, 4.6.2, 4.7, 4.7.1, 4.7.2, 4.8, and the final release 4.8.1). These systems often live long, productive lives… and accumulate interesting technical debt along the way.

This particular system is written in C# and relies heavily on COM components to render video, audio, and PDF content. Under the hood, many of these components are based on technologies like DirectShow filters, ActiveX controls, or other native COM DLLs.

And that’s where the story begins.

The Setup: COM, DirectShow, and Registration

Unlike managed .NET assemblies, COM components don’t just live quietly next to your executable. They need to be registered in the system registry so Windows knows:

- What CLSID they expose

- Which DLL implements that CLSID

- Whether it’s 32-bit or 64-bit

- How it should be activated

For DirectShow-based components (very common for video/audio playback in legacy apps), registration is usually done manually during development using regsvr32.

Example:

regsvr32 MyVideoFilter.dll

To unregister:

regsvr32 /u MyVideoFilter.dll

Important detail that bites a lot of people:

- 32-bit DLLs must be registered using:

C:\Windows\SysWOW64\regsvr32.exe My32BitFilter.dll

- 64-bit DLLs must be registered using:

C:\Windows\System32\regsvr32.exe My64BitFilter.dll

Yes — the folder names are historically confusing.
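The rule above can be written down as a small helper. This is a minimal sketch, assuming 64-bit Windows; `RegSvr32Locator` and `PathFor` are hypothetical names used only for illustration. It also covers one extra case: a 32-bit process (many installers are 32-bit) that asks for `System32` is redirected to `SysWOW64` by Windows, so it has to use the `Sysnative` alias to reach the 64-bit regsvr32.

```csharp
using System;
using System.IO;

public static class RegSvr32Locator
{
    // Returns the regsvr32.exe path that matches the bitness of the DLL,
    // as seen from the process that is going to launch it.
    public static string PathFor(bool dllIs64Bit, bool callerIs64BitProcess, string windowsDir)
    {
        if (!dllIs64Bit)
        {
            // 32-bit DLL: use the 32-bit regsvr32 that lives in SysWOW64.
            return Path.Combine(windowsDir, "SysWOW64", "regsvr32.exe");
        }

        // 64-bit DLL: use the real System32. A 32-bit caller is redirected from
        // System32 to SysWOW64, so it must go through the Sysnative alias.
        string folder = callerIs64BitProcess ? "System32" : "Sysnative";
        return Path.Combine(windowsDir, folder, "regsvr32.exe");
    }
}
```

A caller would typically pass `Environment.Is64BitProcess` and `Environment.GetFolderPath(Environment.SpecialFolder.Windows)` for the last two arguments.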

Development Works… Until It Doesn’t

So here’s the usual development flow:

- You register all required COM DLLs on your development machine

- Visual Studio runs the app

- Video plays, audio works, PDFs render

- Everyone is happy

Then comes the next step.

“Let’s build an installer.”

The Installer Paradox

This is where the real battle story begins.

Your application installer (MSI, InstallShield, WiX, Inno Setup — pick your poison) now needs to:

- Copy the COM DLLs

- Register them during installation

- Unregister them during uninstall

This seems reasonable… until you test it.

The Loop From Hell

Here’s what happens in practice:

- You install your app for testing

- The installer registers its own copies of the COM DLLs

- Your development environment was using different copies (maybe newer, maybe local builds)

- Suddenly:

- Your source build stops working

- Visual Studio debugging breaks

- Another app on your machine mysteriously fails

Then you:

- Uninstall the app

- The installer unregisters the DLLs

- Now nothing works anymore

So you re-register the DLLs manually for development…

…and the cycle repeats.

The Battle Story: It Only Worked… Until It Didn’t

For a long time, this system appeared to work just fine.

Video played. Audio rendered. PDFs opened. No obvious errors.

What we didn’t realize at first was a dangerous hidden assumption:

The system only worked on machines where a previous version had already been installed.

Those older installations had left COM DLLs registered in the system — quietly, globally, and invisibly.

So when we deployed a new version without removing the old one:

- Everything looked fine

- No one suspected missing registrations

- The system passed casual testing

The illusion broke the moment we tried a clean installation.

On a fresh machine — no previous version, no leftover registry entries — the application suddenly failed:

- Components didn’t initialize

- Media rendering silently broke

- COM activation errors appeared only in Event Viewer

The installer claimed it was registering the DLLs.

In reality, it wasn’t doing it correctly — or at least not in the way the application actually needed.

That’s when we realized we were standing on years of accidental state.

Why This Happens

The core problem is simple but brutal:

COM registration is global and mutable.

There is:

- One registry

- One CLSID mapping

- One “active” DLL per COM component

Your development environment, your installed application, and your installer are all fighting over the same global state.

.NET Framework itself isn’t the villain here — it’s just sitting on top of an old Windows integration model that predates modern isolation concepts.

A New Player Enters: ARM64

Just when we thought the problem space was limited to x86 vs x64, another variable entered the scene.

One of the development machines was ARM64.

Modern Windows on ARM adds a new layer of complexity:

- ARM64 native processes

- x64 emulation

- x86 emulation on top of ARM64

From the outside, everything looks like it’s running on x64.

Under the hood, it’s not that simple.

Why This Makes COM Registration Worse

COM registration is architecture-specific:

- x86 DLLs register under one view of the registry

- x64 DLLs register under another

- ARM64 introduces yet another execution context
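To see which view a component is actually registered in, both views can be queried side by side. The following is a minimal sketch for Windows only; `ComRegistrationProbe` is a hypothetical name, and it only looks at in-process servers (`InprocServer32`), which is an assumption about how the component is registered.

```csharp
using System;
using Microsoft.Win32;

public static class ComRegistrationProbe
{
    // Builds the HKEY_CLASSES_ROOT subkey that stores the DLL path for a CLSID.
    public static string InprocServerKey(Guid clsid)
    {
        return @"CLSID\" + clsid.ToString("B") + @"\InprocServer32";
    }

    // Windows only: returns the registered DLL path in the given view,
    // or null when the CLSID is not registered there.
    public static string FindServer(Guid clsid, RegistryView view)
    {
        using (RegistryKey root = RegistryKey.OpenBaseKey(RegistryHive.ClassesRoot, view))
        using (RegistryKey key = root.OpenSubKey(InprocServerKey(clsid)))
        {
            // The default (unnamed) value of InprocServer32 holds the DLL path.
            return key?.GetValue(null) as string;
        }
    }
}
```

Calling `FindServer` once with `RegistryView.Registry32` and once with `RegistryView.Registry64` shows whether the 32-bit and 64-bit registrations exist and whether they point at the same copy of the DLL.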

On Windows ARM:

- System32 contains ARM64 binaries

- SysWOW64 contains x86 binaries

- x64 binaries often run through emulation layers

So now the questions multiply:

- Which regsvr32 did the installer call?

- Was it ARM64, x64, or x86?

- Did the app run natively, or under emulation?

- Did the COM DLL match the process architecture?
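The last question can be answered without loading the DLL, because the target architecture is stored in the PE header. A minimal sketch, assuming a well-formed PE file; `PeArchitecture` is a hypothetical helper name, and hybrid images such as ARM64X are not handled.

```csharp
using System;
using System.IO;
using System.Text;

public static class PeArchitecture
{
    // Returns "x86", "x64", "ARM64", or "unknown (0x....)" for a PE image.
    public static string Read(Stream image)
    {
        using (var reader = new BinaryReader(image, Encoding.ASCII, leaveOpen: true))
        {
            image.Seek(0x3C, SeekOrigin.Begin);      // e_lfanew: offset of the PE header
            int peOffset = reader.ReadInt32();

            image.Seek(peOffset, SeekOrigin.Begin);
            if (reader.ReadUInt32() != 0x00004550)   // "PE\0\0" signature
                throw new InvalidDataException("Not a PE image.");

            ushort machine = reader.ReadUInt16();    // IMAGE_FILE_HEADER.Machine
            switch (machine)
            {
                case 0x014C: return "x86";
                case 0x8664: return "x64";
                case 0xAA64: return "ARM64";
                default: return "unknown (0x" + machine.ToString("X4") + ")";
            }
        }
    }
}
```

Comparing this value with `Environment.Is64BitProcess` (or `RuntimeInformation.ProcessArchitecture` on .NET Framework 4.7.1 and later) gives a quick mismatch check before COM activation is even attempted.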

The result is a system where:

- Some things work on Intel machines

- Some things work on ARM machines

- Some things only work if another version was installed first

At this point, debugging stops being logical and starts being archaeological.

Why This Is So Common in .NET Framework 4.x Apps

Many enterprise and media-heavy applications built on:

- .NET Framework 4.0–4.8.1

- WinForms or WPF

- DirectShow or ActiveX components

were designed in an era where:

- Global COM registration was normal

- Side-by-side isolation was rare

- “Just register the DLL” was accepted practice

These systems work, but they’re fragile — especially on developer machines.

Where the Article Is Going Next

In the rest of this article series, we’ll look at:

- Why install-time registration is often a mistake

- How to isolate development vs runtime environments

- Techniques like:

- Dedicated dev VMs

- Registration-free COM (where possible)

- App-local COM deployment

- Clear ownership rules for installers

- How to survive (and maintain) legacy .NET Framework systems without losing your sanity

If you’ve ever broken your own development environment just by testing your installer — you’re not alone.

This is the cost of living at the intersection of managed code and unmanaged history.

by Joche Ojeda | Jan 8, 2026 | C#, XAF

Async/await in C# is often described as “non-blocking,” but that description hides an important detail:

await is not just about waiting — it is about where execution continues afterward.

Understanding that single idea explains:

- why deadlocks happen,

- why ConfigureAwait(false) exists,

- and why it *reduces* damage without fixing the root cause.

This article is not just theory. It’s written because this exact class of problem showed up again in real production code during the first week of 2026 — and it took a context-level fix to resolve it.

The Hidden Mechanism: Context Capture

When you await a task, C# does two things:

- It suspends the current method until the awaited task completes, without blocking the thread.

- It captures the current SynchronizationContext (or, if there is none, the current TaskScheduler) so the continuation can resume there.

That context might be:

- a UI thread (WPF, WinForms, MAUI),

- a request context (classic ASP.NET),

- or no special context at all (ASP.NET Core, console apps).

This default behavior is intentional. It allows code like this to work safely:

var data = await LoadAsync();

MyLabel.Text = data.Name; // UI-safe continuation

But that same mechanism becomes dangerous when async code is blocked synchronously.
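The capture itself can be observed with a small experiment. The sketch below installs a hypothetical `CountingContext` (an illustration type, not part of any framework) that counts how many continuations are posted to it and then runs them on the thread pool, so nothing can deadlock. `ContextCaptureDemo` is likewise an assumed name.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical context: counts posted continuations, then runs them on the thread pool.
public sealed class CountingContext : SynchronizationContext
{
    private int _posts;
    public int Posts { get { return Volatile.Read(ref _posts); } }

    public override void Post(SendOrPostCallback d, object state)
    {
        Interlocked.Increment(ref _posts);
        ThreadPool.QueueUserWorkItem(_ => d(state));
    }
}

public static class ContextCaptureDemo
{
    public static async Task<int> CountPostsAsync(bool continueOnCapturedContext)
    {
        var context = new CountingContext();
        SynchronizationContext previous = SynchronizationContext.Current;
        Task pending;

        SynchronizationContext.SetSynchronizationContext(context);
        try
        {
            // Runs synchronously up to the first incomplete await; that is where capture happens.
            pending = DelayAsync(continueOnCapturedContext);
        }
        finally
        {
            SynchronizationContext.SetSynchronizationContext(previous);
        }

        await pending.ConfigureAwait(false);
        return context.Posts;
    }

    private static async Task DelayAsync(bool continueOnCapturedContext)
    {
        await Task.Delay(50).ConfigureAwait(continueOnCapturedContext);
    }
}
```

With `continueOnCapturedContext: true` the continuation is posted to the context once; with `false` it is never posted. A UI context differs from this toy in one important way: it runs posted work on a single thread, which is exactly what makes blocking that thread fatal.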

The Root Problem: Blocking on Async

Deadlocks typically appear when async code is forced into a synchronous shape:

var result = GetDataAsync().Result; // or .Wait()

What happens next:

- The calling thread blocks, waiting for the async method to finish.

- The async method completes its awaited operation.

- The continuation tries to resume on the original context.

- That context is blocked.

- Nothing can proceed.

💥 Deadlock.

This is not an async bug. This is a context dependency cycle.

The Blast Radius Concept

Blocking on async is the explosion.

The blast radius is how much of the system is taken down with it.

Full blast (default await)

- Continuation *requires* the blocked context

- The async operation cannot complete

- The caller never unblocks

- Everything stops

Reduced blast (ConfigureAwait(false))

- Continuation does not require the original context

- It resumes on a thread pool thread

- The async operation completes

- The blocking call unblocks

The original mistake still exists — but the damage is contained.

The real fix is “don’t block on async,”

but ConfigureAwait(false) reduces the blast radius when someone does.

What ConfigureAwait(false) Actually Does

await SomeAsyncOperation().ConfigureAwait(false);

This tells the runtime:

“I don’t need to resume on the captured context. Continue wherever it’s safe to do so.”

Important clarifications:

- It does not make code faster by default

- It does not make code parallel

- It does not remove the need for proper async flow

- It only removes context dependency

Why This Matters in Real Code

Async code rarely exists in isolation.

A method often awaits another method, which awaits another:

await AAsync();

await BAsync();

await CAsync();

If any method in that chain requires a specific context, the entire chain becomes context-bound.

That is why:

- library code must be careful,

- deep infrastructure layers must avoid context assumptions,

- and UI layers must be explicit about where context is required.

When ConfigureAwait(false) Is the Right Tool

Use it when all of the following are true:

- The method does not interact with UI state

- The method does not depend on a request context

- The method is infrastructure, library, or backend logic

- The continuation does not care which thread resumes it

This is especially true for:

- NuGet packages

- shared libraries

- data access layers

- network and IO pipelines
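As an illustration of that checklist, here is a minimal sketch of a library-style helper. `TextFileStore` and `CountLinesAsync` are hypothetical names; the point is only that the method touches no UI or request state, so every await can opt out of the captured context.

```csharp
using System;
using System.IO;
using System.Text;
using System.Threading.Tasks;

public static class TextFileStore
{
    // Library-style helper: nothing after an await needs the caller's context.
    public static async Task<int> CountLinesAsync(string path)
    {
        int lines = 0;
        using (var stream = new FileStream(path, FileMode.Open, FileAccess.Read,
                                           FileShare.Read, 4096, useAsync: true))
        using (var reader = new StreamReader(stream, Encoding.UTF8))
        {
            // ConfigureAwait(false) on every await inside the library method.
            while (await reader.ReadLineAsync().ConfigureAwait(false) != null)
            {
                lines++;
            }
        }
        return lines;
    }
}
```

A UI caller still writes `int n = await TextFileStore.CountLinesAsync(path);` without `ConfigureAwait(false)`, because its own continuation does need the UI context.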

What It Is Not

ConfigureAwait(false) is not:

- a fix for bad async usage

- a substitute for proper async flow

- a reason to block on tasks

- something to blindly apply everywhere

It is a damage-control tool, not a cure.

A Real Incident: When None of the Usual Fixes Worked

First week of 2026.

The first task I had with the programmers in my office was to investigate a problem in a trading block. The symptoms looked like a classic async issue: timing bugs, inconsistent behavior, and freezes that felt “await-shaped.”

We did what experienced .NET teams typically do when async gets weird:

- Reviewed the full async/await chain end-to-end

- Double-checked the source code carefully (everything looked fine)

- Tried the usual “tools people reach for” under pressure:

- .Wait()

- .GetAwaiter().GetResult()

- wrapping in Task.Run(...)

- adding ConfigureAwait(false)

- mixing combinations of those approaches

None of it reliably fixed the problem.

At that point it stopped being a “missing await” story. It became a “the model is right but reality disagrees” story.

One of the programmers, Daniel, and I went deeper. I found myself mentally replaying every async pattern I know — especially because I’ve written async-heavy code myself, including library work like SyncFramework, where I synchronize databases and deal with long-running operations.

That’s the moment where this mental model matters: it forces you to stop treating await like syntax and start treating it like mechanics.

The Actual Root Cause: It Was the Context

In the end, the culprit wasn’t which pattern we used — it was where the continuation was allowed to run.

This application was built on DevExpress XAF. In this environment, the “correct” continuation behavior is often tied to XAF’s own scheduling and application lifecycle rules. XAF provides a mechanism to run code in its synchronization context — for example using BlazorApplication.InvokeAsync, which ensures that continuations run where the framework expects.

Once we executed the problematic pipeline through XAF’s synchronization context, the issue was solved.

No clever pattern. No magical await. No extra parallelism.

Just: the right context.

And this is not unique to XAF. Similar ideas exist in:

- Windows Forms (UI thread affinity + SynchronizationContext)

- WPF (Dispatcher context)

- Any framework that requires work to resume on a specific thread/context
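What "running work in the framework's context" means can be sketched generically. The helper below is hypothetical: `ContextInvoker` and `InvokeOnAsync` are illustration names, not XAF or DevExpress APIs, and the sketch assumes the caller already holds a reference to the framework's `SynchronizationContext`. In XAF itself the framework-provided mechanism mentioned above is the one to use.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class ContextInvoker
{
    // Posts async work to a specific context and returns a task that
    // completes (or faults) when that work has finished there.
    public static Task InvokeOnAsync(SynchronizationContext context, Func<Task> work)
    {
        var tcs = new TaskCompletionSource<bool>(
            TaskCreationOptions.RunContinuationsAsynchronously);

        context.Post(async _ =>
        {
            try
            {
                // Awaits inside 'work' resume on whatever context is current
                // while the posted callback runs.
                await work();
                tcs.SetResult(true);
            }
            catch (Exception ex)
            {
                tcs.SetException(ex);
            }
        }, null);

        return tcs.Task;
    }
}
```

The caller awaits the returned task instead of blocking on it, so the context stays free to run the posted work.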

Why I’m Writing This

What I wanted from this experience is simple: don’t forget it.

Because what makes this kind of incident dangerous is that it looks like a normal async bug — and the internet is full of “four fixes” people cycle through:

- add/restore missing await

- use .Wait() / .Result

- wrap in Task.Run()

- use ConfigureAwait(false)

Sometimes those are relevant. Sometimes they’re harmful. And sometimes… they’re all beside the point.

In our case, the missing piece was framework context — and once you see that, you realize why the “blast radius” framing is so useful:

- Blocking is the explosion.

- ConfigureAwait(false) contains damage when someone blocks.

- If a framework requires a specific synchronization context, the fix may be to supply the correct context explicitly.

That’s what happened here. And that’s why I’m capturing it as live knowledge, not just documentation.

The Mental Model to Keep

- Async bugs are often context bugs

- Blocking creates the explosion

- Context capture determines the blast radius

- ConfigureAwait(false) limits the damage

- Proper async flow prevents the explosion entirely

- Frameworks may require their own synchronization context

- Correct async code can still fail in the wrong context

Async is not just about tasks. It’s about where your code is allowed to continue.

by Joche Ojeda | Jan 5, 2026 | Uncategorized

How I stopped my multilingual activity stream from turning RAG into chaos

In the previous article, “RAG with PostgreSQL and C# (pros and cons)”, I explained how naïve RAG breaks when you run it over an activity stream.

Same UI language.

Totally unpredictable content language.

Spanish, Russian, Italian… sometimes all in the same message.

Humans handle that fine.

Vector retrieval… not so much.

This is the “silent failure” scenario: retrieval looks plausible, the LLM sounds confident, and you ship nonsense.

So I had to change the game.

The Idea: Structured RAG

Structured RAG means you don’t embed raw text and pray.

You add a step before retrieval:

- Extract a structured representation from each activity record

- Store it as metadata (JSON)

- Use that metadata to filter, route, and rank

- Then do vector similarity on a cleaner, more stable representation

Think of it like this:

Unstructured text is what users write.

Structured metadata is what your RAG system can trust.

Why This Fix Works for Mixed Languages

The core problem with activity streams is not “language”.

The core problem is: you have no stable shape.

When the shape is missing, everything becomes fuzzy:

- Who is speaking?

- What is this about?

- Which entities are involved?

- Is this a reply, a reaction, a mention, a task update?

- What language(s) are in here?

Structured RAG forces you to answer those questions once, at write-time, and save the answers.

PostgreSQL: Add a JSONB Column (and Keep pgvector)

We keep the previous approach (pgvector) but we add a JSONB column for structured metadata.

ALTER TABLE activities

ADD COLUMN rag_meta jsonb NOT NULL DEFAULT '{}'::jsonb;

-- Optional: if you store embeddings per activity/chunk

-- you keep your existing embedding column(s) or chunk table.

Then index it.

CREATE INDEX activities_rag_meta_gin

ON activities

USING gin (rag_meta);

Now you can filter with JSON queries before you ever touch vector similarity.

A Proposed Schema (JSON Shape You Control)

The exact schema depends on your product, but for activity streams I want at least:

- languages: detected languages + confidence

- actor: who did it

- subjects: what object is involved (ticket, order, user, document)

- topics: normalized tags

- relationships: reply-to, mentions, references

- summary: short canonical summary (ideally in one pivot language)

- intent: intent/type, plus other signals such as sentiment if you need them

Example JSON for one activity record:

    {
      "schemaVersion": 1,
      "languages": [
        { "code": "es", "confidence": 0.92 },
        { "code": "ru", "confidence": 0.41 }
      ],
      "actor": {
        "id": "user:42",
        "displayName": "Joche"
      },
      "subjects": [
        { "type": "ticket", "id": "ticket:9831" }
      ],
      "topics": ["billing", "invoice", "error"],
      "relationships": {
        "replyTo": "activity:9912001",
        "mentions": ["user:7", "user:13"]
      },
      "intent": "support_request",
      "summary": {
        "pivotLanguage": "en",
        "text": "User reports an invoice calculation error and asks for help."
      }
    }

Notice what happened here: the raw multilingual chaos got converted into a stable structure.

Write-Time Pipeline (The Part That Feels Expensive, But Saves You)

Structured RAG shifts work to ingestion time.

Yes, it costs tokens.

Yes, it adds steps.

But it gives you something you never had before: predictable retrieval.

Here’s the pipeline I recommend:

- Store raw activity (as-is, don’t lose the original)

- Detect language(s) (fast heuristic + LLM confirmation if needed)

- Extract structured metadata into your JSON schema

- Generate a canonical “summary” in a pivot language (often English)

- Embed the summary + key fields (not the raw messy text)

- Save JSON + embedding

The key decision: embed the stable representation, not the raw stream text.
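The "fast heuristic" in the language-detection step can be as simple as looking at which scripts a message uses. The sketch below is an assumption about what such a first pass might look like, not the pipeline used in production; `ScriptHeuristic` is a hypothetical name. It only separates Latin from Cyrillic letters, so it can flag a Spanish/Russian mix but cannot tell Spanish from Italian. That decision is left to a later step, such as the LLM confirmation.

```csharp
using System;
using System.Collections.Generic;

public static class ScriptHeuristic
{
    // Returns the share of letters per script: "Latin", "Cyrillic", "Other".
    public static Dictionary<string, double> LetterShares(string text)
    {
        var counts = new Dictionary<string, int>
        {
            { "Latin", 0 }, { "Cyrillic", 0 }, { "Other", 0 }
        };
        int letters = 0;

        foreach (char c in text)
        {
            if (!char.IsLetter(c)) continue;
            letters++;

            if (c >= '\u0400' && c <= '\u04FF') counts["Cyrillic"]++;  // Cyrillic block
            else if (c <= '\u024F') counts["Latin"]++;                 // Basic Latin through Latin Extended-B
            else counts["Other"]++;
        }

        var shares = new Dictionary<string, double>();
        foreach (var pair in counts)
        {
            shares[pair.Key] = letters == 0 ? 0.0 : (double)pair.Value / letters;
        }
        return shares;
    }
}
```

A message where no single script dominates is a candidate for the more expensive detection path; everything else can skip it.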

C# Conceptual Implementation

I’m going to keep the code focused on the architecture. Provider details are swappable.

Entities

    public sealed class Activity
    {
        public long Id { get; set; }
        public string RawText { get; set; } = "";
        public string UiLanguage { get; set; } = "en";

        // JSONB column in Postgres
        public string RagMetaJson { get; set; } = "{}";

        // Vector (pgvector) - store via your pgvector mapping or raw SQL
        public float[] RagEmbedding { get; set; } = Array.Empty<float>();

        public DateTimeOffset CreatedAt { get; set; }
    }

Metadata Contract (Strongly Typed in Code, Stored as JSONB)

    public sealed class RagMeta
    {
        public int SchemaVersion { get; set; } = 1;
        public List<DetectedLanguage> Languages { get; set; } = new();
        public ActorMeta Actor { get; set; } = new();
        public List<SubjectMeta> Subjects { get; set; } = new();
        public List<string> Topics { get; set; } = new();
        public RelationshipMeta Relationships { get; set; } = new();
        public string Intent { get; set; } = "unknown";
        public SummaryMeta Summary { get; set; } = new();
    }

    public sealed class DetectedLanguage
    {
        public string Code { get; set; } = "und";
        public double Confidence { get; set; }
    }

    public sealed class ActorMeta
    {
        public string Id { get; set; } = "";
        public string DisplayName { get; set; } = "";
    }

    public sealed class SubjectMeta
    {
        public string Type { get; set; } = "";
        public string Id { get; set; } = "";
    }

    public sealed class RelationshipMeta
    {
        public string? ReplyTo { get; set; }
        public List<string> Mentions { get; set; } = new();
    }

    public sealed class SummaryMeta
    {
        public string PivotLanguage { get; set; } = "en";
        public string Text { get; set; } = "";
    }

Extractor + Embeddings

You need two services:

- Metadata extraction (LLM fills the schema)

- Embeddings (Microsoft.Extensions.AI) for the stable text

public interface IRagMetaExtractor

{

Task<RagMeta> ExtractAsync(Activity activity, CancellationToken ct);

}

Then the ingestion pipeline:

    using System.Text.Json;
    using Microsoft.Extensions.AI;

    public sealed class StructuredRagIngestor
    {
        // camelCase property names, so the stored JSON matches the schema and the SQL filters
        private static readonly JsonSerializerOptions JsonOptions =
            new() { PropertyNamingPolicy = JsonNamingPolicy.CamelCase };

        private readonly IRagMetaExtractor _extractor;
        private readonly IEmbeddingGenerator<string, Embedding<float>> _embeddings;

        public StructuredRagIngestor(
            IRagMetaExtractor extractor,
            IEmbeddingGenerator<string, Embedding<float>> embeddings)
        {
            _extractor = extractor;
            _embeddings = embeddings;
        }

        public async Task ProcessAsync(Activity activity, CancellationToken ct)
        {
            // 1) Extract structured JSON
            RagMeta meta = await _extractor.ExtractAsync(activity, ct);

            // 2) Create stable text for embeddings (summary + keywords)
            string stableText =
                $"{meta.Summary.Text}\n" +
                $"Topics: {string.Join(", ", meta.Topics)}\n" +
                $"Intent: {meta.Intent}";

            // 3) Embed stable text. The token is passed by name because the
            //    second positional parameter of GenerateAsync is the options object.
            var emb = await _embeddings.GenerateAsync(new[] { stableText }, cancellationToken: ct);
            float[] vector = emb[0].Vector.ToArray();

            // 4) Save into activity record
            activity.RagMetaJson = JsonSerializer.Serialize(meta, JsonOptions);
            activity.RagEmbedding = vector;

            // db.SaveChangesAsync(ct) happens outside (unit of work)
        }
    }

This is the core move: you stop embedding chaos and start embedding structure.

Query Pipeline: JSON First, Vectors Second

When querying, you don’t jump into similarity search immediately.

You do:

- Parse the user question

- Decide filters (actor, subject type, topic)

- Filter with JSONB (fast narrowing)

- Then do vector similarity on the remaining set

Example: filter by topic + intent using JSONB:

    SELECT id, raw_text
    FROM activities
    -- One containment test covers both fields and can use the GIN index on rag_meta
    WHERE rag_meta @> '{"intent":"support_request","topics":["invoice"]}'::jsonb
    ORDER BY rag_embedding <=> @query_embedding
    LIMIT 20;

That “JSON first” step is what keeps multilingual streams from poisoning your retrieval.
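On the C# side, the argument for the `@>` operator is just a small JSON document built from whatever filters the question produced. A minimal sketch, assuming only intent and topics are used as filters; `RagFilterBuilder` is a hypothetical name, and the key names are assumed to match the camelCase schema shown earlier.

```csharp
using System.Collections.Generic;
using System.Text.Json;

public static class RagFilterBuilder
{
    // Builds the JSON document passed as the right-hand side of jsonb @> (containment).
    // Filters that are null or empty are simply left out.
    public static string Build(string intent, IReadOnlyCollection<string> topics)
    {
        var filter = new Dictionary<string, object>();

        if (!string.IsNullOrEmpty(intent))
        {
            filter["intent"] = intent;
        }
        if (topics != null && topics.Count > 0)
        {
            // Array containment: a row matches if its topics include all of these.
            filter["topics"] = topics;
        }

        return JsonSerializer.Serialize(filter);
    }
}
```

The resulting string is sent as a parameter and cast to `jsonb` in the query, so user input never gets concatenated into the SQL text.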

Tradeoffs (Because Nothing Is Free)

Structured RAG costs more at write-time:

- more tokens

- more latency

- more moving parts

But it saves you at query-time:

- less noise

- better precision

- more predictable answers

- debuggable failures (because you can inspect metadata)

In real systems, I’ll take predictable and debuggable over “cheap but random” every day.

Final Thought

RAG over activity streams is hard because activity streams are messy by design.

If you want RAG to behave, you need structure.

Structured RAG is how you make retrieval boring again.

And boring retrieval is exactly what you want.

In the next article, I’ll go deeper into the exact pipeline details: language routing, mixed-language detection, pivot summaries, chunk policies, and how I made this production-friendly without turning it into a token-burning machine.

Let the year begin 🚀


by Joche Ojeda | Jan 5, 2026 | A.I, Postgres

RAG with PostgreSQL and C#

Happy New Year 2026 — let the year begin

Happy New Year 2026 🎉

Let’s start the year with something honest.

This article exists because something broke.

I wasn’t trying to build a demo.

I was building an activity stream — the kind of thing every social or collaborative system eventually needs.

Posts.

Comments.

Reactions.

Short messages.

Long messages.

Noise.

At some point, the obvious question appeared:

“Can I do RAG over this?”

That question turned into this article.

The Original Problem: RAG over an Activity Stream

An activity stream looks simple until you actually use it as input.

In my case:

- The UI language was English

- The content language was… everything else

Users were writing:

- Spanish

- Russian

- Italian

- English

- Sometimes all of them in the same message

Perfectly normal for humans.

Absolutely brutal for naïve RAG.

I tried the obvious approach:

- embed everything

- store vectors

- retrieve similar content

- augment the prompt

And very quickly, RAG went crazy.

Why It Failed (And Why This Matters)

The failure wasn’t dramatic.

No exceptions.

No errors.

Just… wrong answers.

Confident answers.

Fluent answers.

Wrong answers.

The problem was subtle:

- Same concept, different languages

- Mixed-language sentences

- Short, informal activity messages

- No guarantee of language consistency

In an activity stream:

- You don’t control the language

- You don’t control the structure

- You don’t even control what a “document” is

And RAG assumes you do.

That’s when I stopped and realized:

RAG is not “plug-and-play” once your data becomes messy.

So… What Is RAG Really?

RAG stands for Retrieval-Augmented Generation.

The idea is simple:

Retrieve relevant data first, then let the model reason over it.

Instead of asking the model to remember everything, you let it look things up.

Search first.

Generate second.

Sounds obvious.

Still easy to get wrong.

The Real RAG Pipeline (No Marketing)

A real RAG system looks like this:

- Your data lives in a database

- Text is split into chunks

- Each chunk becomes an embedding

- Embeddings are stored as vectors

- A user asks a question

- The question is embedded

- The closest vectors are retrieved

- Retrieved content is injected into the prompt

- The model answers

Every step can fail silently.

Tokenization & Chunking (The First Trap)

Models don’t read text.

They read tokens.

This matters because:

- prompts have hard limits

- activity streams are noisy

- short messages lose context fast

You usually don’t tokenize manually, but you do choose:

- chunk size

- overlap

- grouping strategy

In activity streams, chunking is already a compromise — and multilingual content makes it worse.
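The two knobs, chunk size and overlap, are easiest to see in code. The sketch below splits on whitespace and counts words, which is only a rough stand-in for tokens; `Chunker` is a hypothetical name, and a real pipeline would measure size with the tokenizer of the embedding model.

```csharp
using System;
using System.Collections.Generic;

public static class Chunker
{
    // Splits text into windows of 'size' words; each window repeats the
    // last 'overlap' words of the previous one.
    public static List<string> ByWords(string text, int size, int overlap)
    {
        if (size <= 0) throw new ArgumentOutOfRangeException(nameof(size));
        if (overlap < 0 || overlap >= size) throw new ArgumentOutOfRangeException(nameof(overlap));

        // A null separator array means "split on any whitespace".
        string[] words = text.Split((char[])null, StringSplitOptions.RemoveEmptyEntries);
        var chunks = new List<string>();
        int step = size - overlap;

        for (int start = 0; start < words.Length; start += step)
        {
            int count = Math.Min(size, words.Length - start);
            chunks.Add(string.Join(" ", words, start, count));
            if (start + count >= words.Length) break;   // the last window reached the end
        }
        return chunks;
    }
}
```

For a typical activity message the whole text fits in one chunk, which is part of the problem: there is nothing to overlap with, so the message carries only its own thin context.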

Embeddings in .NET (Microsoft.Extensions.AI)

In .NET, embeddings are generated using Microsoft.Extensions.AI.

The important abstraction is:

IEmbeddingGenerator<TInput, TEmbedding>

This keeps your architecture:

- provider-agnostic

- DI-friendly

- survivable over time

Minimal Setup

dotnet add package Microsoft.Extensions.AI

dotnet add package Microsoft.Extensions.AI.OpenAI

Creating an Embedding Generator

using OpenAI;

using Microsoft.Extensions.AI;

using Microsoft.Extensions.AI.OpenAI;

var client = new OpenAIClient("YOUR_API_KEY");

IEmbeddingGenerator<string, Embedding<float>> embeddings =

client.AsEmbeddingGenerator("text-embedding-3-small");

Generating a Vector

var result = await embeddings.GenerateAsync(

new[] { "Some activity text" });

float[] vector = result.First().Vector.ToArray();

That vector is what drives everything that follows.

⚠️ Embeddings Are Model-Locked (And Language Makes It Worse)

Embeddings are model-locked.

Meaning:

Vectors from different embedding models cannot be compared.

Even if:

- the dimension matches

- the text is identical

- the provider is the same

Each model defines its own universe.

But here’s the kicker I learned the hard way:

Multilingual content amplifies this problem.

Even with multilingual-capable models:

- language mixing shifts vector space

- short messages lose semantic anchors

- similarity becomes noisy

In an activity stream:

- English UI

- Spanish content

- Russian replies

- Emoji everywhere

Vector distance starts to mean “kind of related, maybe”.

That’s not good enough.

PostgreSQL + pgvector (Still the Right Choice)

Despite all that, PostgreSQL with pgvector is still the right foundation.

Enable pgvector

CREATE EXTENSION IF NOT EXISTS vector;

Chunk-Based Table

    CREATE TABLE doc_chunks (
        id          bigserial PRIMARY KEY,
        document_id bigint NOT NULL,
        chunk_index int NOT NULL,
        content     text NOT NULL,
        embedding   vector(1536) NOT NULL,
        created_at  timestamptz NOT NULL DEFAULT now()
    );

Technically correct.

Architecturally incomplete — as I later discovered.

Retrieval: Where Things Quietly Go Wrong

SELECT content

FROM doc_chunks

ORDER BY embedding <=> @query_embedding

LIMIT 5;

This query decides:

- what the model sees

- what it ignores

- how wrong the answer will be

When language is mixed, retrieval looks correct — but isn’t.

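Classic example: “Mosca”.

For a Spanish speaker, “mosca” means fly, the insect. In Italian the word also means fly, but capitalized it is the name of the city of Moscow. In a short mixed-language message, similarity search has little context to tell the two apart.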
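For reference, the `<=>` operator in the retrieval query is pgvector's cosine distance. A minimal C# sketch of the same computation (`VectorMath` is a hypothetical name) makes the limitation visible: the result depends only on the angle between two vectors, so it has no notion of which language or which sense of a word produced them.

```csharp
using System;

public static class VectorMath
{
    // Cosine distance = 1 - cosine similarity.
    // 0 means same direction, 1 means orthogonal, 2 means opposite.
    public static double CosineDistance(float[] a, float[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Vectors must have the same dimension.");

        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += (double)a[i] * b[i];
            normA += (double)a[i] * a[i];
            normB += (double)b[i] * b[i];
        }

        // A zero vector has no direction; the result is NaN in that case.
        return 1.0 - dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }
}
```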

Why RAG Failed in This Scenario

Let’s be honest:

- Similar ≠ relevant

- Multilingual ≠ multilingual-safe

- Short activity messages ≠ documents

- Noise ≠ knowledge

RAG didn’t fail because the model was bad.

It failed because the data had no structure.

Why This Article Exists

This article exists because:

- I tried RAG on a real system

- With real users

- Writing in real languages

- In real combinations

And the naïve RAG approach didn’t survive.

What Comes Next

The next article will be about structured RAG.

How I fixed this by:

- introducing structure into the activity stream

- separating concerns in the pipeline

- controlling language before retrieval

- reducing semantic noise

- making RAG predictable again

In other words:

How to make RAG work after it breaks.

Final Thought

RAG is not magic.

It’s:

search + structure + discipline

If your data is chaotic, RAG will faithfully reflect that chaos — just with confidence.

Happy New Year 2026 🎆

If you’re reading this:

Happy New Year 2026.

Let’s make this the year we stop trusting demos

and start trusting systems that survived reality.

Let the year begin 🚀

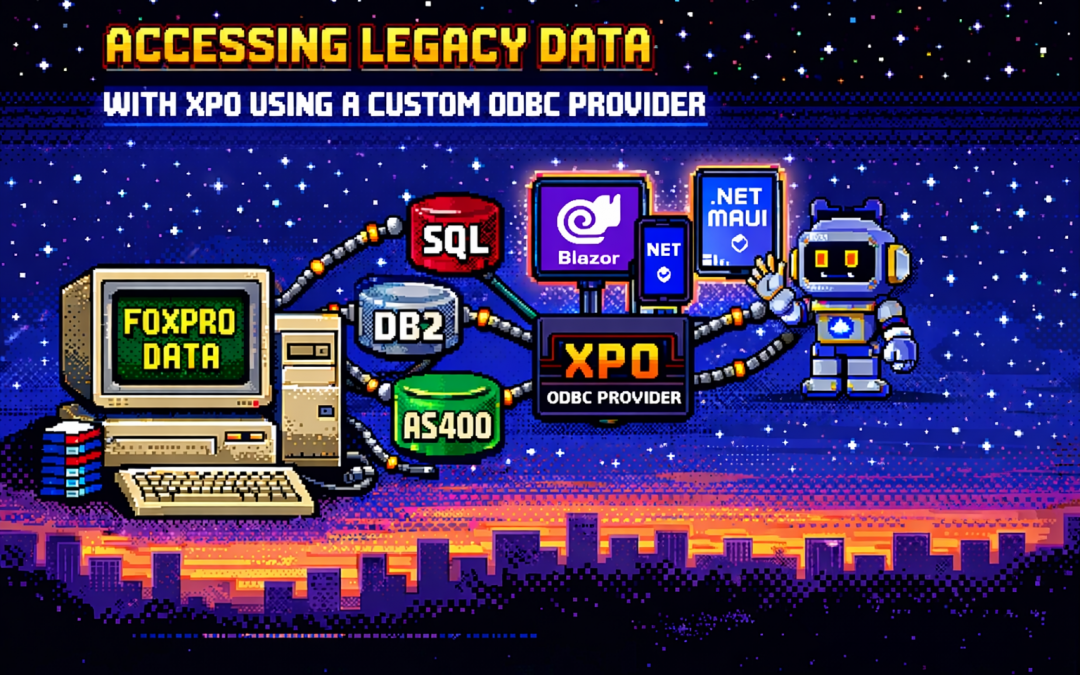

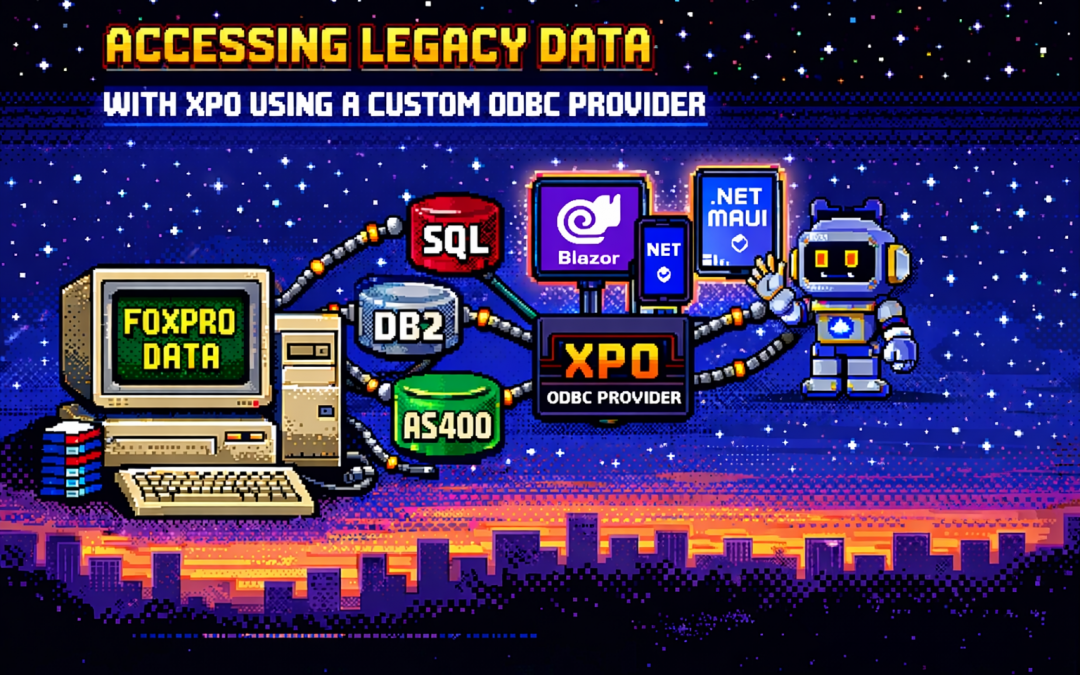

by Joche Ojeda | Dec 23, 2025 | ADO, ADO.NET, XPO

One of the recurring challenges in real-world systems is not building new software; it’s integrating with software that already exists.

Legacy systems don’t disappear just because newer technologies are available. They survive because they work, because they hold critical business data, and because replacing them is often risky, expensive, or simply not allowed.

This article explores a practical approach to accessing legacy data using XPO by leveraging ODBC, not as a universal abstraction, but as a bridge when no modern provider exists.

The Reality of Legacy Systems

Many organizations still rely on systems built on technologies such as:

- FoxPro tables

- AS400 platforms

- DB2-based systems

- Proprietary or vendor-abandoned databases

In these scenarios, it’s common to find that:

- There is no modern .NET provider

- There is no ORM support

- There is an ODBC driver

That last point is crucial. ODBC often remains available long after official SDKs and providers have disappeared.

It becomes the last viable access path to critical data.

Why ORMs Struggle with Legacy Data

Modern ORMs assume a relatively friendly environment: a supported database engine, a known SQL dialect, a compatible type system, and an actively maintained provider.

Legacy databases rarely meet those assumptions. As a result, teams are often forced to:

- Drop down to raw SQL

- Build ad-hoc data access layers

- Treat legacy data as a second-class citizen

This becomes especially painful in systems that already rely heavily on DevExpress XPO for persistence, transactions, and domain modeling.

ODBC Is Not Magic — and That’s the Point

ODBC is often misunderstood.

Using ODBC does not mean:

- One provider works for every database

- SQL becomes standardized

- Type systems become compatible

Each ODBC-accessible database still has:

- Its own SQL dialect

- Its own limitations

- Its own data types

- Its own behavioral quirks

ODBC simply gives you a way in. It is a transport mechanism, not a universal language.

What an XPO ODBC Provider Really Is

When you implement an XPO provider on top of ODBC, you are not building a generic solution for all databases.

You are building a targeted adapter for a specific legacy system that happens to be reachable via ODBC.

This matters because ODBC is used here as a pragmatic trick:

- To connect to something you otherwise couldn’t

- To reuse an existing, stable access path

- To avoid rewriting or destabilizing legacy systems

The database still dictates the SQL dialect, supported features, and type system. Your provider must respect those constraints.

Why XPO Makes This Possible

XPO is not just an ORM — it is a provider-based persistence framework.

All SQL-capable XPO providers are built on top of a shared foundation, most notably:

ConnectionProviderSql

https://docs.devexpress.com/CoreLibraries/DevExpress.Xpo.DB.ConnectionProviderSql

This architecture allows you to reuse XPO’s core benefits:

- Object model

- Sessions and units of work

- Transaction handling

- Integration with domain logic

While customizing what legacy systems require:

- SQL generation

- Command execution

- Schema discovery

- Type mapping

Dialects and Type Systems Still Matter

Even when accessed through ODBC:

- FoxPro is not SQL Server

- DB2 is not PostgreSQL

- AS400 is not Oracle

Each system has its own:

- Date and time semantics

- Numeric precision rules

- String handling behavior

- Constraints and limits

An XPO ODBC provider must explicitly map database types, handle dialect-specific SQL, and avoid assumptions about “standard SQL.” ODBC opens the door; it does not normalize what’s inside.
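"Explicitly map database types" can be pictured as a lookup table that refuses to guess. The sketch below is purely illustrative: `LegacyTypeMap` is a hypothetical class, not part of XPO, and the type names and CLR targets are assumptions that would have to be checked against the specific database and ODBC driver.

```csharp
using System;
using System.Collections.Generic;

public static class LegacyTypeMap
{
    // Hypothetical entries; the real list depends on the database and driver.
    private static readonly Dictionary<string, Type> Map =
        new Dictionary<string, Type>(StringComparer.OrdinalIgnoreCase)
        {
            { "CHAR", typeof(string) },
            { "VARCHAR", typeof(string) },
            { "INTEGER", typeof(int) },
            { "DECIMAL", typeof(decimal) },
            { "DATE", typeof(DateTime) },
            { "TIMESTAMP", typeof(DateTime) },
        };

    public static Type ToClrType(string dbTypeName)
    {
        Type clrType;
        if (Map.TryGetValue(dbTypeName, out clrType))
        {
            return clrType;
        }

        // Fail loudly instead of guessing: an unmapped type should be
        // a visible gap in the provider, not a silent conversion.
        throw new NotSupportedException("No mapping for database type '" + dbTypeName + "'.");
    }
}
```

The same idea extends to precision and date semantics: each rule is written down per database rather than inherited from an assumed standard.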

Real-World Experience: AS400 and DB2 in Production

This approach is not theoretical. Last year, we implemented a custom XPO provider using ODBC for AS400 and DB2 systems in Mexico, where:

- No viable modern .NET provider existed

- The systems were deeply embedded in business operations

- ODBC was the only stable integration path

By introducing an XPO provider on top of ODBC, we were able to integrate legacy data into a modern .NET architecture, preserve domain models and transactional behavior, and avoid rewriting or destabilizing existing systems.

The Hidden Advantage: Modern UI and AI Access

Once legacy data is exposed through XPO, something powerful happens: that data becomes immediately available to modern platforms.

- Blazor applications

- .NET MAUI mobile and desktop apps

- Background services

- Integration APIs

- AI agents and assistants

And you get this without rewriting the database, migrating the data, or changing the legacy system.

XPO becomes the adapter that allows decades-old data to participate in modern UI stacks, automated workflows, and AI-driven experiences.

Why Not Just Use Raw ODBC?

Raw ODBC gives you rows, columns, and primitive values. XPO gives you domain objects, identity tracking, relationships, transactions, and a consistent persistence model.

The goal is not to modernize the database. The goal is to modernize access to legacy data so it can safely participate in modern architectures.

Closing Thought

An XPO ODBC provider is not a silver bullet. It will not magically unify SQL dialects, type systems, or database behavior.

But when used intentionally, it becomes a powerful bridge between systems that cannot be changed and architectures that still need to evolve.

ODBC is the trick that lets you connect.

XPO is what makes that connection usable — everywhere, from Blazor UIs to AI agents.