by Joche Ojeda | Feb 11, 2026 | A.I

My last two articles have been about one idea: closing the loop with AI.

Not “AI-assisted coding.” Not “AI that helps you write functions.”

I’m talking about something else entirely.

I’m talking about building systems where the agent writes the code, tests the code, evaluates the result, fixes the code, and repeats, without me sitting in the middle acting like a tired QA engineer.

Because honestly, that middle position is the worst place to be.

You get exhausted. You lose objectivity. And eventually you look at the project and think:

everything here is garbage.

So the goal is simple:

Remove the human from the middle of the loop.

Place the human at the end of the loop.

The human should only confirm: “Is this what I asked for?”

Not manually test every button.

The Real Question: How Do You Close the Loop?

There isn’t a single answer. It depends on the technology stack and the type of application you’re building.

So far, I’ve been experimenting with three environments:

- Console applications

- Web applications

- Windows Forms applications (still a work in progress)

Each one requires a slightly different strategy.

But the core principle is always the same:

The agent must be able to observe what it did.

If the agent cannot see logs, outputs, state, or results — the loop stays open.

Console Applications: The Easiest Loop to Close

Console apps are the simplest place to start.

My setup is minimal and extremely effective:

- Serilog writing structured logs

- Logs written to the file system

- Output written to the console

Why both?

Because the agent (GitHub Copilot in VS Code) can run the app, read console output, inspect log files, decide what to fix, and repeat.

No UI. No browser. No complex state.

Just input → execution → output → evaluation.

If you want to experiment with autonomous loops, start here. Console apps are the cleanest lab environment you’ll ever get.
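
The run, read, evaluate cycle described above can be sketched in a few lines. This is a minimal illustration in Python rather than the .NET stack I actually use. It assumes the app writes one JSON object per log line, with the level in an `@l` field and the message template in `@mt` (the layout of Serilog's compact JSON formatter). The function `run_and_observe` and its return keys are hypothetical names.

```python
import json
import subprocess
import sys


def run_and_observe(command, log_lines):
    """Run a console app and collect what an agent could inspect afterwards."""
    completed = subprocess.run(command, capture_output=True, text=True)

    # Collect messages from log events whose level marks a failure.
    errors = []
    for line in log_lines:
        if not line.strip():
            continue
        event = json.loads(line)
        if event.get("@l") in ("Error", "Fatal"):
            errors.append(event.get("@mt", ""))

    return {
        "exit_code": completed.returncode,
        "stdout": completed.stdout,
        "errors": errors,
        "ok": completed.returncode == 0 and not errors,
    }


# Stand-in for the real app: a one-line Python program that prints a result.
observation = run_and_observe(
    [sys.executable, "-c", "print('processed 3 records')"],
    ['{"@mt": "Starting up"}', '{"@l": "Error", "@mt": "Record 2 failed"}'],
)
print(observation["ok"], observation["errors"])
```

The point is that a clean exit code alone is not enough: the logged error is what tells the agent there is still something to fix.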

Web Applications: Where Things Get Interesting

Web apps are more complex, but also more powerful.

My current toolset:

- Serilog for structured logging

- Logs written to filesystem

- SQLite for loop-friendly database inspection

- Playwright for automated UI testing

Even if production uses PostgreSQL or SQL Server, I use SQLite during loop testing.

Not for production. For iteration.

The SQLite CLI makes inspection trivial.

The agent can call the API, trigger workflows, query SQLite directly, verify results, and continue fixing.

That’s a full feedback loop. No human required.
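
As a rough sketch of the "query SQLite directly, verify results" step, here is a row-count check written against Python's built-in sqlite3 module. The `Orders` table and the `verify_rows` helper are made up for the example; a real loop would point at the app's own database file and tables.

```python
import sqlite3


def verify_rows(conn, table, expected_count):
    """Return (passed, actual_count) for a simple row-count check."""
    # Table names cannot be bound as parameters, so quote the identifier.
    quoted = '"' + table.replace('"', '""') + '"'
    actual = conn.execute(f"SELECT COUNT(*) FROM {quoted}").fetchone()[0]
    return actual == expected_count, actual


# Stand-in for the app's loop-testing database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Orders (Id INTEGER PRIMARY KEY, Status TEXT)")
conn.execute("INSERT INTO Orders (Status) VALUES ('Created'), ('Created')")

passed, actual = verify_rows(conn, "Orders", expected_count=2)
print(passed, actual)
```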

Playwright: Giving the Agent Eyes

For UI testing, Playwright is the key.

You can run it headless (fully autonomous) or with UI visible (my preferred mode).

Yes, I could remove myself completely. But I don’t.

Right now I sit outside the loop as an observer.

Not a tester. Not a debugger. Just watching.

If something goes completely off the rails, I interrupt.

Otherwise, I let the loop run.

This is an important transition:

From participant → to observer.

The Windows Forms Problem

Now comes the tricky part: Windows Forms.

Console apps are easy. Web apps have Playwright.

But desktop UI automation is messy.

Possible directions I’m exploring:

- UI Automation APIs

- WinAppDriver

- Logging + state inspection hybrid approach

- Screenshot-based verification

- Accessibility tree inspection

The goal remains the same: the agent must be able to verify what happened without me.

Once that happens, the loop closes.

What I’ve Learned So Far

1) Logs Are Everything

If the agent cannot read what happened, it cannot improve. Structured logs > pretty logs. Always.

2) SQLite Is the Perfect Loop Database

Not for production. For iteration. The ability to query state instantly from CLI makes autonomous debugging possible.

3) Agents Need Observability, Not Prompts

Most people focus on prompt engineering. I focus on observability engineering.

Give the agent visibility into logs, state, outputs, errors, and the database. Then iteration becomes natural.

4) Humans Should Validate Outcomes — Not Steps

The human should only answer: “Is this what I asked for?” Checking every intermediate step is what the agent is for.

My Current Loop Architecture (Simplified)

Specification → Agent writes code → Agent runs app → Agent tests → Agent reads logs/db → Agent fixes → Repeat → Human validates outcome

If the loop works, progress compounds with every iteration.

If the loop is broken, everything slows down.
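
The loop architecture above can be expressed as a small control loop. This is only a sketch in Python: `run_closed_loop`, `Observation`, and the two callbacks are hypothetical names, and the "agent" here is a toy stand-in that succeeds on its third change.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Observation:
    passed: bool
    details: str


def run_closed_loop(
    apply_change: Callable[[str], None],
    run_and_check: Callable[[], Observation],
    max_iterations: int = 5,
) -> Tuple[Optional[int], List[Observation]]:
    """Iterate change -> run -> observe until the checks pass or we give up."""
    history: List[Observation] = []
    feedback = "initial specification"
    for iteration in range(1, max_iterations + 1):
        apply_change(feedback)           # agent writes or fixes code
        observation = run_and_check()    # agent runs app, tests, reads logs/db
        history.append(observation)
        if observation.passed:
            return iteration, history    # hand off to the human for validation
        feedback = observation.details   # failures feed the next attempt
    return None, history


# Toy stand-ins: the "code" becomes correct on the third change.
state = {"changes": 0}


def fake_change(feedback: str) -> None:
    state["changes"] += 1


def fake_check() -> Observation:
    ok = state["changes"] >= 3
    return Observation(ok, "all checks passed" if ok else "test failed")


iterations, history = run_closed_loop(fake_change, fake_check)
print(iterations, [o.passed for o in history])
```

The iteration cap matters: a loop that cannot observe a pass should stop and report, not run forever.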

My Question to You

This is still evolving. I’m refining the process daily, and I’m convinced this is how development will work from now on:

agents running closed feedback loops with humans validating outcomes at the end.

So I’m curious:

- What tooling are you using?

- How are you creating feedback loops?

- Are you still inside the loop — or already outside watching it run?

Because once you close the loop…

you don’t want to go back.

by Joche Ojeda | Oct 8, 2025 | Oqtane, Tenants

OK — it’s time for today’s Oqtane blog post!

Yesterday, I wrote about changing the runtime mode in Oqtane and how that allows you to switch between Blazor Server and Blazor WebAssembly functionality.

Today, I decided to explore how multi-tenancy works, specifically how Oqtane manages multiple sites within the same installation.

Originally, I wanted to cover the entire administrative panel and all of its modules in one post, but that would’ve been too big.

So, I’m breaking it down into smaller topics. This post focuses only on site functionality and how multi-tenancy works from the administrative side: basically, how to set up tenants in Oqtane.

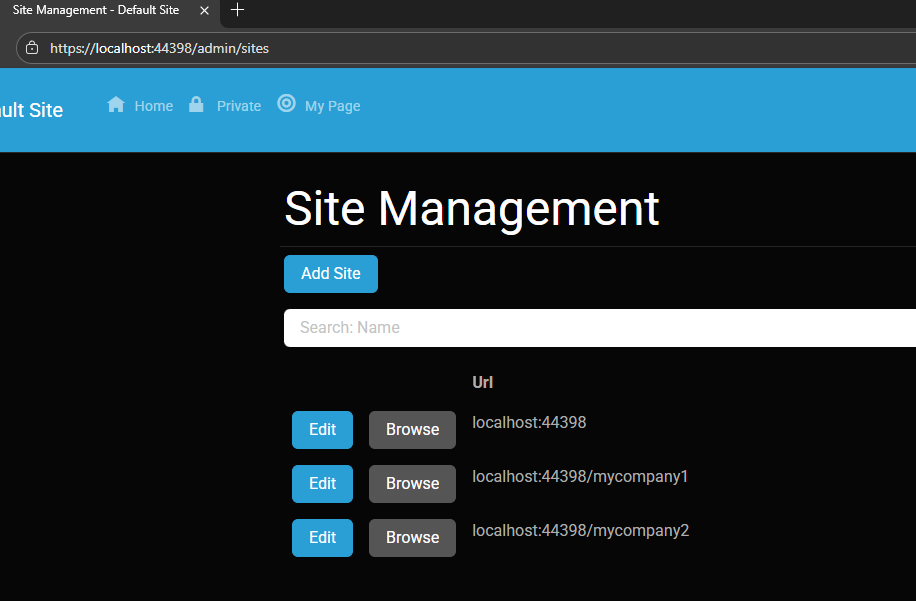

Setting Up a Multi-Tenant Oqtane Installation

To make these experiments easy to replicate, I decided to use SQLite as my database.

I created a new .NET Oqtane application using the official templates and added it to a GitHub repository.

Here’s what I did:

- Set up the host configuration directly in appsettings.json.

- Ran the app, went to the admin panel, and created two additional sites.

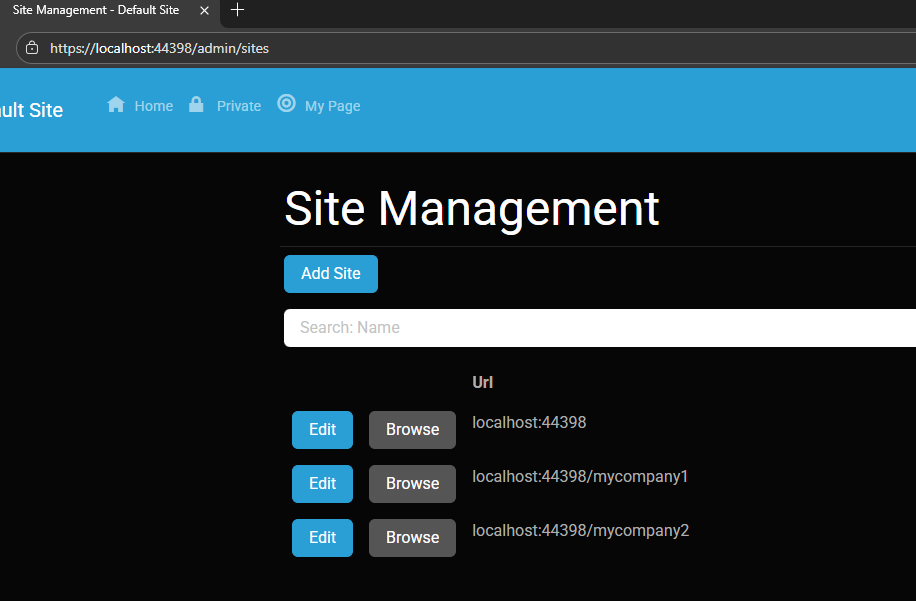

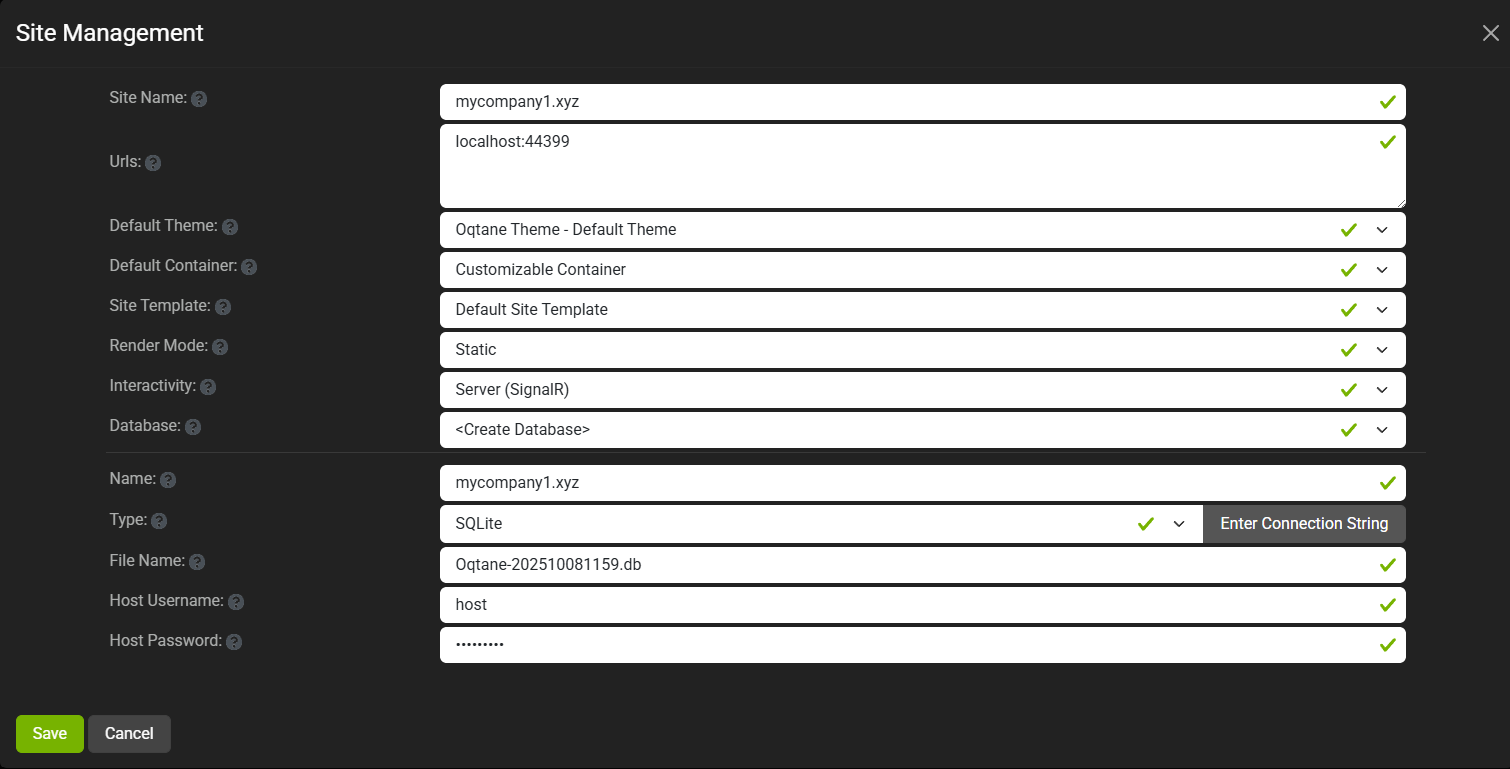

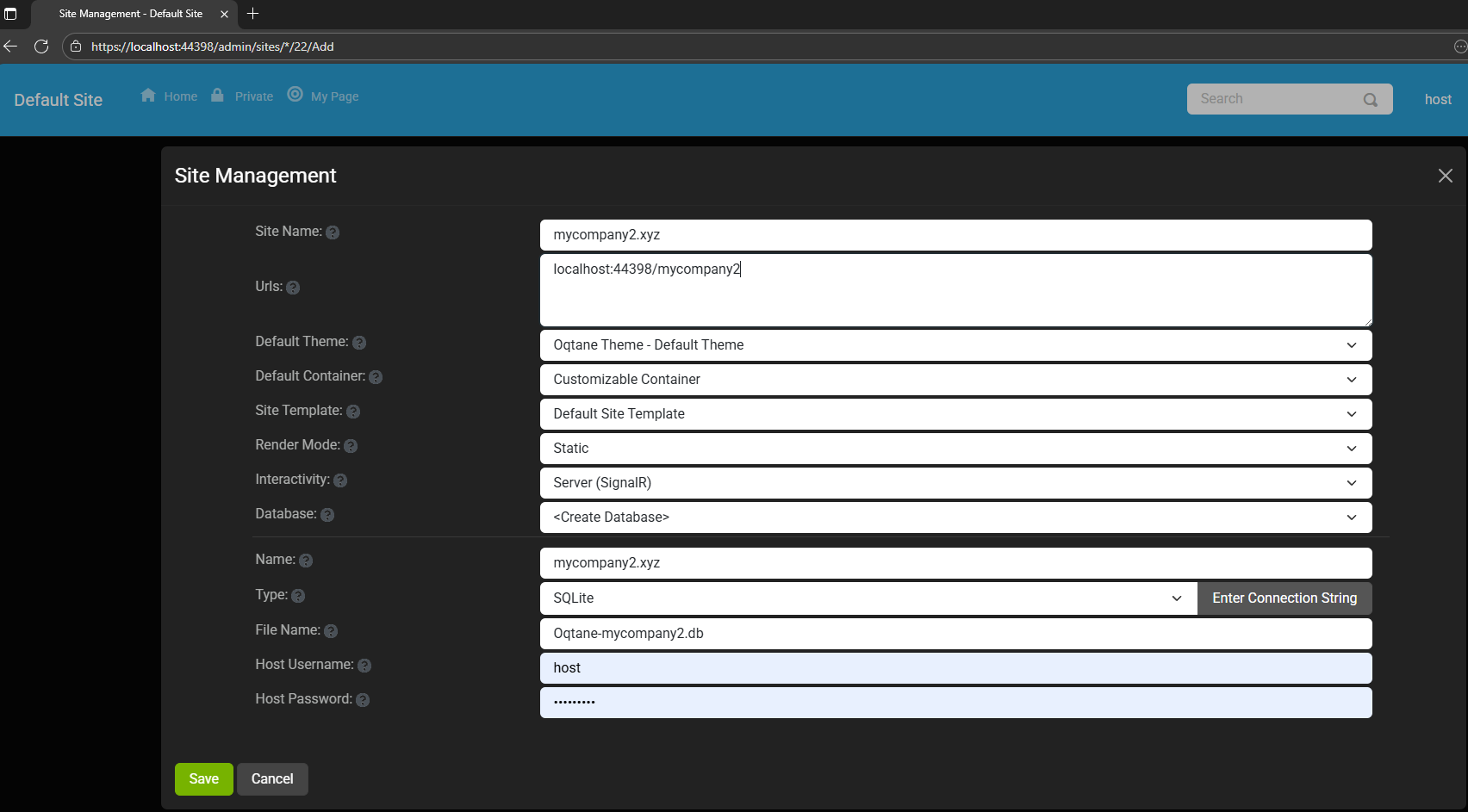

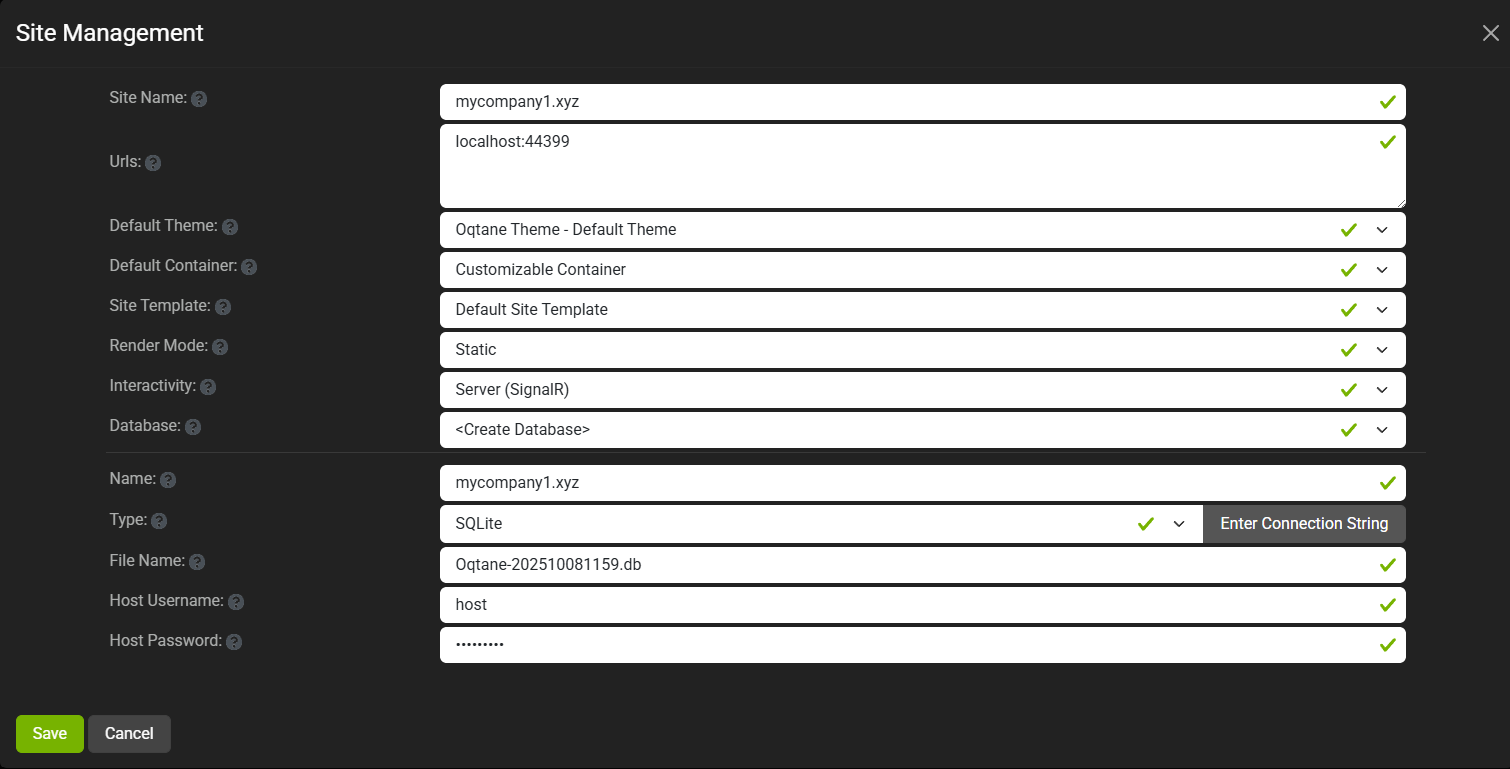

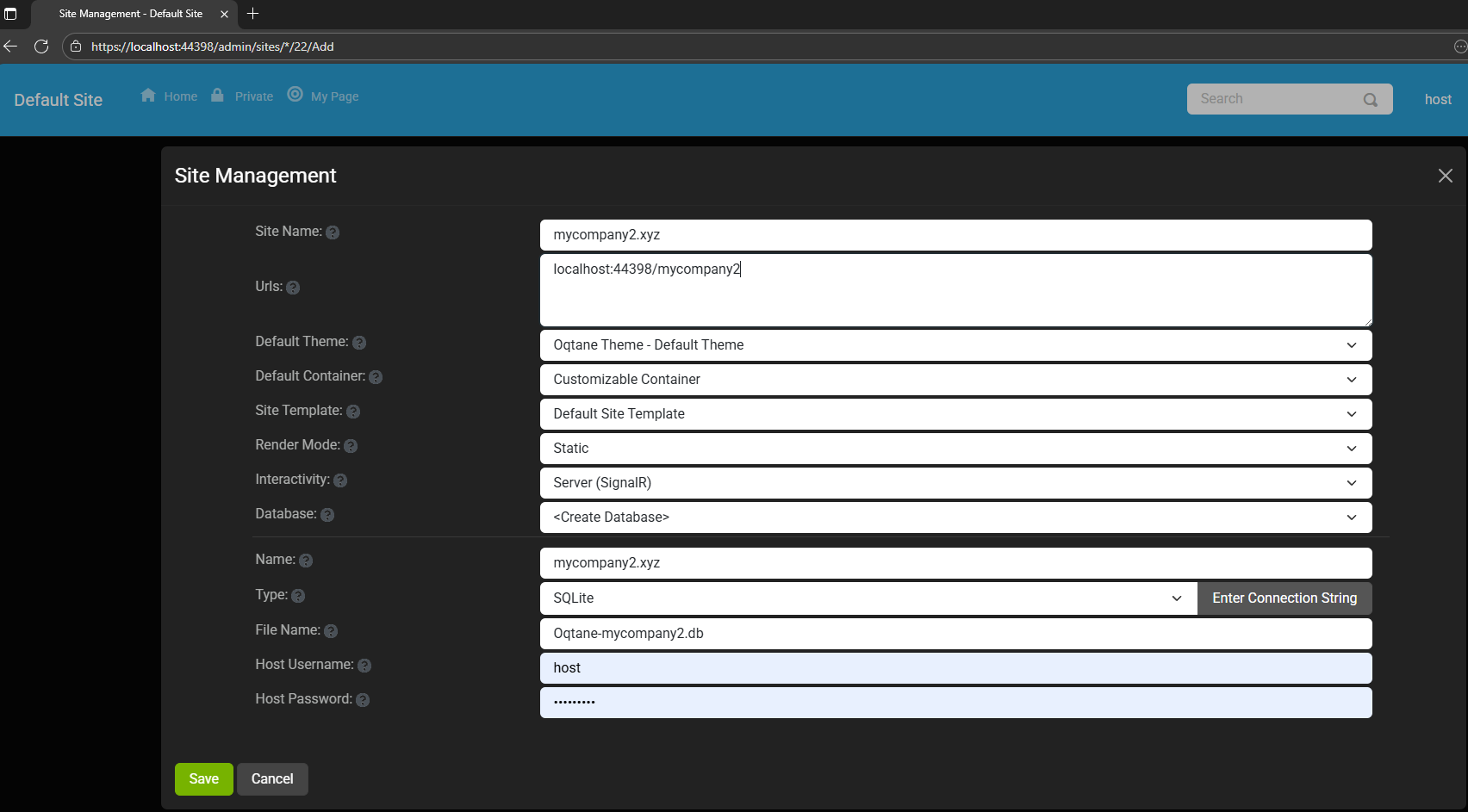

You can see the screenshots below showing the settings for each site.

At first, it was a bit confusing. I thought I could simply use different ports for each site (like 8081, 8082, etc.), but that’s not how Oqtane works. Everything runs in the same process, so all tenants share the same port.

Instead of changing ports, you configure different URL paths or folders. For example:

- http://localhost:8080/ → the main host

- http://localhost:8080/mycompany1 → first tenant

- http://localhost:8080/mycompany2 → second tenant
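
To make the path-based idea concrete, here is a small Python sketch that maps the first URL path segment to a site. It is not Oqtane's actual alias resolution, only an illustration of the concept; `resolve_site` and the alias table are hypothetical.

```python
from urllib.parse import urlsplit


def resolve_site(url, aliases, default="the main host"):
    """Pick the site whose alias matches the first URL path segment."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    first = segments[0].lower() if segments else ""
    return aliases.get(first, default)


# Hypothetical alias table mirroring the three URLs above.
aliases = {"mycompany1": "first tenant", "mycompany2": "second tenant"}

print(resolve_site("http://localhost:8080/", aliases))
print(resolve_site("http://localhost:8080/mycompany1", aliases))
print(resolve_site("http://localhost:8080/mycompany2/about", aliases))
```

All three URLs share one host and port; only the path decides which site answers.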

Site Management

Site MyCompany1

Site MyCompany2

Each tenant can:

- Use a separate database or share the same one as the host

- Have its own theme

- Maintain independent site settings

In the short GIF animation I attached to the repository, you can see how each site has its own unique visual theme. It’s really neat to watch.

When you add a new site, its connection string is also stored automatically in the application settings. So, if you download the repository and run it locally, you’ll be able to access all the sites and see how the URLs and configurations work.

Here is the repository: egarim/OqtaneMultiTenant, an example of how to use multi-tenancy in Oqtane.

Why I’m Doing These Posts

These blog entries are like my personal research notes — documenting what I discover while working with Oqtane.

I’m keeping each experiment small and reproducible so I can:

- Share them with others easily

- Download them later and reproduce the same setup, including data and configuration

What’s Next

In the next post, I’ll cover virtual hosting: how to use domain names that forward to specific URLs or tenants. I’ve already done some research on that, but I don’t want to overload this post with too many topics.

For now, I’ll just attach the screenshots showing the different site configurations and URLs, along with a link to the GitHub repository so you can try it yourself.

If you have any questions, feel free to reach out! I’ll keep documenting everything as I go.

One of the great things about Oqtane is that it’s open source. You can always dive into the code, or if you’re stuck, open a GitHub issue. Shaun Walker and the community are incredibly helpful, so don’t hesitate to ask.

Thanks again to the Oqtane team for building such an amazing framework.

by Joche Ojeda | Oct 5, 2025 | Oqtane, ORM

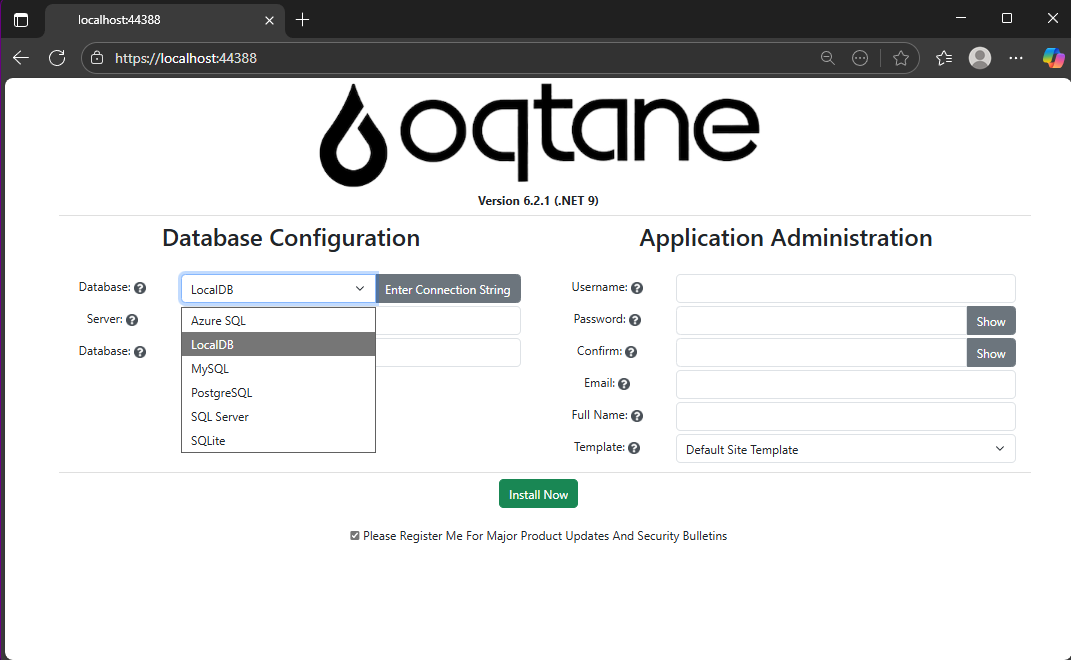

In this article, I’ll show you what to do after you’ve obtained and opened an Oqtane solution. Specifically, we’ll go through two different ways to set up your database for the first time.

- Using the setup wizard — this option appears automatically the first time you run the application.

- Configuring it manually, by directly editing the appsettings.json file to skip the wizard.

Both methods achieve the same result. The only difference is that, if you configure the database manually, you won’t see the setup wizard during startup.

Step 1: Running the Application for the First Time

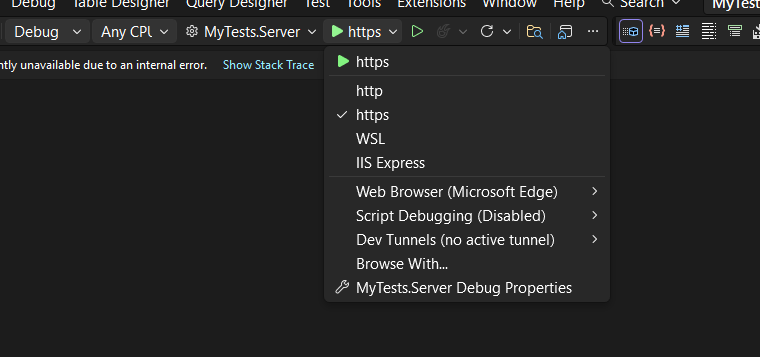

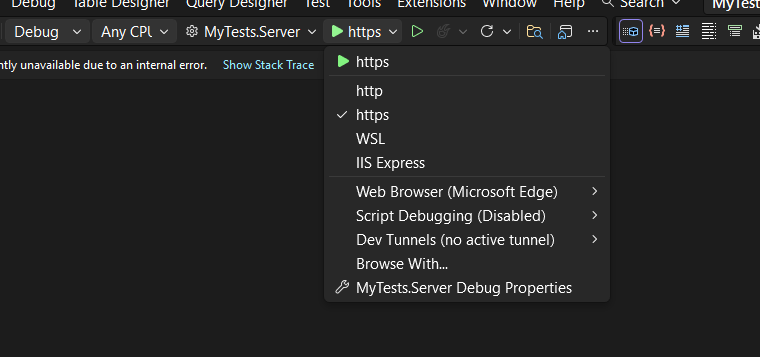

Once your solution is open in Visual Studio, set the Server project as the startup project. Then run it just as you would with any ASP.NET Core application.

You’ll notice several run options — I recommend using the HTTPS version instead of IIS Express (I stopped using IIS Express because it doesn’t work well on ARM-based computers).

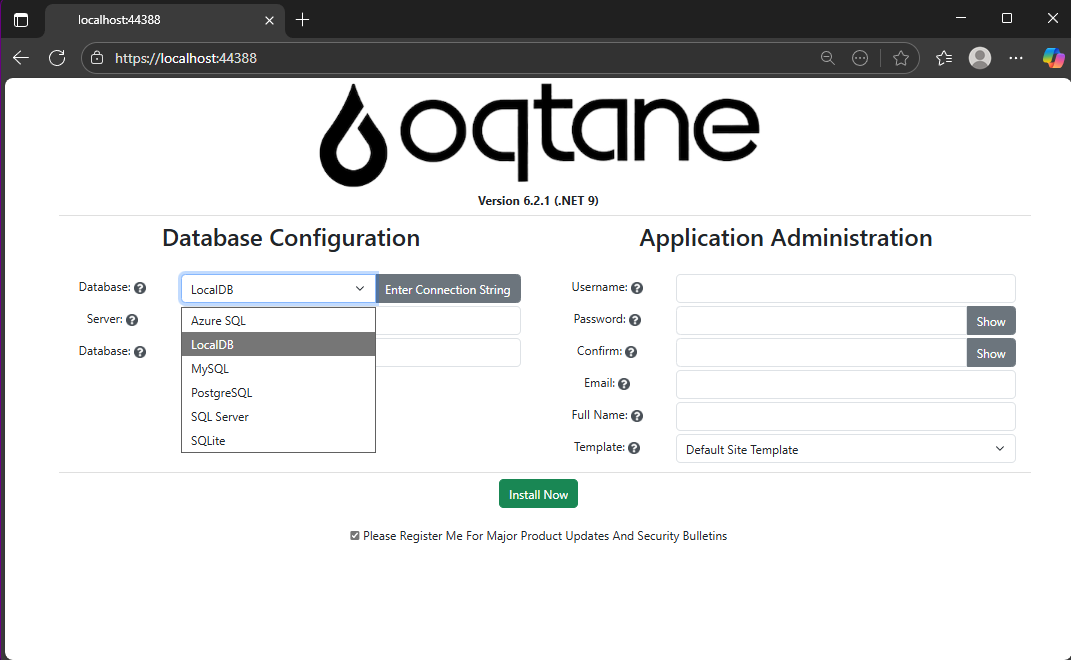

When you run the application for the first time and your settings file is still empty, you’ll see the Database Setup Wizard. As shown in the image, the wizard allows you to select a database provider and configure it through a form.

There’s also an option to paste your connection string directly. Make sure it’s a valid Entity Framework Core connection string.

After that, fill in the admin user’s details — username, email, and password — and you’re done. Once this process completes, you’ll have a working Oqtane installation.

Step 2: Setting Up the Database Manually

If you prefer to skip the wizard, you can configure the database manually. To do this, open the appsettings.json file and add the following parameters:

    {
      "DefaultDBType": "Oqtane.Database.Sqlite.SqliteDatabase, Oqtane.Server",
      "ConnectionStrings": {
        "DefaultConnection": "Data Source=Oqtane-202510052045.db;"
      },
      "Installation": {
        "DefaultAlias": "https://localhost:44388",
        "HostPassword": "MyPasswor25!",
        "HostEmail": "joche@myemail.com",
        "SiteTemplate": "",
        "DefaultTheme": "",
        "DefaultContainer": ""
      }
    }

Here you need to specify:

- The database provider type (e.g., SQLite, SQL Server, PostgreSQL, etc.)

- The connection string

- The admin email and password for the first user — known as the host user (essentially the root or super admin).
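
If you script this setup, a quick pre-flight check can catch an empty value before the first run. The Python sketch below checks the settings named in the list above. Which keys Oqtane itself treats as mandatory is an assumption taken from that list, and `missing_settings` is a hypothetical helper, not part of Oqtane.

```python
import json

# Settings the list above says you need to specify (assumed mandatory).
REQUIRED = [
    ("DefaultDBType",),
    ("ConnectionStrings", "DefaultConnection"),
    ("Installation", "HostPassword"),
    ("Installation", "HostEmail"),
]


def missing_settings(config):
    """Return dotted paths of required settings that are absent or empty."""
    missing = []
    for path in REQUIRED:
        node = config
        for key in path:
            node = node.get(key) if isinstance(node, dict) else None
        if not node:
            missing.append(".".join(path))
    return missing


sample = json.loads("""
{
  "DefaultDBType": "Oqtane.Database.Sqlite.SqliteDatabase, Oqtane.Server",
  "ConnectionStrings": { "DefaultConnection": "Data Source=Oqtane-202510052045.db;" },
  "Installation": { "HostPassword": "", "HostEmail": "joche@myemail.com" }
}
""")
print(missing_settings(sample))
```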

This is the method I usually use now since I’ve set up Oqtane so many times recently that I’ve grown tired of the wizard. However, if you’re new to Oqtane, the wizard is a great way to get started.

Wrapping Up

That’s it for this setup guide! By now, you should have a running Oqtane installation configured either through the setup wizard or manually via the configuration file. Both methods give you a solid foundation to start exploring what Oqtane can do.

In the next article, we’ll dive into the Oqtane backend, exploring how the framework handles modules, data, and the underlying architecture that makes it flexible and powerful. Stay tuned — things are about to get interesting!

by Joche Ojeda | Jun 26, 2025 | EfCore

What is the N+1 Problem?

Imagine you’re running a blog website and want to display a list of all blogs along with how many posts each one has. The N+1 problem is a common database performance issue that happens when your application makes way too many database trips to get this simple information.

Our Test Database Setup

Our test suite creates a realistic blog scenario with:

- 3 different blogs

- Multiple posts for each blog

- Comments on posts

- Tags associated with blogs

This mirrors real-world applications where data is interconnected and needs to be loaded efficiently.

Test Case 1: The Classic N+1 Problem (Lazy Loading)

What it does: This test demonstrates how “lazy loading” can accidentally create the N+1 problem. Lazy loading sounds helpful – it automatically fetches related data when you need it. But this convenience comes with a hidden cost.

The Code:

    [Test]
    public void Test_N_Plus_One_Problem_With_Lazy_Loading()
    {
        var blogs = _context.Blogs.ToList(); // Query 1: Load blogs
        foreach (var blog in blogs)
        {
            var postCount = blog.Posts.Count; // Each access triggers a query!
            TestLogger.WriteLine($"Blog: {blog.Title} - Posts: {postCount}");
        }
    }

The SQL Queries Generated:

    -- Query 1: Load all blogs
    SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title"
    FROM "Blogs" AS "b"

    -- Query 2: Load posts for Blog 1 (triggered by lazy loading)
    SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
    FROM "Posts" AS "p"
    WHERE "p"."BlogId" = 1

    -- Query 3: Load posts for Blog 2 (triggered by lazy loading)
    SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
    FROM "Posts" AS "p"
    WHERE "p"."BlogId" = 2

    -- Query 4: Load posts for Blog 3 (triggered by lazy loading)
    SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
    FROM "Posts" AS "p"
    WHERE "p"."BlogId" = 3

The Problem: 4 total queries (1 + 3) – Each time you access blog.Posts.Count, lazy loading triggers a separate database trip.
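
The same 1 + 3 count can be reproduced outside EF Core. The sketch below uses Python's built-in sqlite3 module and its statement trace callback to count the SELECTs an N+1 loop issues. The table contents are made up for the example, and this mimics the access pattern, not lazy loading itself.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Blogs (Id INTEGER PRIMARY KEY, Title TEXT);
    CREATE TABLE Posts (Id INTEGER PRIMARY KEY, BlogId INTEGER, Title TEXT);
    INSERT INTO Blogs VALUES (1, 'Tech'), (2, 'Travel'), (3, 'Food');
    INSERT INTO Posts VALUES (1, 1, 'a'), (2, 1, 'b'), (3, 2, 'c');
""")

# Record every SQL statement the connection executes from here on.
statements = []
conn.set_trace_callback(statements.append)

blogs = conn.execute("SELECT Id, Title FROM Blogs ORDER BY Id").fetchall()  # query 1
post_counts = {}
for blog_id, title in blogs:
    # One extra round trip per blog: this is the "+N" part.
    posts = conn.execute("SELECT Id FROM Posts WHERE BlogId = ?", (blog_id,)).fetchall()
    post_counts[title] = len(posts)

conn.set_trace_callback(None)
select_count = sum(1 for s in statements if s.lstrip().upper().startswith("SELECT"))
print(select_count, post_counts)
```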

Test Case 2: Alternative N+1 Demonstration

What it does: This test manually recreates the N+1 pattern to show exactly what’s happening, even when lazy loading is not enabled (EF Core leaves it off unless you opt in).

The Code:

    [Test]
    public void Test_N_Plus_One_Problem_Alternative_Approach()
    {
        var blogs = _context.Blogs.ToList(); // Query 1
        foreach (var blog in blogs)
        {
            // This explicitly loads posts for THIS blog only (simulates lazy loading)
            var posts = _context.Posts.Where(p => p.BlogId == blog.Id).ToList();
            TestLogger.WriteLine($"Loaded {posts.Count} posts for blog {blog.Id}");
        }
    }

The Lesson: This explicitly demonstrates the N+1 pattern with manual queries. The result is identical to lazy loading – one query per blog plus the initial blogs query.

Test Case 3: N+1 vs Include() – Side by Side Comparison

What it does: This is the money shot – a direct comparison showing the dramatic difference between the problematic approach and the solution.

The Bad Code (N+1):

    // BAD: N+1 Problem
    var blogsN1 = _context.Blogs.ToList(); // Query 1
    foreach (var blog in blogsN1)
    {
        var posts = _context.Posts.Where(p => p.BlogId == blog.Id).ToList(); // Queries 2,3,4...
    }

The Good Code (Include):

    // GOOD: Include() Solution
    var blogsInclude = _context.Blogs
        .Include(b => b.Posts)
        .ToList(); // Single query with JOIN
    foreach (var blog in blogsInclude)
    {
        // No additional queries needed - data is already loaded!
        var postCount = blog.Posts.Count;
    }

The SQL Queries:

Bad Approach (Multiple Queries):

-- Same 4 separate queries as shown in Test Case 1

Good Approach (Single Query):

    SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title",
           "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
    FROM "Blogs" AS "b"
    LEFT JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId"
    ORDER BY "b"."Id"

Results from our test:

- Bad approach: 4 total queries (1 + 3)

- Good approach: 1 total query

- Performance improvement: 75% fewer database round trips!

Test Case 4: Guaranteed N+1 Problem

What it does: This test removes any doubt by explicitly demonstrating the N+1 pattern with clear step-by-step output.

The Code:

    [Test]
    public void Test_Guaranteed_N_Plus_One_Problem()
    {
        var blogs = _context.Blogs.ToList(); // Query 1
        int queryCount = 1;
        foreach (var blog in blogs)
        {
            queryCount++;
            // This explicitly demonstrates the N+1 pattern
            var posts = _context.Posts.Where(p => p.BlogId == blog.Id).ToList();
            TestLogger.WriteLine($"Loading posts for blog '{blog.Title}' (Query #{queryCount})");
        }
    }

Why it’s useful: This ensures we can always see the problem clearly by manually executing the problematic pattern, making it impossible to miss.

Test Case 5: Eager Loading with Include()

What it does: Shows the correct way to load related data upfront using Include().

The Code:

    [Test]
    public void Test_Eager_Loading_With_Include()
    {
        var blogsWithPosts = _context.Blogs
            .Include(b => b.Posts)
            .ToList();
        foreach (var blog in blogsWithPosts)
        {
            // No additional queries - data already loaded!
            TestLogger.WriteLine($"Blog: {blog.Title} - Posts: {blog.Posts.Count}");
        }
    }

The SQL Query:

    SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title",
           "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
    FROM "Blogs" AS "b"
    LEFT JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId"
    ORDER BY "b"."Id"

The Benefit: One database trip loads everything. When you access blog.Posts.Count, the data is already there.
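
To see the single round trip at the SQL level, here is a Python sqlite3 sketch that runs one LEFT JOIN and then counts posts per blog in memory. It illustrates the shape of the query, not what EF Core does internally, and the sample rows are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Blogs (Id INTEGER PRIMARY KEY, Title TEXT);
    CREATE TABLE Posts (Id INTEGER PRIMARY KEY, BlogId INTEGER, Title TEXT);
    INSERT INTO Blogs VALUES (1, 'Tech'), (2, 'Travel'), (3, 'Food');
    INSERT INTO Posts VALUES (1, 1, 'a'), (2, 1, 'b'), (3, 2, 'c');
""")

statements = []
conn.set_trace_callback(statements.append)

# One query returns every blog together with its posts (NULL when it has none).
rows = conn.execute("""
    SELECT b.Title, p.Id
    FROM Blogs AS b
    LEFT JOIN Posts AS p ON b.Id = p.BlogId
    ORDER BY b.Id
""").fetchall()

conn.set_trace_callback(None)

post_counts = {}
for title, post_id in rows:
    post_counts.setdefault(title, 0)
    if post_id is not None:
        post_counts[title] += 1

select_count = sum(1 for s in statements if s.lstrip().upper().startswith("SELECT"))
print(select_count, post_counts)
```

The LEFT JOIN is what keeps the blog with no posts in the result.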

Test Case 6: Multiple Includes with ThenInclude()

What it does: Demonstrates loading deeply nested data – blogs → posts → comments – all in one query.

The Code:

    [Test]
    public void Test_Multiple_Includes_With_ThenInclude()
    {
        var blogsWithPostsAndComments = _context.Blogs
            .Include(b => b.Posts)
                .ThenInclude(p => p.Comments)
            .ToList();
        foreach (var blog in blogsWithPostsAndComments)
        {
            foreach (var post in blog.Posts)
            {
                // All data loaded in one query!
                TestLogger.WriteLine($"Post: {post.Title} - Comments: {post.Comments.Count}");
            }
        }
    }

The SQL Query:

    SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title",
           "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title",
           "c"."Id", "c"."Author", "c"."Content", "c"."CreatedDate", "c"."PostId"
    FROM "Blogs" AS "b"
    LEFT JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId"
    LEFT JOIN "Comments" AS "c" ON "p"."Id" = "c"."PostId"
    ORDER BY "b"."Id", "p"."Id"

The Challenge: Loading three levels of data in one optimized query instead of potentially hundreds of separate queries.

Test Case 7: Projection with Select()

What it does: Shows how to load only the specific data you actually need instead of entire objects.

The Code:

    [Test]
    public void Test_Projection_With_Select()
    {
        var blogData = _context.Blogs
            .Select(b => new
            {
                BlogTitle = b.Title,
                PostCount = b.Posts.Count(),
                RecentPosts = b.Posts
                    .OrderByDescending(p => p.PublishedDate)
                    .Take(2)
                    .Select(p => new { p.Title, p.PublishedDate })
            })
            .ToList();
    }

The SQL Query (from our test output):

    SELECT "b"."Title", (
        SELECT COUNT(*)
        FROM "Posts" AS "p"
        WHERE "b"."Id" = "p"."BlogId"), "b"."Id", "t0"."Title", "t0"."PublishedDate", "t0"."Id"
    FROM "Blogs" AS "b"
    LEFT JOIN (
        SELECT "t"."Title", "t"."PublishedDate", "t"."Id", "t"."BlogId"
        FROM (
            SELECT "p0"."Title", "p0"."PublishedDate", "p0"."Id", "p0"."BlogId",
                ROW_NUMBER() OVER(PARTITION BY "p0"."BlogId" ORDER BY "p0"."PublishedDate" DESC) AS "row"
            FROM "Posts" AS "p0"
        ) AS "t"
        WHERE "t"."row" <= 2
    ) AS "t0" ON "b"."Id" = "t0"."BlogId"
    ORDER BY "b"."Id", "t0"."BlogId", "t0"."PublishedDate" DESC

Why it matters: This query only loads the specific fields needed, uses window functions for efficiency, and calculates counts in the database rather than loading full objects.
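
The window-function part is easier to follow in isolation. This Python sqlite3 sketch keeps only the two most recent posts per blog using ROW_NUMBER(). It assumes the bundled SQLite is version 3.25 or newer (window function support), and the sample rows are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Posts (Id INTEGER PRIMARY KEY, BlogId INTEGER, Title TEXT, PublishedDate TEXT);
    INSERT INTO Posts VALUES
        (1, 1, 'old',    '2025-01-01'),
        (2, 1, 'newer',  '2025-02-01'),
        (3, 1, 'newest', '2025-03-01'),
        (4, 2, 'only',   '2025-01-15');
""")

# Number the posts inside each blog, newest first, then keep rows 1 and 2.
recent = conn.execute("""
    SELECT BlogId, Title
    FROM (
        SELECT BlogId, Title, PublishedDate,
               ROW_NUMBER() OVER (PARTITION BY BlogId ORDER BY PublishedDate DESC) AS rn
        FROM Posts
    )
    WHERE rn <= 2
    ORDER BY BlogId, PublishedDate DESC
""").fetchall()
print(recent)
```

The oldest post of blog 1 never leaves the database, which is the whole point of doing the "top 2" in SQL.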

Test Case 8: Split Query Strategy

What it does: Demonstrates an alternative approach where one large JOIN is split into multiple optimized queries.

The Code:

    [Test]
    public void Test_Split_Query()
    {
        var blogs = _context.Blogs
            .AsSplitQuery()
            .Include(b => b.Posts)
            .Include(b => b.Tags)
            .ToList();
    }

The SQL Queries (from our test output):

    -- Query 1: Load blogs
    SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title"
    FROM "Blogs" AS "b"
    ORDER BY "b"."Id"

    -- Query 2: Load posts (automatically generated)
    SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title", "b"."Id"
    FROM "Blogs" AS "b"
    INNER JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId"
    ORDER BY "b"."Id"

    -- Query 3: Load tags (automatically generated)
    SELECT "t"."Id", "t"."Name", "b"."Id"
    FROM "Blogs" AS "b"
    INNER JOIN "BlogTag" AS "bt" ON "b"."Id" = "bt"."BlogsId"
    INNER JOIN "Tags" AS "t" ON "bt"."TagsId" = "t"."Id"
    ORDER BY "b"."Id"

When to use it: When JOINing lots of related data creates one massive, slow query. Split queries break this into several smaller, faster queries.
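
One way to see why a single JOIN can get massive: two sibling collections multiply each other's rows. The Python sqlite3 sketch below compares row counts for one blog with 4 posts and 3 tags. The data is invented, and it models only the row counts, not EF Core's materialization.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Blogs (Id INTEGER PRIMARY KEY, Title TEXT);
    CREATE TABLE Posts (Id INTEGER PRIMARY KEY, BlogId INTEGER);
    CREATE TABLE BlogTag (BlogsId INTEGER, TagsId INTEGER);
    INSERT INTO Blogs VALUES (1, 'Tech');
    INSERT INTO Posts VALUES (1, 1), (2, 1), (3, 1), (4, 1);
    INSERT INTO BlogTag VALUES (1, 10), (1, 20), (1, 30);
""")

# Single query: every post row is repeated once per tag (4 x 3 rows).
single_join_rows = conn.execute("""
    SELECT b.Id, p.Id, bt.TagsId
    FROM Blogs AS b
    LEFT JOIN Posts AS p ON b.Id = p.BlogId
    LEFT JOIN BlogTag AS bt ON b.Id = bt.BlogsId
""").fetchall()

# Split queries: one result set per collection (1 + 4 + 3 rows).
blog_rows = conn.execute("SELECT Id FROM Blogs").fetchall()
post_rows = conn.execute(
    "SELECT p.Id FROM Blogs AS b INNER JOIN Posts AS p ON b.Id = p.BlogId"
).fetchall()
tag_rows = conn.execute(
    "SELECT bt.TagsId FROM Blogs AS b INNER JOIN BlogTag AS bt ON b.Id = bt.BlogsId"
).fetchall()

split_total = len(blog_rows) + len(post_rows) + len(tag_rows)
print(len(single_join_rows), split_total)
```

With small collections the difference is minor, but the single-query row count grows as a product while the split total grows as a sum.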

Test Case 9: Filtered Include()

What it does: Shows how to load only specific related data – in this case, only recent posts from the last 15 days.

The Code:

    [Test]
    public void Test_Filtered_Include()
    {
        var cutoffDate = DateTime.Now.AddDays(-15);
        var blogsWithRecentPosts = _context.Blogs
            .Include(b => b.Posts.Where(p => p.PublishedDate > cutoffDate))
            .ToList();
    }

The SQL Query:

    SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title",
           "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
    FROM "Blogs" AS "b"
    LEFT JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId" AND "p"."PublishedDate" > @cutoffDate
    ORDER BY "b"."Id"

The Efficiency: Only loads posts that meet the criteria, reducing data transfer and memory usage.
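
A detail worth noticing in that SQL is that the date filter sits in the JOIN condition, not in a WHERE clause. The Python sqlite3 sketch below shows the difference with invented data: filtering in ON keeps blogs that have no recent posts, while filtering in WHERE drops them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Blogs (Id INTEGER PRIMARY KEY, Title TEXT);
    CREATE TABLE Posts (Id INTEGER PRIMARY KEY, BlogId INTEGER, PublishedDate TEXT);
    INSERT INTO Blogs VALUES (1, 'Tech'), (2, 'Travel');
    INSERT INTO Posts VALUES (1, 1, '2025-06-20'), (2, 1, '2025-01-05'), (3, 2, '2025-01-10');
""")
cutoff = "2025-06-01"  # ISO dates compare correctly as text

# Filter in the JOIN condition: every blog survives, old posts become NULL.
on_filter = conn.execute("""
    SELECT b.Title, p.Id
    FROM Blogs AS b
    LEFT JOIN Posts AS p ON b.Id = p.BlogId AND p.PublishedDate > ?
    ORDER BY b.Id
""", (cutoff,)).fetchall()

# Filter in WHERE: blogs without a recent post disappear from the result.
where_filter = conn.execute("""
    SELECT b.Title, p.Id
    FROM Blogs AS b
    LEFT JOIN Posts AS p ON b.Id = p.BlogId
    WHERE p.PublishedDate > ?
    ORDER BY b.Id
""", (cutoff,)).fetchall()

print(on_filter)
print(where_filter)
```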

Test Case 10: Explicit Loading

What it does: Demonstrates manually controlling when related data gets loaded.

The Code:

    [Test]
    public void Test_Explicit_Loading()
    {
        var blogs = _context.Blogs.ToList(); // Load blogs only
        // Now explicitly load posts for all blogs
        foreach (var blog in blogs)
        {
            _context.Entry(blog)
                .Collection(b => b.Posts)
                .Load();
        }
    }

The SQL Queries:

    -- Query 1: Load blogs
    SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title"
    FROM "Blogs" AS "b"

    -- Query 2: Explicitly load posts for blog 1
    SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
    FROM "Posts" AS "p"
    WHERE "p"."BlogId" = 1

    -- Query 3: Explicitly load posts for blog 2
    SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
    FROM "Posts" AS "p"
    WHERE "p"."BlogId" = 2

    -- ... and so on

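When useful: When you sometimes need related data and sometimes don’t. You control exactly when the additional database trip happens. Note that calling Load() inside a loop, as this test does, still issues one query per blog, so it avoids N+1 only when you load the collection for a few selected entities.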

Test Case 11: Batch Loading Pattern

What it does: Shows a clever technique to avoid N+1 by loading all related data in one query, then organizing it in memory.

The Code:

    [Test]
    public void Test_Batch_Loading_Pattern()
    {
        var blogs = _context.Blogs.ToList(); // Query 1
        var blogIds = blogs.Select(b => b.Id).ToList();
        // Single query to get all posts for all blogs
        var posts = _context.Posts
            .Where(p => blogIds.Contains(p.BlogId))
            .ToList(); // Query 2
        // Group posts by blog in memory
        var postsByBlog = posts.GroupBy(p => p.BlogId).ToDictionary(g => g.Key, g => g.ToList());
    }

The SQL Queries:

    -- Query 1: Load all blogs
    SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title"
    FROM "Blogs" AS "b"

    -- Query 2: Load ALL posts for ALL blogs in one query
    SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
    FROM "Posts" AS "p"
    WHERE "p"."BlogId" IN (1, 2, 3)

The Result: Just 2 queries total, regardless of how many blogs you have. Data organization happens in memory.
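
The same two-query pattern works with any data access layer. Here is a minimal Python sqlite3 sketch with invented data: one query for the blogs, one IN query for all their posts, then grouping in memory.

```python
import sqlite3
from collections import defaultdict

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Blogs (Id INTEGER PRIMARY KEY, Title TEXT);
    CREATE TABLE Posts (Id INTEGER PRIMARY KEY, BlogId INTEGER);
    INSERT INTO Blogs VALUES (1, 'Tech'), (2, 'Travel'), (3, 'Food');
    INSERT INTO Posts VALUES (1, 1), (2, 1), (3, 2);
""")

blogs = conn.execute("SELECT Id, Title FROM Blogs ORDER BY Id").fetchall()  # query 1
blog_ids = [blog_id for blog_id, _ in blogs]

# One placeholder per id keeps the IN list parameterized.
placeholders = ", ".join("?" for _ in blog_ids)
posts = conn.execute(
    f"SELECT Id, BlogId FROM Posts WHERE BlogId IN ({placeholders}) ORDER BY Id",
    blog_ids,
).fetchall()  # query 2

# Group posts by blog in memory.
posts_by_blog = defaultdict(list)
for post_id, blog_id in posts:
    posts_by_blog[blog_id].append(post_id)

print(dict(posts_by_blog))
```

Blogs without posts simply have no entry in the dictionary, so look them up with a default.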

Test Case 12: Performance Comparison

What it does: Puts all the approaches head-to-head to show their relative performance.

The Code:

    [Test]
    public void Test_Performance_Comparison()
    {
        // N+1 Problem (Multiple Queries)
        var blogs1 = _context.Blogs.ToList();
        foreach (var blog in blogs1)
        {
            var count = blog.Posts.Count(); // Triggers separate query
        }

        // Eager Loading (Single Query)
        var blogs2 = _context.Blogs
            .Include(b => b.Posts)
            .ToList();

        // Projection (Minimal Data)
        var blogSummaries = _context.Blogs
            .Select(b => new { b.Title, PostCount = b.Posts.Count() })
            .ToList();
    }

The SQL Queries Generated:

N+1 Problem: 4 separate queries (as shown in previous examples)

Eager Loading: 1 JOIN query (as shown in Test Case 5)

Projection: 1 optimized query with subquery:

    SELECT "b"."Title", (
        SELECT COUNT(*)
        FROM "Posts" AS "p"
        WHERE "b"."Id" = "p"."BlogId") AS "PostCount"
    FROM "Blogs" AS "b"
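
You can run that projection query directly to confirm that only titles and counts come back. A small Python sqlite3 sketch with invented data:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Blogs (Id INTEGER PRIMARY KEY, Title TEXT);
    CREATE TABLE Posts (Id INTEGER PRIMARY KEY, BlogId INTEGER, Content TEXT);
    INSERT INTO Blogs VALUES (1, 'Tech'), (2, 'Travel'), (3, 'Food');
    INSERT INTO Posts VALUES (1, 1, 'long text'), (2, 1, 'long text'), (3, 2, 'long text');
""")

# The count is computed inside the database; no post rows are transferred.
summaries = conn.execute("""
    SELECT b.Title,
           (SELECT COUNT(*) FROM Posts AS p WHERE b.Id = p.BlogId) AS PostCount
    FROM Blogs AS b
    ORDER BY b.Id
""").fetchall()
print(summaries)
```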

Real-World Performance Impact

Let’s scale this up to see why it matters. The estimates below assume roughly 10 ms per database round trip:

Small Application (10 blogs):

- N+1 approach: 11 queries (≈110ms)

- Optimized approach: 1 query (≈10ms)

- Time saved: 100ms

Medium Application (100 blogs):

- N+1 approach: 101 queries (≈1,010ms)

- Optimized approach: 1 query (≈10ms)

- Time saved: 1 second

Large Application (1000 blogs):

- N+1 approach: 1001 queries (≈10,010ms)

- Optimized approach: 1 query (≈10ms)

- Time saved: 10 seconds
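
The arithmetic behind these estimates is just (N + 1) queries times the cost of one round trip. A tiny Python sketch, where the 10 ms per query is the same illustrative assumption and `n_plus_one_estimate` is a hypothetical helper:

```python
def n_plus_one_estimate(parent_rows, ms_per_query=10):
    """Return (query_count, total_ms) for an N+1 access pattern."""
    queries = parent_rows + 1  # 1 query for the parents + 1 per parent
    return queries, queries * ms_per_query


for n in (10, 100, 1000):
    queries, total_ms = n_plus_one_estimate(n)
    saved_ms = total_ms - 10  # versus a single 10 ms query
    print(f"{n} blogs: {queries} queries, {total_ms} ms, saves {saved_ms} ms")
```

Real numbers depend on network latency, result size, and the database itself, so treat this as a way to reason about the trend, not a benchmark.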

Key Takeaways

- The N+1 problem grows linearly with your data: every additional parent row adds one more database round trip

- Lazy loading is convenient but dangerous – it can hide performance problems

- Include() is your friend for loading related data efficiently

- Projection is powerful when you only need specific fields

- Different problems need different solutions – there’s no one-size-fits-all approach

- SQL query inspection is crucial – always check what queries your ORM generates

The Bottom Line

This test suite shows that small changes in how you write database queries can transform a slow, database-heavy operation into a fast, efficient one. The difference between a frustrated user waiting 10 seconds for a page to load and a happy user getting instant results often comes down to understanding and avoiding the N+1 problem.

The beauty of these tests is that they use real database queries with actual SQL output, so you can see exactly what’s happening under the hood. Understanding these patterns will make you a more effective developer and help you build applications that stay fast as they grow.

You can find the source for this article here.