by Joche Ojeda | Jun 26, 2025 | EfCore

What is the N+1 Problem?

Imagine you’re running a blog website and want to display a list of all blogs along with how many posts each one has. The N+1 problem is a common database performance issue that happens when your application makes way too many database trips to get this simple information.

Our Test Database Setup

Our test suite creates a realistic blog scenario with:

- 3 different blogs

- Multiple posts for each blog

- Comments on posts

- Tags associated with blogs

This mirrors real-world applications where data is interconnected and needs to be loaded efficiently.

Test Case 1: The Classic N+1 Problem (Lazy Loading)

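What it does: This test demonstrates how “lazy loading” can accidentally create the N+1 problem. Lazy loading sounds helpful: it automatically fetches related data the first time you access it. But this convenience comes with a hidden cost. Keep in mind that lazy loading is opt-in in EF Core (typically through the Microsoft.EntityFrameworkCore.Proxies package and virtual navigation properties), so this test assumes it has been enabled.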

The Code:

[Test]

public void Test_N_Plus_One_Problem_With_Lazy_Loading()

{

var blogs = _context.Blogs.ToList(); // Query 1: Load blogs

foreach (var blog in blogs)

{

var postCount = blog.Posts.Count; // Each access triggers a query!

TestLogger.WriteLine($"Blog: {blog.Title} - Posts: {postCount}");

}

}

The SQL Queries Generated:

-- Query 1: Load all blogs

SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title"

FROM "Blogs" AS "b"

-- Query 2: Load posts for Blog 1 (triggered by lazy loading)

SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"

FROM "Posts" AS "p"

WHERE "p"."BlogId" = 1

-- Query 3: Load posts for Blog 2 (triggered by lazy loading)

SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"

FROM "Posts" AS "p"

WHERE "p"."BlogId" = 2

-- Query 4: Load posts for Blog 3 (triggered by lazy loading)

SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"

FROM "Posts" AS "p"

WHERE "p"."BlogId" = 3

The Problem: 4 total queries (1 + 3) – Each time you access blog.Posts.Count, lazy loading triggers a separate database trip.

Test Case 2: Alternative N+1 Demonstration

What it does: This test manually recreates the N+1 pattern to show exactly what’s happening, even when lazy loading is not enabled.

The Code:

[Test]

public void Test_N_Plus_One_Problem_Alternative_Approach()

{

var blogs = _context.Blogs.ToList(); // Query 1

foreach (var blog in blogs)

{

// This explicitly loads posts for THIS blog only (simulates lazy loading)

var posts = _context.Posts.Where(p => p.BlogId == blog.Id).ToList();

TestLogger.WriteLine($"Loaded {posts.Count} posts for blog {blog.Id}");

}

}

The Lesson: This explicitly demonstrates the N+1 pattern with manual queries. The result is identical to lazy loading – one query per blog plus the initial blogs query.

Test Case 3: N+1 vs Include() – Side by Side Comparison

What it does: This is the money shot – a direct comparison showing the dramatic difference between the problematic approach and the solution.

The Bad Code (N+1):

// BAD: N+1 Problem

var blogsN1 = _context.Blogs.ToList(); // Query 1

foreach (var blog in blogsN1)

{

var posts = _context.Posts.Where(p => p.BlogId == blog.Id).ToList(); // Queries 2,3,4...

}

The Good Code (Include):

// GOOD: Include() Solution

var blogsInclude = _context.Blogs

.Include(b => b.Posts)

.ToList(); // Single query with JOIN

foreach (var blog in blogsInclude)

{

// No additional queries needed - data is already loaded!

var postCount = blog.Posts.Count;

}

The SQL Queries:

Bad Approach (Multiple Queries):

-- Same 4 separate queries as shown in Test Case 1

Good Approach (Single Query):

SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title",

"p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"

FROM "Blogs" AS "b"

LEFT JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId"

ORDER BY "b"."Id"

Results from our test:

- Bad approach: 4 total queries (1 + 3)

- Good approach: 1 total query

- Performance improvement: 75% fewer database round trips!
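The round-trip counts above can be reproduced without a database. Here is a minimal sketch using a hypothetical in-memory `CountingStore` that bumps a counter on every simulated query. It only models the access pattern; it is not EF Core, and every name in it is made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

var store = new CountingStore(blogCount: 3);

// N+1 pattern: one simulated query for the blogs, then one per blog.
foreach (var blogId in store.GetBlogIds())
{
    store.GetPostsForBlog(blogId);
}
int nPlusOne = store.QueryCount;

store.ResetCount();

// Eager pattern: everything comes back in one simulated round trip.
store.GetBlogsWithPosts();
int eager = store.QueryCount;

double reduction = 100.0 * (nPlusOne - eager) / nPlusOne;
Console.WriteLine($"N+1: {nPlusOne}, eager: {eager}, reduction: {reduction}%");
// prints "N+1: 4, eager: 1, reduction: 75%"

// Hypothetical stand-in for a database; counts how many "queries" were issued.
public class CountingStore
{
    private readonly Dictionary<int, List<string>> _postsByBlog;

    public int QueryCount { get; private set; }

    public CountingStore(int blogCount)
    {
        // Each fake blog gets two fake posts.
        _postsByBlog = Enumerable.Range(1, blogCount)
            .ToDictionary(id => id, id => new List<string> { $"post {id}a", $"post {id}b" });
    }

    public void ResetCount() => QueryCount = 0;

    public List<int> GetBlogIds()
    {
        QueryCount++;
        return _postsByBlog.Keys.ToList();
    }

    public List<string> GetPostsForBlog(int blogId)
    {
        QueryCount++;
        return _postsByBlog[blogId];
    }

    public Dictionary<int, List<string>> GetBlogsWithPosts()
    {
        QueryCount++;
        return _postsByBlog;
    }
}
```

Changing `blogCount` shows how the first number tracks the number of blogs while the second stays at 1.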

Test Case 4: Guaranteed N+1 Problem

What it does: This test removes any doubt by explicitly demonstrating the N+1 pattern with clear step-by-step output.

The Code:

[Test]

public void Test_Guaranteed_N_Plus_One_Problem()

{

var blogs = _context.Blogs.ToList(); // Query 1

int queryCount = 1;

foreach (var blog in blogs)

{

queryCount++;

// This explicitly demonstrates the N+1 pattern

var posts = _context.Posts.Where(p => p.BlogId == blog.Id).ToList();

TestLogger.WriteLine($"Loading posts for blog '{blog.Title}' (Query #{queryCount})");

}

}

Why it’s useful: This ensures we can always see the problem clearly by manually executing the problematic pattern, making it impossible to miss.

Test Case 5: Eager Loading with Include()

What it does: Shows the correct way to load related data upfront using Include().

The Code:

[Test]

public void Test_Eager_Loading_With_Include()

{

var blogsWithPosts = _context.Blogs

.Include(b => b.Posts)

.ToList();

foreach (var blog in blogsWithPosts)

{

// No additional queries - data already loaded!

TestLogger.WriteLine($"Blog: {blog.Title} - Posts: {blog.Posts.Count}");

}

}

The SQL Query:

SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title",

"p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"

FROM "Blogs" AS "b"

LEFT JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId"

ORDER BY "b"."Id"

The Benefit: One database trip loads everything. When you access blog.Posts.Count, the data is already there.

Test Case 6: Multiple Includes with ThenInclude()

What it does: Demonstrates loading deeply nested data – blogs → posts → comments – all in one query.

The Code:

[Test]

public void Test_Multiple_Includes_With_ThenInclude()

{

var blogsWithPostsAndComments = _context.Blogs

.Include(b => b.Posts)

.ThenInclude(p => p.Comments)

.ToList();

foreach (var blog in blogsWithPostsAndComments)

{

foreach (var post in blog.Posts)

{

// All data loaded in one query!

TestLogger.WriteLine($"Post: {post.Title} - Comments: {post.Comments.Count}");

}

}

}

The SQL Query:

SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title",

"p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title",

"c"."Id", "c"."Author", "c"."Content", "c"."CreatedDate", "c"."PostId"

FROM "Blogs" AS "b"

LEFT JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId"

LEFT JOIN "Comments" AS "c" ON "p"."Id" = "c"."PostId"

ORDER BY "b"."Id", "p"."Id"

The Challenge: Loading three levels of data in one optimized query instead of potentially hundreds of separate queries.

Test Case 7: Projection with Select()

What it does: Shows how to load only the specific data you actually need instead of entire objects.

The Code:

[Test]

public void Test_Projection_With_Select()

{

var blogData = _context.Blogs

.Select(b => new

{

BlogTitle = b.Title,

PostCount = b.Posts.Count(),

RecentPosts = b.Posts

.OrderByDescending(p => p.PublishedDate)

.Take(2)

.Select(p => new { p.Title, p.PublishedDate })

})

.ToList();

}

The SQL Query (from our test output):

SELECT "b"."Title", (

SELECT COUNT(*)

FROM "Posts" AS "p"

WHERE "b"."Id" = "p"."BlogId"), "b"."Id", "t0"."Title", "t0"."PublishedDate", "t0"."Id"

FROM "Blogs" AS "b"

LEFT JOIN (

SELECT "t"."Title", "t"."PublishedDate", "t"."Id", "t"."BlogId"

FROM (

SELECT "p0"."Title", "p0"."PublishedDate", "p0"."Id", "p0"."BlogId",

ROW_NUMBER() OVER(PARTITION BY "p0"."BlogId" ORDER BY "p0"."PublishedDate" DESC) AS "row"

FROM "Posts" AS "p0"

) AS "t"

WHERE "t"."row" <= 2

) AS "t0" ON "b"."Id" = "t0"."BlogId"

ORDER BY "b"."Id", "t0"."BlogId", "t0"."PublishedDate" DESC

Why it matters: This query only loads the specific fields needed, uses window functions for efficiency, and calculates counts in the database rather than loading full objects.

Test Case 8: Split Query Strategy

What it does: Demonstrates an alternative approach where one large JOIN is split into multiple optimized queries.

The Code:

[Test]

public void Test_Split_Query()

{

var blogs = _context.Blogs

.AsSplitQuery()

.Include(b => b.Posts)

.Include(b => b.Tags)

.ToList();

}

The SQL Queries (from our test output):

-- Query 1: Load blogs

SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title"

FROM "Blogs" AS "b"

ORDER BY "b"."Id"

-- Query 2: Load posts (automatically generated)

SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title", "b"."Id"

FROM "Blogs" AS "b"

INNER JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId"

ORDER BY "b"."Id"

-- Query 3: Load tags (automatically generated)

SELECT "t"."Id", "t"."Name", "b"."Id"

FROM "Blogs" AS "b"

INNER JOIN "BlogTag" AS "bt" ON "b"."Id" = "bt"."BlogsId"

INNER JOIN "Tags" AS "t" ON "bt"."TagsId" = "t"."Id"

ORDER BY "b"."Id"

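When to use it: When a single query JOINs several collections (here Posts and Tags), every post row is repeated for every tag row, and the result set can grow very large. Split queries trade that one large result for several smaller queries, at the cost of extra round trips.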

Test Case 9: Filtered Include()

What it does: Shows how to load only specific related data – in this case, only recent posts from the last 15 days.

The Code:

[Test]

public void Test_Filtered_Include()

{

var cutoffDate = DateTime.Now.AddDays(-15);

var blogsWithRecentPosts = _context.Blogs

.Include(b => b.Posts.Where(p => p.PublishedDate > cutoffDate))

.ToList();

}

The SQL Query:

SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title",

"p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"

FROM "Blogs" AS "b"

LEFT JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId" AND "p"."PublishedDate" > @cutoffDate

ORDER BY "b"."Id"

The Efficiency: Only loads posts that meet the criteria, reducing data transfer and memory usage.

Test Case 10: Explicit Loading

What it does: Demonstrates manually controlling when related data gets loaded.

The Code:

[Test]

public void Test_Explicit_Loading()

{

var blogs = _context.Blogs.ToList(); // Load blogs only

// Now explicitly load posts for all blogs

foreach (var blog in blogs)

{

_context.Entry(blog)

.Collection(b => b.Posts)

.Load();

}

}

The SQL Queries:

-- Query 1: Load blogs

SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title"

FROM "Blogs" AS "b"

-- Query 2: Explicitly load posts for blog 1

SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"

FROM "Posts" AS "p"

WHERE "p"."BlogId" = 1

-- Query 3: Explicitly load posts for blog 2

SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"

FROM "Posts" AS "p"

WHERE "p"."BlogId" = 2

-- ... and so on

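When useful: When you sometimes need related data and sometimes don’t. You control exactly when the additional database trip happens. Note that calling Load() inside a loop, as in this test, still issues one query per blog, so it is best reserved for a single entity or a small, known set.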

Test Case 11: Batch Loading Pattern

What it does: Shows a clever technique to avoid N+1 by loading all related data in one query, then organizing it in memory.

The Code:

[Test]

public void Test_Batch_Loading_Pattern()

{

var blogs = _context.Blogs.ToList(); // Query 1

var blogIds = blogs.Select(b => b.Id).ToList();

// Single query to get all posts for all blogs

var posts = _context.Posts

.Where(p => blogIds.Contains(p.BlogId))

.ToList(); // Query 2

// Group posts by blog in memory

var postsByBlog = posts.GroupBy(p => p.BlogId).ToDictionary(g => g.Key, g => g.ToList());

}

The SQL Queries:

-- Query 1: Load all blogs

SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title"

FROM "Blogs" AS "b"

-- Query 2: Load ALL posts for ALL blogs in one query

SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"

FROM "Posts" AS "p"

WHERE "p"."BlogId" IN (1, 2, 3)

The Result: Just 2 queries total, regardless of how many blogs you have. Data organization happens in memory.
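Once both result sets are in memory, the posts still have to be matched back to their blogs. Here is a minimal sketch of that step using plain LINQ to Objects, with no database involved. The `Blog` and `Post` records are simplified stand-ins for the entities in this article, not the real model. As one option, it uses `ToLookup` rather than `ToDictionary`, because a lookup returns an empty sequence for a blog with no posts instead of throwing on a missing key.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// In-memory stand-ins for the results of Query 1 and Query 2.
var blogs = new List<Blog>
{
    new(1, "Tech Blog"),
    new(2, "Food Blog"),
    new(3, "Empty Blog"), // deliberately has no posts
};
var posts = new List<Post>
{
    new(10, 1, "EF Core basics"),
    new(11, 1, "Avoiding N+1"),
    new(12, 2, "Pasta night"),
};

// Group once; each per-blog access afterwards is an in-memory operation.
ILookup<int, Post> postsByBlog = posts.ToLookup(p => p.BlogId);

foreach (var blog in blogs)
{
    // A missing key yields an empty sequence, so blogs without posts are safe.
    int count = postsByBlog[blog.Id].Count();
    Console.WriteLine($"{blog.Title}: {count} post(s)");
}

// Simplified, hypothetical shapes used only for this sketch.
public record Blog(int Id, string Title);
public record Post(int Id, int BlogId, string Title);
```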

Test Case 12: Performance Comparison

What it does: Puts all the approaches head-to-head to show their relative performance.

The Code:

[Test]

public void Test_Performance_Comparison()

{

// N+1 Problem (Multiple Queries)

var blogs1 = _context.Blogs.ToList();

foreach (var blog in blogs1)

{

var count = blog.Posts.Count(); // Triggers separate query

}

// Eager Loading (Single Query)

var blogs2 = _context.Blogs

.Include(b => b.Posts)

.ToList();

// Projection (Minimal Data)

var blogSummaries = _context.Blogs

.Select(b => new { b.Title, PostCount = b.Posts.Count() })

.ToList();

}

The SQL Queries Generated:

N+1 Problem: 4 separate queries (as shown in previous examples)

Eager Loading: 1 JOIN query (as shown in Test Case 5)

Projection: 1 optimized query with subquery:

SELECT "b"."Title", (

SELECT COUNT(*)

FROM "Posts" AS "p"

WHERE "b"."Id" = "p"."BlogId") AS "PostCount"

FROM "Blogs" AS "b"

Real-World Performance Impact

Let’s scale this up to see why it matters. The estimates below assume roughly 10 ms per database round trip:

Small Application (10 blogs):

- N+1 approach: 11 queries (≈110ms)

- Optimized approach: 1 query (≈10ms)

- Time saved: 100ms

Medium Application (100 blogs):

- N+1 approach: 101 queries (≈1,010ms)

- Optimized approach: 1 query (≈10ms)

- Time saved: 1 second

Large Application (1000 blogs):

- N+1 approach: 1001 queries (≈10,010ms)

- Optimized approach: 1 query (≈10ms)

- Time saved: 10 seconds
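These figures follow from a simple linear model: total time is roughly the number of round trips multiplied by the latency of one round trip. Here is a minimal sketch of that arithmetic, assuming a flat 10 ms per query (the value implied by the estimates above; real latency depends on the network, the query, and the result size). `RoundTripModel` is a hypothetical helper used only for illustration.

```csharp
using System;

// Illustrative arithmetic only: assumes every query costs the same flat latency.
const int AssumedLatencyMs = 10;

foreach (var blogCount in new[] { 10, 100, 1000 })
{
    int nPlusOneQueries = RoundTripModel.NPlusOneQueries(blogCount);
    int nPlusOneMs = RoundTripModel.EstimatedMs(nPlusOneQueries, AssumedLatencyMs);
    int optimizedMs = RoundTripModel.EstimatedMs(1, AssumedLatencyMs);

    Console.WriteLine(
        $"{blogCount} blogs: {nPlusOneQueries} queries (~{nPlusOneMs} ms) vs 1 query (~{optimizedMs} ms)");
}

public static class RoundTripModel
{
    // One query for the parent list plus one query per parent row.
    public static int NPlusOneQueries(int parentCount) => parentCount + 1;

    // Linear cost model: round trips multiplied by a fixed per-query latency.
    public static int EstimatedMs(int queryCount, int latencyMs) => queryCount * latencyMs;
}
```

The model ignores the fact that a single JOIN query returns more data than each small query, so treat the results as an order-of-magnitude guide rather than a benchmark.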

Key Takeaways

- The cost of the N+1 problem grows linearly with the number of parent rows: every additional blog adds another round trip

- Lazy loading is convenient but dangerous – it can hide performance problems

- Include() is your friend for loading related data efficiently

- Projection is powerful when you only need specific fields

- Different problems need different solutions – there’s no one-size-fits-all approach

- SQL query inspection is crucial – always check what queries your ORM generates

The Bottom Line

This test suite shows that small changes in how you write database queries can transform a slow, database-heavy operation into a fast, efficient one. The difference between a frustrated user waiting 10 seconds for a page to load and a happy user getting instant results often comes down to understanding and avoiding the N+1 problem.

The beauty of these tests is that they use real database queries with actual SQL output, so you can see exactly what’s happening under the hood. Understanding these patterns will make you a more effective developer and help you build applications that stay fast as they grow.

You can find the source for this article here

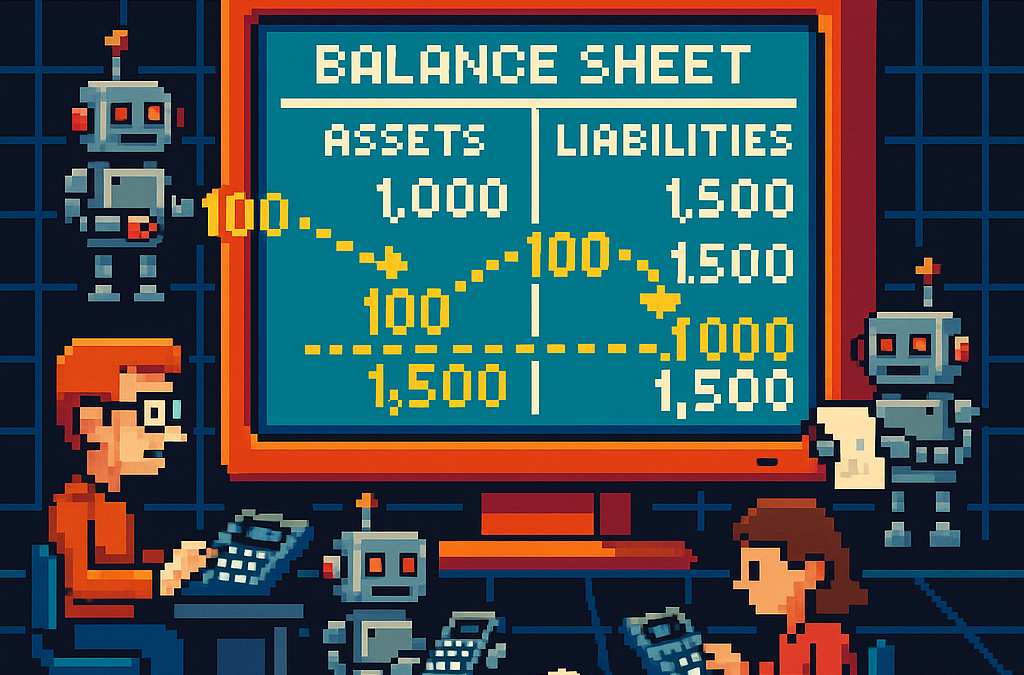

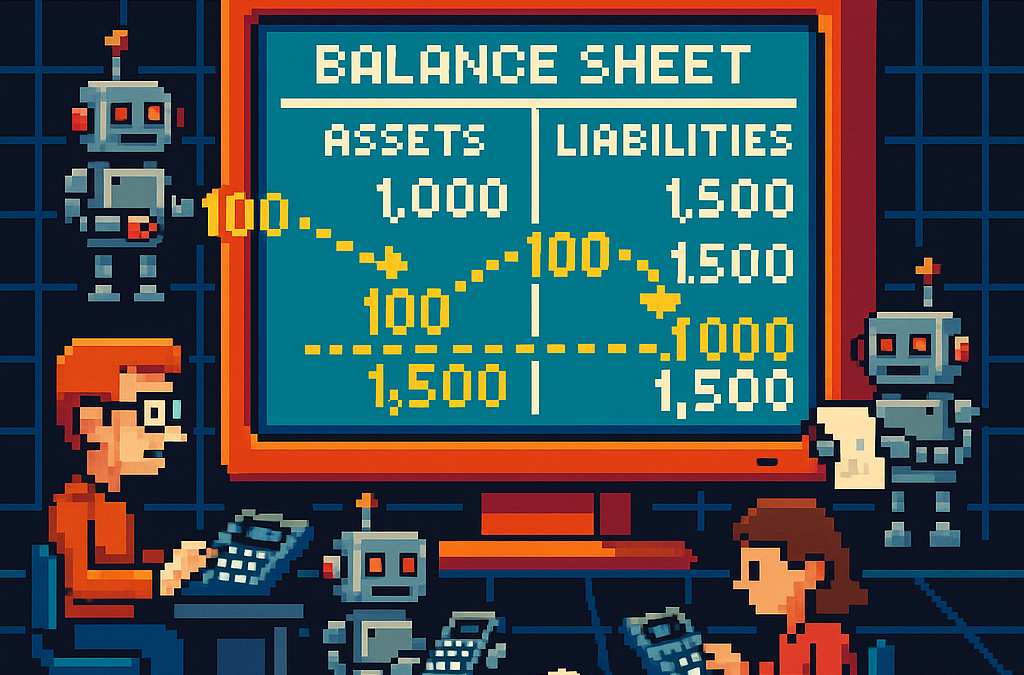

by Joche Ojeda | May 5, 2025 | Uncategorized

Integration testing is a critical phase in software development where individual modules are combined and tested as a group. In our accounting system, we’ve created a robust integration test that demonstrates how the Document module and Chart of Accounts module interact to form a functional accounting system. In this post, I’ll explain the components and workflow of our integration test.

The Architecture of Our Integration Test

Our integration test simulates a small retail business’s accounting operations. Let’s break down the key components:

Test Fixture Setup

The AccountingIntegrationTests class contains all our test methods and is decorated with the [TestFixture] attribute to identify it as an NUnit test fixture. The Setup method initializes our services and data structures:

[SetUp]

public async Task Setup()

{

// Initialize services

_auditService = new AuditService();

_documentService = new DocumentService(_auditService);

_transactionService = new TransactionService();

_accountValidator = new AccountValidator();

_accountBalanceCalculator = new AccountBalanceCalculator();

// Initialize storage

_accounts = new Dictionary<string, AccountDto>();

_documents = new Dictionary<string, IDocument>();

_transactions = new Dictionary<string, ITransaction>();

// Create Chart of Accounts

await SetupChartOfAccounts();

}

This method:

- Creates instances of our services

- Sets up in-memory storage for our entities

- Calls SetupChartOfAccounts() to create our initial chart of accounts

Chart of Accounts Setup

The SetupChartOfAccounts method creates a basic chart of accounts for our retail business:

private async Task SetupChartOfAccounts()

{

// Clear accounts dictionary in case this method is called multiple times

_accounts.Clear();

// Assets (1xxxx)

await CreateAccount("Cash", "10100", AccountType.Asset, "Cash on hand and in banks");

await CreateAccount("Accounts Receivable", "11000", AccountType.Asset, "Amounts owed by customers");

// ... more accounts

// Verify all accounts are valid

foreach (var account in _accounts.Values)

{

bool isValid = _accountValidator.ValidateAccount(account);

Assert.That(isValid, Is.True, $"Account {account.AccountName} validation failed");

}

// Verify expected number of accounts

Assert.That(_accounts.Count, Is.EqualTo(17), "Expected 17 accounts in chart of accounts");

}

This method:

- Creates accounts for each category (Assets, Liabilities, Equity, Revenue, and Expenses)

- Validates each account using our AccountValidator

- Ensures we have the expected number of accounts

Individual Transaction Tests

We have separate test methods for specific transaction types:

Purchase of Inventory

CanRecordPurchaseOfInventory demonstrates recording a supplier invoice:

[Test]

public async Task CanRecordPurchaseOfInventory()

{

// Arrange - Create document

var document = new DocumentDto { /* properties */ };

// Act - Create document, transaction, and entries

var createdDocument = await _documentService.CreateDocumentAsync(document, TEST_USER);

// ... create transaction and entries

// Validate transaction

var isValid = await _transactionService.ValidateTransactionAsync(

createdTransaction.Id, ledgerEntries);

// Assert

Assert.That(isValid, Is.True, "Transaction should be balanced");

}

This test:

- Creates a document for our inventory purchase

- Creates a transaction linked to that document

- Creates ledger entries (debiting Inventory, crediting Accounts Payable)

- Validates that the transaction is balanced (debits = credits)
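The balance rule in the last step is easy to state in code. Here is a minimal sketch, assuming a simplified entry shape. `Entry`, `EntryType`, and `Ledger.IsBalanced` are hypothetical names for illustration, not the project's `ILedgerEntry` or its `ValidateTransactionAsync` implementation, and the amounts are made up.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Inventory purchase: debit Inventory, credit Accounts Payable.
var purchase = new List<Entry>
{
    new("Inventory", EntryType.Debit, 1000m),
    new("Accounts Payable", EntryType.Credit, 1000m),
};

Console.WriteLine(Ledger.IsBalanced(purchase)); // prints "True"

public enum EntryType { Debit, Credit }

public record Entry(string Account, EntryType Type, decimal Amount);

public static class Ledger
{
    // Balanced means: at least one entry, and total debits equal total credits.
    public static bool IsBalanced(IEnumerable<Entry> entries)
    {
        var list = entries.ToList();
        decimal debits = list.Where(e => e.Type == EntryType.Debit).Sum(e => e.Amount);
        decimal credits = list.Where(e => e.Type == EntryType.Credit).Sum(e => e.Amount);
        return list.Count > 0 && debits == credits;
    }
}
```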

Sale to Customer

CanRecordSaleToCustomer demonstrates recording a customer sale:

[Test]

public async Task CanRecordSaleToCustomer()

{

// Similar pattern to inventory purchase, but with sale-specific entries

// ...

// Create ledger entries - a more complex transaction with multiple entries

var ledgerEntries = new List<ILedgerEntry>

{

// Cash received

// Sales revenue

// Cost of goods sold

// Reduce inventory

};

// Validate transaction

// ...

}

This test is more complex, recording both the revenue side (debit Cash, credit Sales Revenue) and the cost side (debit Cost of Goods Sold, credit Inventory) of a sale.

Full Accounting Cycle Test

The CanExecuteFullAccountingCycle method ties everything together:

[Test]

public async Task CanExecuteFullAccountingCycle()

{

// Run these in a defined order, with clean account setup first

_accounts.Clear();

_documents.Clear();

_transactions.Clear();

await SetupChartOfAccounts();

// 1. Record inventory purchase

await RecordPurchaseOfInventory();

// 2. Record sale to customer

await RecordSaleToCustomer();

// 3. Record utility expense

await RecordBusinessExpense();

// 4. Create a payment to supplier

await RecordPaymentToSupplier();

// 5. Verify account balances

await VerifyAccountBalances();

}

This test:

- Starts with a clean state

- Records a sequence of business operations

- Verifies the final account balances

Mock Account Balance Calculator

The MockAccountBalanceCalculator is a crucial part of our test that simulates how a real database would work:

public class MockAccountBalanceCalculator : AccountBalanceCalculator

{

private readonly Dictionary<string, AccountDto> _accounts;

private readonly Dictionary<Guid, List<LedgerEntryDto>> _ledgerEntriesByTransaction = new();

private readonly Dictionary<Guid, decimal> _accountBalances = new();

public MockAccountBalanceCalculator(

Dictionary<string, AccountDto> accounts,

Dictionary<string, ITransaction> transactions)

{

_accounts = accounts;

// Create mock ledger entries for each transaction

InitializeLedgerEntries(transactions);

// Calculate account balances based on ledger entries

CalculateAllBalances();

}

// Methods to initialize and calculate

// ...

}

This class:

- Takes our accounts and transactions as inputs

- Creates a collection of ledger entries for each transaction

- Calculates account balances based on these entries

- Provides methods to query account balances and ledger entries

The InitializeLedgerEntries method creates a collection of ledger entries for each transaction:

private void InitializeLedgerEntries(Dictionary<string, ITransaction> transactions)

{

// For inventory purchase

if (transactions.TryGetValue("InventoryPurchase", out var inventoryPurchase))

{

var entries = new List<LedgerEntryDto>

{

// Create entries for this transaction

// ...

};

_ledgerEntriesByTransaction[inventoryPurchase.Id] = entries;

}

// For other transactions

// ...

}

The CalculateAllBalances method processes these entries to calculate account balances:

private void CalculateAllBalances()

{

// Initialize all account balances to zero

foreach (var account in _accounts.Values)

{

_accountBalances[account.Id] = 0m;

}

// Process each transaction's ledger entries

foreach (var entries in _ledgerEntriesByTransaction.Values)

{

foreach (var entry in entries)

{

if (entry.EntryType == EntryType.Debit)

{

_accountBalances[entry.AccountId] += entry.Amount;

}

else // Credit

{

_accountBalances[entry.AccountId] -= entry.Amount;

}

}

}

}

This approach closely mirrors how a real accounting system would work with a database:

- Ledger entries are stored in collections (similar to database tables)

- Account balances are calculated by processing all relevant entries

- The calculator provides methods to query this data (similar to a repository)

Balance Verification

The VerifyAccountBalances method uses our mock calculator to verify account balances:

private async Task VerifyAccountBalances()

{

// Create mock balance calculator

var mockBalanceCalculator = new MockAccountBalanceCalculator(_accounts, _transactions);

// Verify individual account balances

decimal cashBalance = mockBalanceCalculator.CalculateAccountBalance(

_accounts["Cash"].Id,

_testDate.AddDays(15)

);

Assert.That(cashBalance, Is.EqualTo(-2750m), "Cash balance is incorrect");

// ... verify other account balances

// Also verify the accounting equation

// ...

}

The Benefits of Our Collection-Based Approach

Our redesigned MockAccountBalanceCalculator offers several advantages:

- Data-Driven: All calculations are based on collections of data, not hardcoded values.

- Flexible: New transactions can be added easily without changing calculation logic.

- Maintainable: If transaction amounts change, we only need to update them in one place.

- Realistic: This approach closely mirrors how a real database-backed accounting system would work.

- Extensible: We can add support for more complex queries like filtering by date range.

The Goals of Our Integration Test

Our integration test serves several important purposes:

- Verify Module Integration: Ensures that the Document module and Chart of Accounts module work correctly together.

- Validate Business Workflows: Confirms that standard accounting workflows (purchasing, sales, expenses, payments) function as expected.

- Ensure Data Integrity: Verifies that all transactions maintain balance (debits = credits) and that account balances are accurate.

- Test Double-Entry Accounting: Confirms that our system properly implements double-entry accounting principles where every transaction affects at least two accounts.

- Validate Accounting Equation: Ensures that the fundamental accounting equation (Assets = Liabilities + Equity + (Revenues – Expenses)) remains balanced.
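The accounting equation check in the last goal can be sketched in a few lines. This is an illustration under assumptions: `Totals` and `AccountingEquation` are hypothetical names, the figures are made up, and each total is expressed as a positive amount on its normal side rather than in the signed form the mock calculator uses.

```csharp
using System;

// Made-up totals per account type, each as a positive amount.
var totals = new Totals(Assets: 5000m, Liabilities: 1500m, Equity: 3000m, Revenue: 1200m, Expenses: 700m);

// 5000 = 1500 + 3000 + (1200 - 700)
Console.WriteLine(AccountingEquation.Holds(totals)); // prints "True"

public record Totals(decimal Assets, decimal Liabilities, decimal Equity, decimal Revenue, decimal Expenses);

public static class AccountingEquation
{
    // Assets = Liabilities + Equity + (Revenue - Expenses)
    public static bool Holds(Totals t) =>
        t.Assets == t.Liabilities + t.Equity + (t.Revenue - t.Expenses);
}
```

Using `decimal` keeps the equality check exact, which would not be safe with `double`.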

Conclusion

This integration test demonstrates the core functionality of our accounting system using a data-driven approach that closely mimics a real database. By simulating a retail business’s transactions and storing them in collections, we’ve created a realistic test environment for our double-entry accounting system.

The collection-based approach in our MockAccountBalanceCalculator allows us to test complex accounting logic without an actual database, while still ensuring that our calculations are accurate and our accounting principles are sound.

While this test uses in-memory collections rather than a database, it provides a strong foundation for testing the business logic of our accounting system in a way that would translate easily to a real-world implementation.

Repo

egarim/SivarErp: Open Source ERP

About Us

YouTube

https://www.youtube.com/c/JocheOjedaXAFXAMARINC

Our sites

Let’s discuss your XAF

This call/zoom will give you the opportunity to define the roadblocks in your current XAF solution. We can talk about performance, deployment or custom implementations. Together we will review your pain points and leave you with recommendations to get your app back on track.

https://calendly.com/bitframeworks/bitframeworks-free-xaf-support-hour

Our free A.I courses on Udemy

by Joche Ojeda | May 5, 2025 | Uncategorized

The chart of accounts module is a critical component of any financial accounting system, serving as the organizational structure that categorizes financial transactions. As a software developer working on accounting applications, understanding how to properly implement a chart of accounts module is essential for creating robust and effective financial management solutions.

What is a Chart of Accounts?

Before diving into the implementation details, let’s clarify what a chart of accounts is. In accounting, the chart of accounts is a structured list of all accounts used by an organization to record financial transactions. These accounts are categorized by type (assets, liabilities, equity, revenue, and expenses) and typically follow a numbering system to facilitate organization and reporting.

Core Components of a Chart of Accounts Module

Based on best practices in financial software development, a well-designed chart of accounts module should include:

1. Account Entity

The fundamental entity in the module is the account itself. A properly designed account entity should include:

- A unique identifier (typically a GUID in modern systems)

- Account name

- Account type (asset, liability, equity, revenue, expense)

- Official account code (often used for regulatory reporting)

- Reference to financial statement lines

- Audit information (who created/modified the account and when)

- Archiving capability (for soft deletion)

2. Account Type Enumeration

Account types are typically implemented as an enumeration:

public enum AccountType

{

Asset = 1,

Liability = 2,

Equity = 3,

Revenue = 4,

Expense = 5

}

This enumeration serves as more than just a label—it determines critical business logic, such as whether an account normally has a debit or credit balance.
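As an example of that business logic, here is a minimal sketch that maps each account type to its normal balance side. `BalanceSide` and `AccountRules.NormalSide` are hypothetical names, not part of the module described here; the mapping itself is the standard double-entry convention.

```csharp
using System;

Console.WriteLine(AccountRules.NormalSide(AccountType.Asset));   // prints "Debit"
Console.WriteLine(AccountRules.NormalSide(AccountType.Revenue)); // prints "Credit"

public enum AccountType { Asset = 1, Liability = 2, Equity = 3, Revenue = 4, Expense = 5 }

public enum BalanceSide { Debit, Credit }

public static class AccountRules
{
    // Assets and expenses normally carry debit balances;
    // liabilities, equity, and revenue normally carry credit balances.
    public static BalanceSide NormalSide(AccountType type) => type switch
    {
        AccountType.Asset or AccountType.Expense => BalanceSide.Debit,
        AccountType.Liability or AccountType.Equity or AccountType.Revenue => BalanceSide.Credit,
        _ => throw new ArgumentOutOfRangeException(nameof(type), type, "Unknown account type"),
    };
}
```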

3. Account Validation

A robust chart of accounts module includes validation logic for accounts:

- Ensuring account codes follow the required format (typically numeric)

- Verifying that account codes align with their account types (e.g., asset accounts starting with “1”)

- Validating consistency between account types and financial statement lines

- Checking that account names are not empty and are unique

4. Balance Calculation

One of the most important functions of the chart of accounts module is calculating account balances:

- Point-in-time balance calculations (as of a specific date)

- Period turnover calculations (debit and credit movement within a date range)

- Determining if an account has any transactions
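The first two calculations can be sketched over an in-memory list of entries. This is an illustration only: `LedgerEntry` and `Balances` are hypothetical names, the data is made up, and the sketch assumes a debit-positive convention where debits add to the balance and credits subtract from it.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Made-up entries for a single account: (date, isDebit, amount).
var entries = new List<LedgerEntry>
{
    new(new DateTime(2025, 1, 5), true, 1000m),
    new(new DateTime(2025, 1, 20), false, 250m),
    new(new DateTime(2025, 2, 3), true, 400m),
};

// Point-in-time balance as of 31 January: 1000 - 250.
Console.WriteLine(Balances.AsOf(entries, new DateTime(2025, 1, 31))); // prints "750"

// Period turnover: debit and credit movement during February only.
var (debits, credits) = Balances.Turnover(entries, new DateTime(2025, 2, 1), new DateTime(2025, 2, 28));
Console.WriteLine($"{debits} / {credits}"); // prints "400 / 0"

public record LedgerEntry(DateTime Date, bool IsDebit, decimal Amount);

public static class Balances
{
    // Debit-positive convention: debits add, credits subtract.
    public static decimal AsOf(IEnumerable<LedgerEntry> entries, DateTime asOf) =>
        entries.Where(e => e.Date <= asOf)
               .Sum(e => e.IsDebit ? e.Amount : -e.Amount);

    // Returns total debits and total credits with dates in [start, end], both inclusive.
    public static (decimal Debits, decimal Credits) Turnover(
        IEnumerable<LedgerEntry> entries, DateTime start, DateTime end)
    {
        var inRange = entries.Where(e => e.Date >= start && e.Date <= end).ToList();
        return (inRange.Where(e => e.IsDebit).Sum(e => e.Amount),
                inRange.Where(e => !e.IsDebit).Sum(e => e.Amount));
    }
}
```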

Implementation Best Practices

When implementing a chart of accounts module, consider these best practices:

1. Use Interface-Based Design

Implement interfaces like IAccount to define the contract for account entities:

public interface IAccount : IEntity, IAuditable, IArchivable

{

Guid? BalanceAndIncomeLineId { get; set; }

string AccountName { get; set; }

AccountType AccountType { get; set; }

string OfficialCode { get; set; }

}

2. Apply SOLID Principles

- Single Responsibility: Separate account validation, balance calculation, and persistence

- Open-Closed: Design for extension without modification (e.g., for custom account types)

- Liskov Substitution: Ensure derived implementations can substitute base interfaces

- Interface Segregation: Create focused interfaces for different concerns

- Dependency Inversion: Depend on abstractions rather than concrete implementations

3. Implement Comprehensive Validation

Account validation should be thorough to prevent data inconsistencies:

public bool ValidateAccountCode(string accountCode, AccountType accountType)

{

if (string.IsNullOrWhiteSpace(accountCode))

return false;

// Account code should be numeric

if (!accountCode.All(char.IsDigit))

return false;

// Check that account code prefix matches account type

char expectedPrefix = GetExpectedPrefix(accountType);

return accountCode.Length > 0 && accountCode[0] == expectedPrefix;

}
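The snippet above calls GetExpectedPrefix, which is not shown. Here is one plausible shape, assuming the first digit of the code equals the numeric value of the AccountType enum. That matches asset accounts starting with “1”, but it is an assumption for the other types, and `AccountCodeRules` is a hypothetical class name. The sketch also narrows the digit check to ASCII digits, since char.IsDigit accepts digits from other scripts as well.

```csharp
using System;
using System.Linq;

Console.WriteLine(AccountCodeRules.ValidateAccountCode("10100", AccountType.Asset));     // prints "True"
Console.WriteLine(AccountCodeRules.ValidateAccountCode("10100", AccountType.Liability)); // prints "False"
Console.WriteLine(AccountCodeRules.ValidateAccountCode("1A100", AccountType.Asset));     // prints "False"

public enum AccountType { Asset = 1, Liability = 2, Equity = 3, Revenue = 4, Expense = 5 }

public static class AccountCodeRules
{
    // Assumption: the leading digit equals the enum's numeric value (Asset -> '1', ...).
    public static char GetExpectedPrefix(AccountType accountType) =>
        (char)('0' + (int)accountType);

    public static bool ValidateAccountCode(string accountCode, AccountType accountType)
    {
        if (string.IsNullOrWhiteSpace(accountCode))
            return false;

        // Restrict to ASCII digits only.
        if (!accountCode.All(c => c is >= '0' and <= '9'))
            return false;

        // The first digit must match the prefix expected for this account type.
        return accountCode[0] == GetExpectedPrefix(accountType);
    }
}
```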

4. Integrate with Financial Reporting

The chart of accounts should map accounts to financial statement lines for reporting:

- Balance sheet lines

- Income statement lines

- Cash flow statement lines

- Equity statement lines

Testing the Chart of Accounts Module

Comprehensive testing is crucial for a chart of accounts module:

- Unit Tests: Test individual components like account validation and balance calculation

- Integration Tests: Verify that components work together properly

- Business Rule Tests: Ensure business rules like “assets have debit balances” are enforced

- Persistence Tests: Confirm correct database interaction

Common Challenges and Solutions

When working with a chart of accounts module, you might encounter:

1. Account Code Standardization

Challenge: Different jurisdictions may have different account coding requirements.

Solution: Implement a flexible validation system that can be configured for different accounting standards.

2. Balance Calculation Performance

Challenge: Balance calculations for accounts with many transactions can be slow.

Solution: Implement caching strategies and consider storing period-end balances for faster reporting.

3. Account Hierarchies

Challenge: Supporting account hierarchies for reporting.

Solution: Implement a nested set model or closure table for efficient hierarchy querying.
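To make the closure table idea concrete, here is a minimal in-memory sketch. It stores one row per ancestor/descendant pair, so finding everything under an account becomes a single filter. The account codes, `ClosureRow`, and `AccountHierarchy` are hypothetical; the sketch assumes the hierarchy has no cycles and, unlike many closure table designs, does not store self-referencing rows.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Child code -> parent code (made-up hierarchy).
var parentOf = new Dictionary<string, string>
{
    ["10100"] = "10000", // Cash under Current Assets
    ["11000"] = "10000", // Accounts Receivable under Current Assets
    ["10000"] = "1",     // Current Assets under Assets
};

var closure = AccountHierarchy.BuildClosure(parentOf);

// All descendants of "1" come from one filter, with no recursion at query time.
var descendants = closure
    .Where(r => r.Ancestor == "1")
    .Select(r => r.Descendant)
    .OrderBy(code => code, StringComparer.Ordinal);

Console.WriteLine(string.Join(", ", descendants)); // prints "10000, 10100, 11000"

public record ClosureRow(string Ancestor, string Descendant, int Depth);

public static class AccountHierarchy
{
    // Emits one row per (ancestor, descendant) pair by walking each node up to the root.
    public static List<ClosureRow> BuildClosure(IReadOnlyDictionary<string, string> parentOf)
    {
        var rows = new List<ClosureRow>();
        foreach (var node in parentOf.Keys)
        {
            var current = node;
            var depth = 0;
            while (parentOf.TryGetValue(current, out var parent))
            {
                depth++;
                rows.Add(new ClosureRow(parent, node, depth));
                current = parent;
            }
        }
        return rows;
    }
}
```

In a database, the same rows would live in their own table and be maintained whenever an account is moved or added.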

Conclusion

A well-designed chart of accounts module is the foundation of a reliable accounting system. By following these implementation guidelines and understanding the core concepts, you can create a flexible, maintainable, and powerful chart of accounts that will serve as the backbone of your financial accounting application.

Remember that the chart of accounts is not just a technical construct—it should reflect the business needs and reporting requirements of the organization using the system. Taking time to properly design this module will pay dividends throughout the life of your application.

Repo

egarim/SivarErp: Open Source ERP


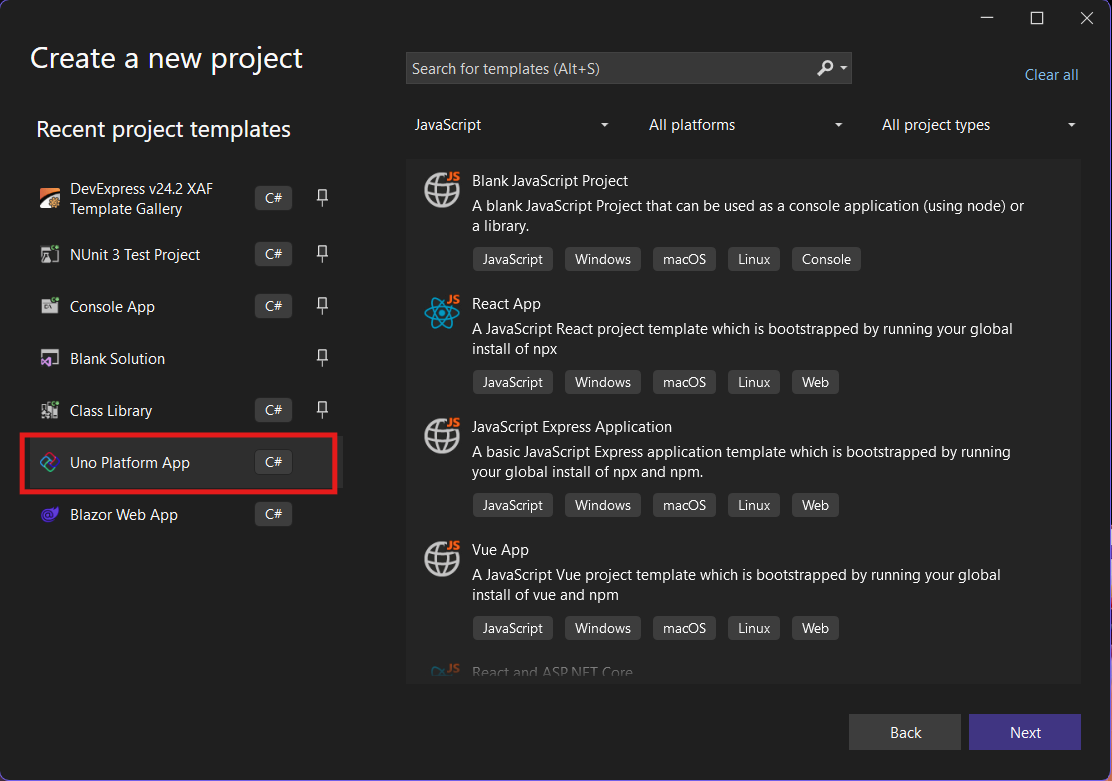

by Joche Ojeda | Mar 13, 2025 | netcore, Uno Platform

For the past two weeks, I’ve been experimenting with the Uno Platform in two ways: creating small prototypes to explore features I’m curious about and downloading example applications from the Uno Gallery. In this article, I’ll explain the first steps you need to take when creating an Uno Platform application, the decisions you’ll face, and what I’ve found useful so far in my journey.

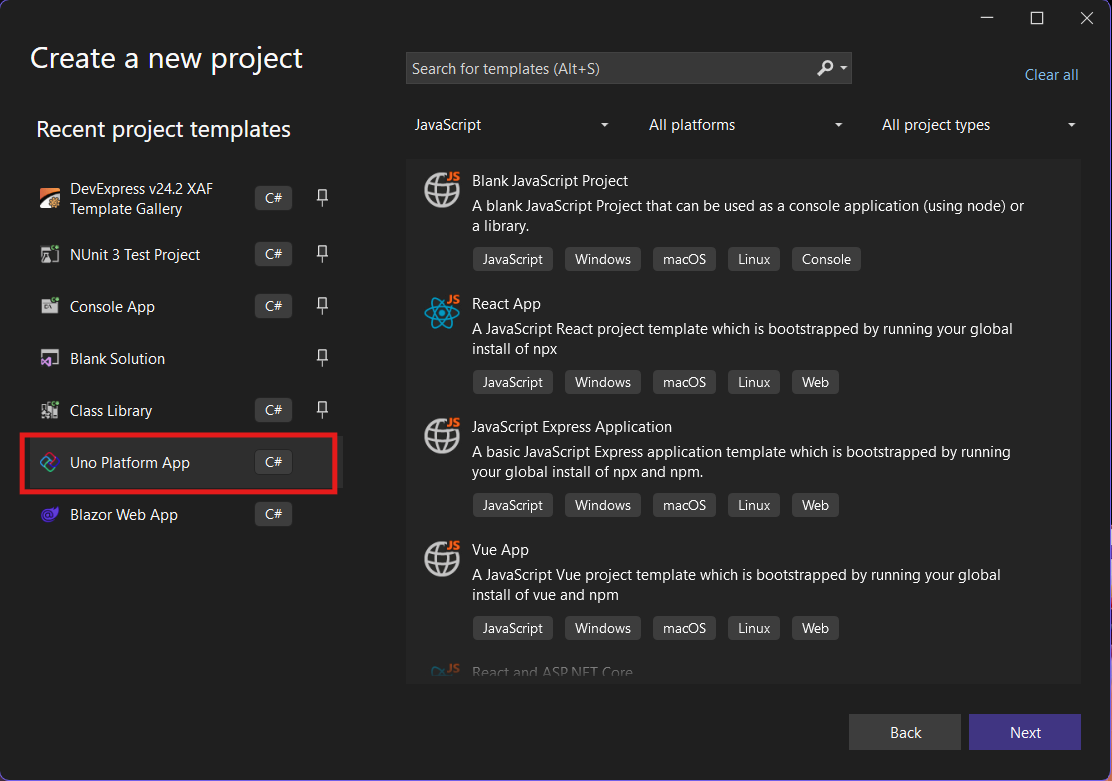

Step 1: Create a New Project

I’m using Visual Studio 2022, though the extensions and templates work well with previous versions too. I have two Visual Studio versions installed, and Uno Platform works well in both.

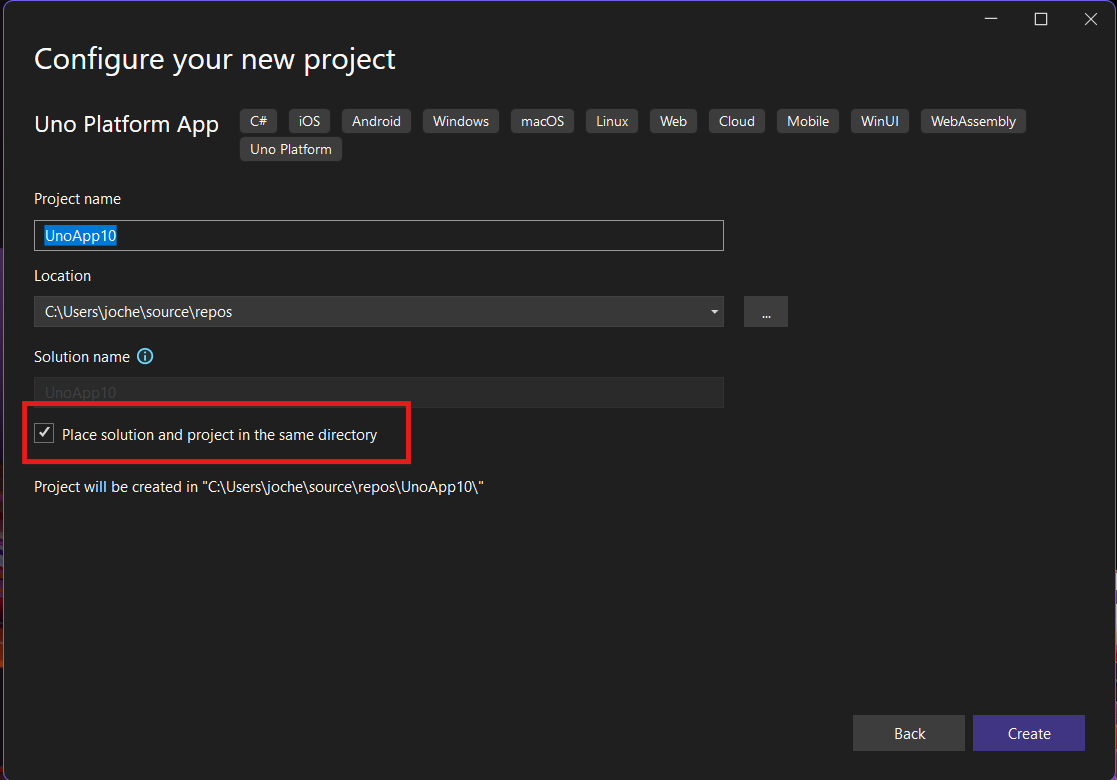

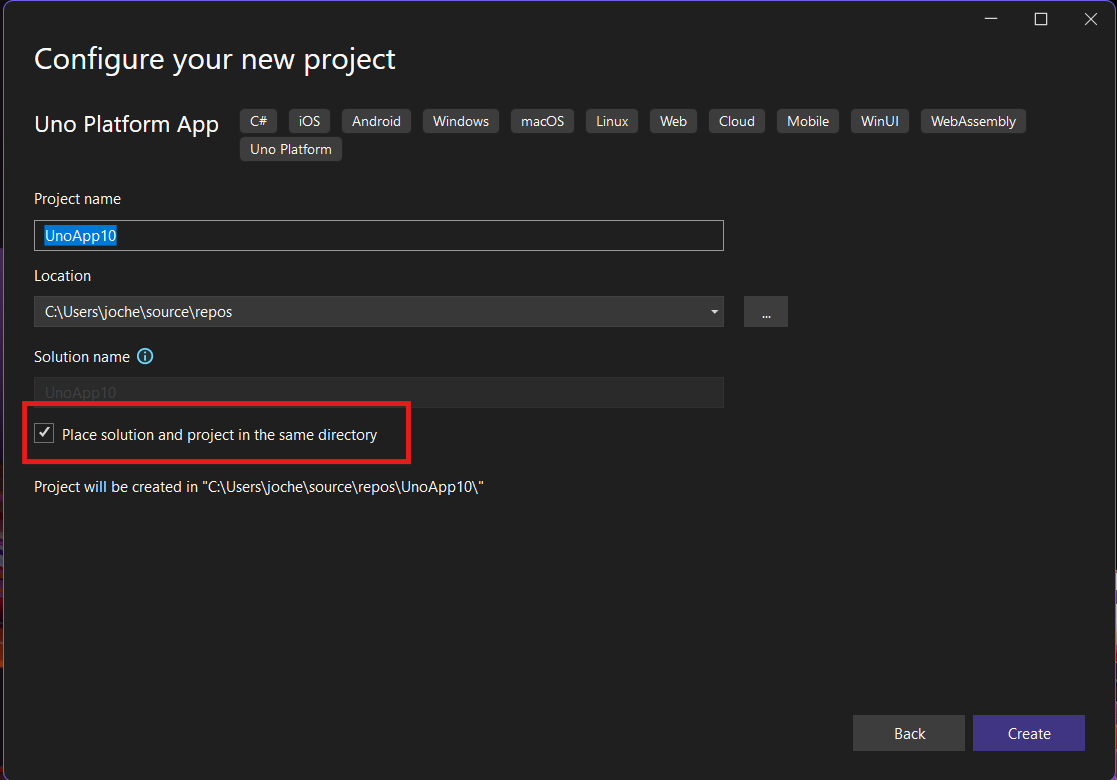

Step 2: Project Setup

After naming your project, it’s important to select “Place solution and project in the same directory” because of the solution layout requirements. You need the directory properties file to move forward. I’ll talk more about the solution structure in a future post, but for now, know that without checking this option, you won’t be able to proceed properly.

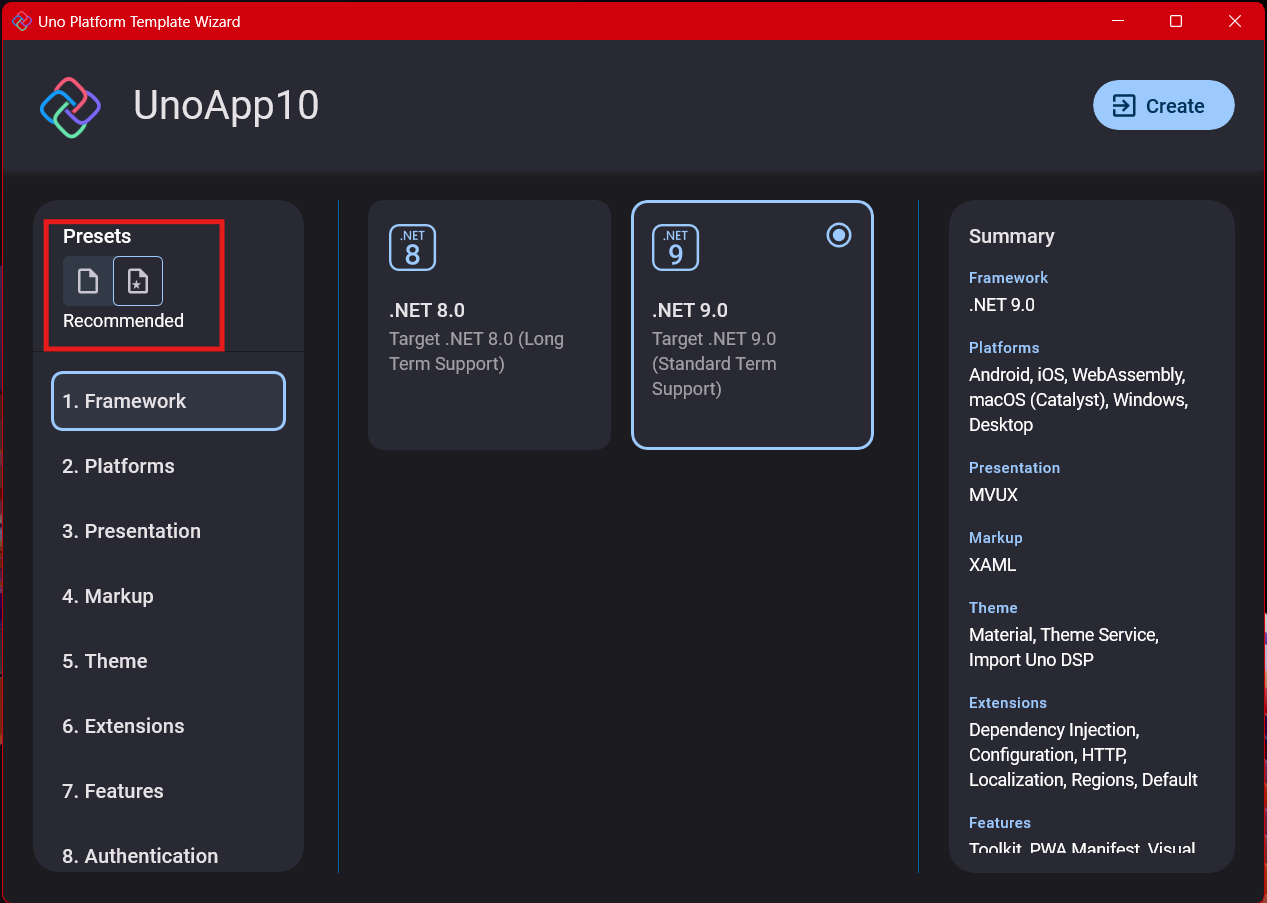

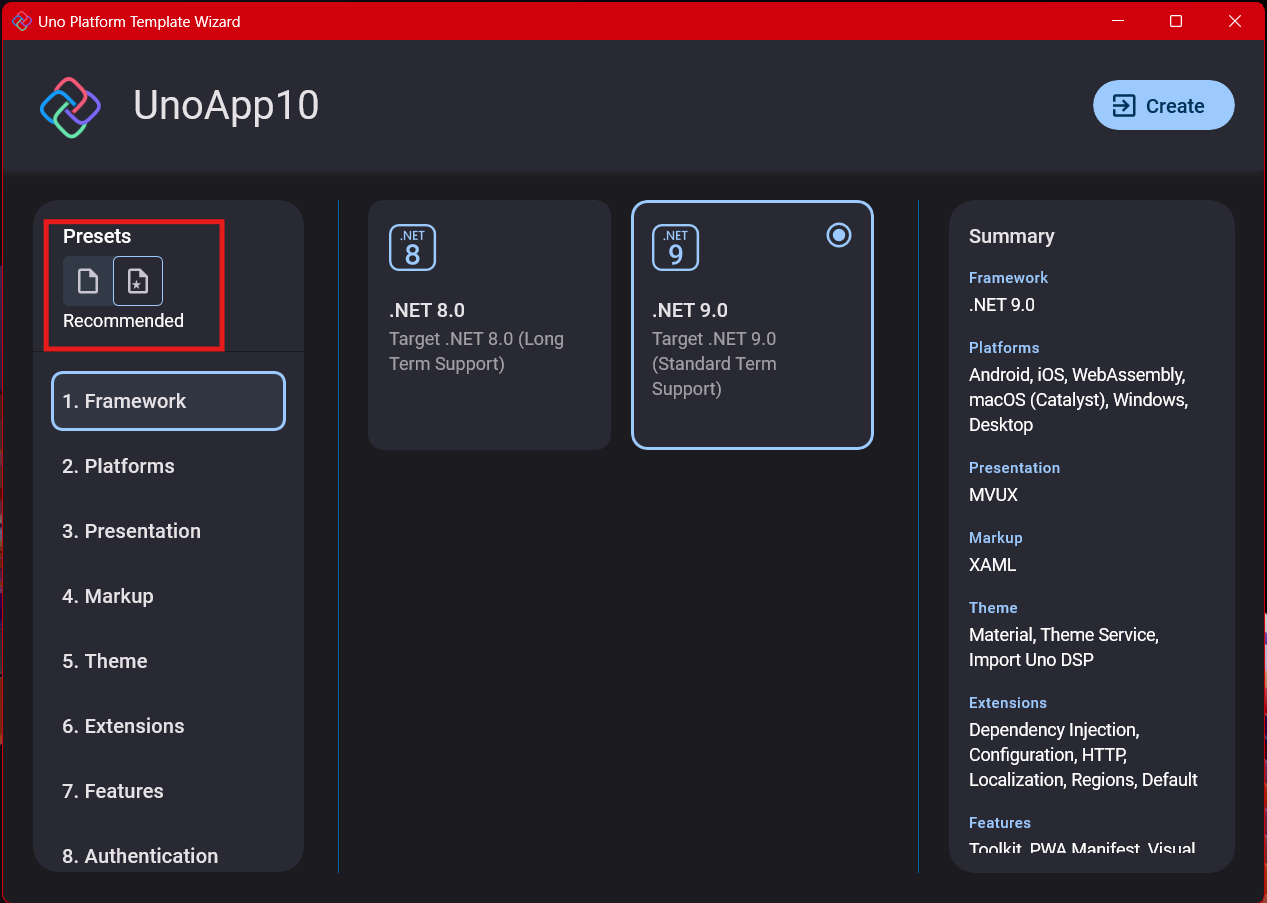

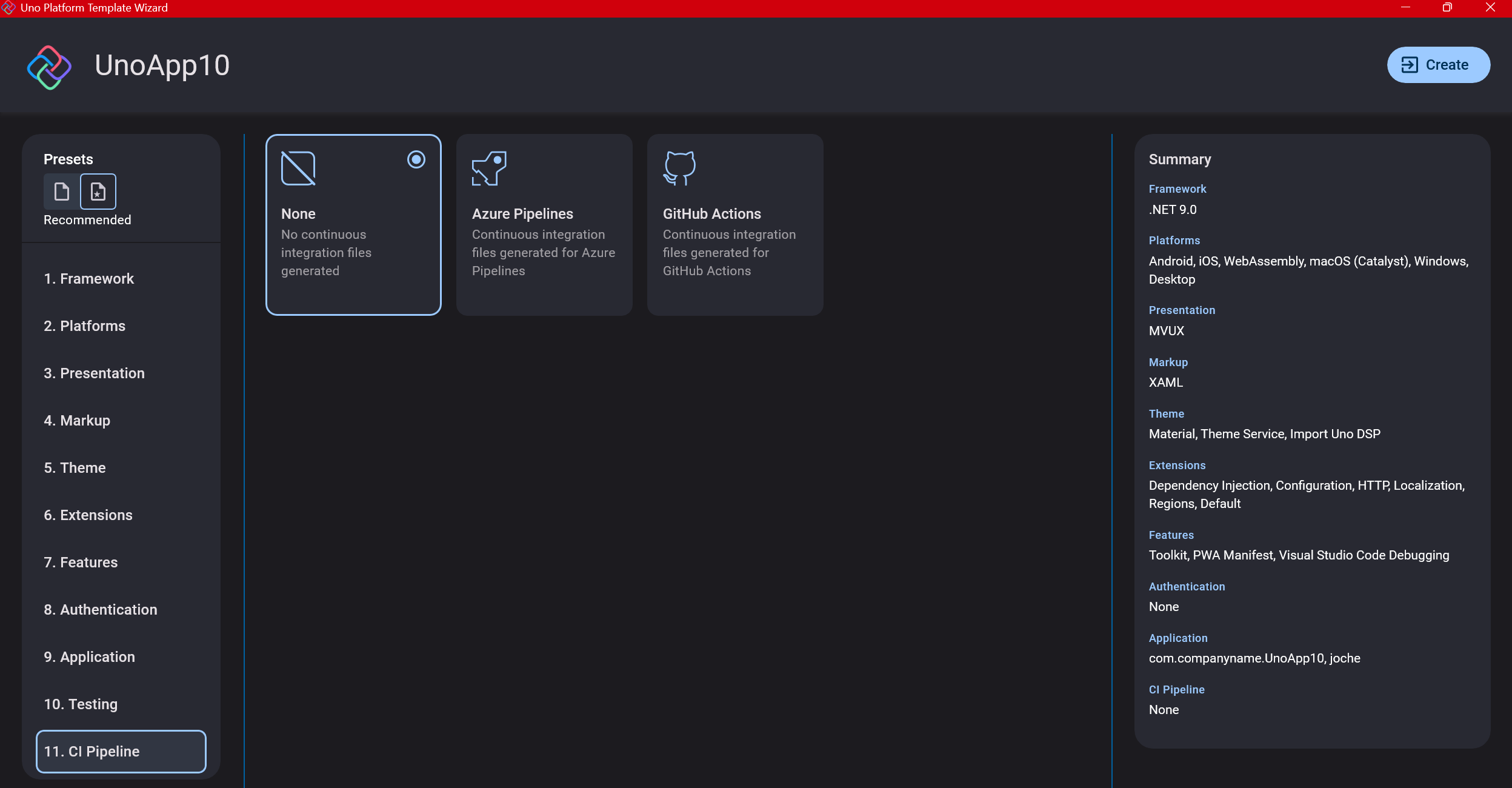

Step 3: The Configuration Wizard

The Uno Platform team has created a comprehensive wizard that guides you through various configuration options. It might seem overwhelming at first, but it’s better to have this guided approach where you can make one decision at a time.

Your first decision is which target framework to use. The Uno team recommends .NET 9, which I like, but in my test project I’m working with .NET 8 because I’m primarily focused on WebAssembly output. Uno offers multi-threading in WebAssembly with .NET 8, which is why I chose it, but for new projects .NET 9 is likely the better choice.

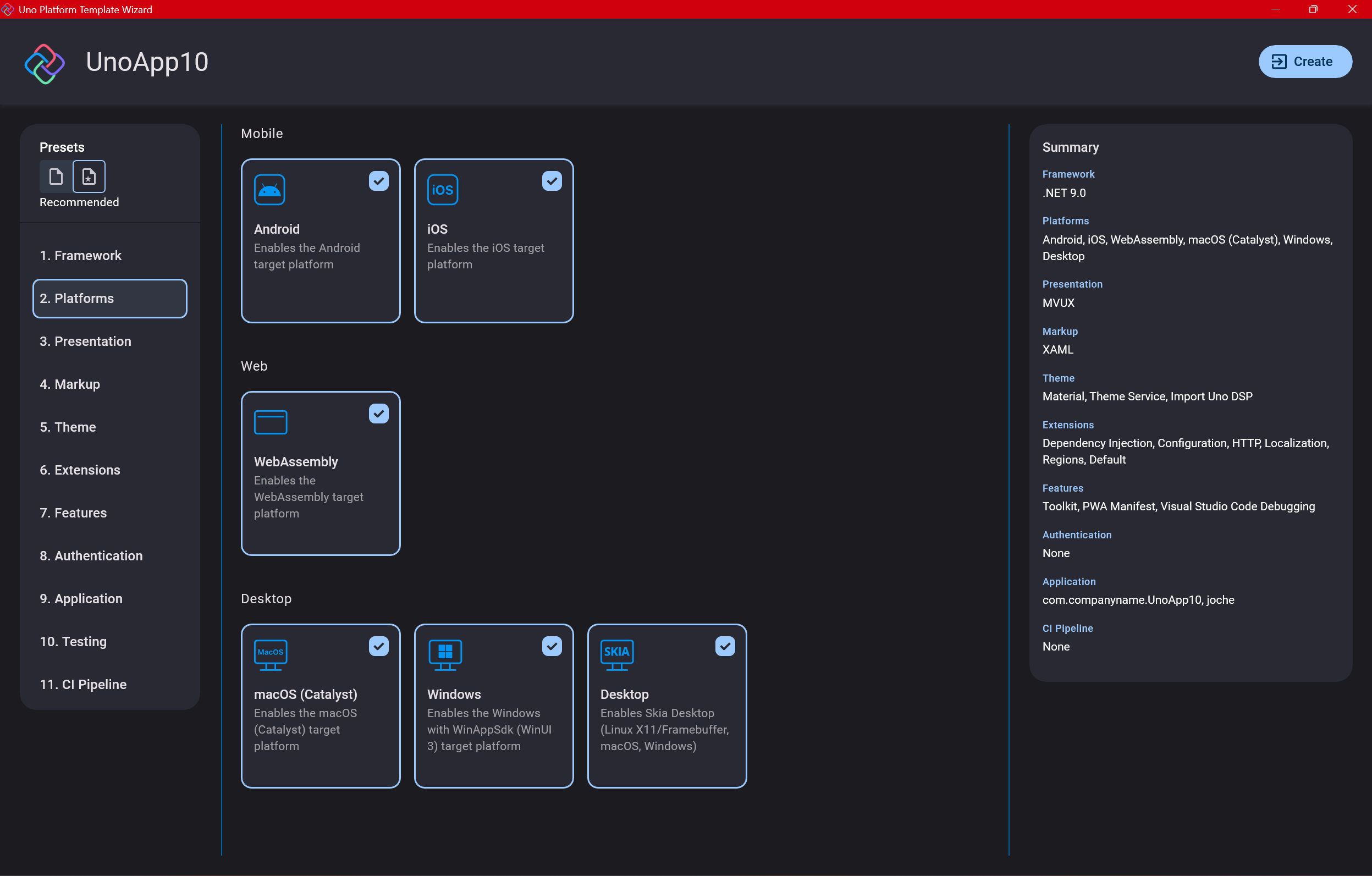

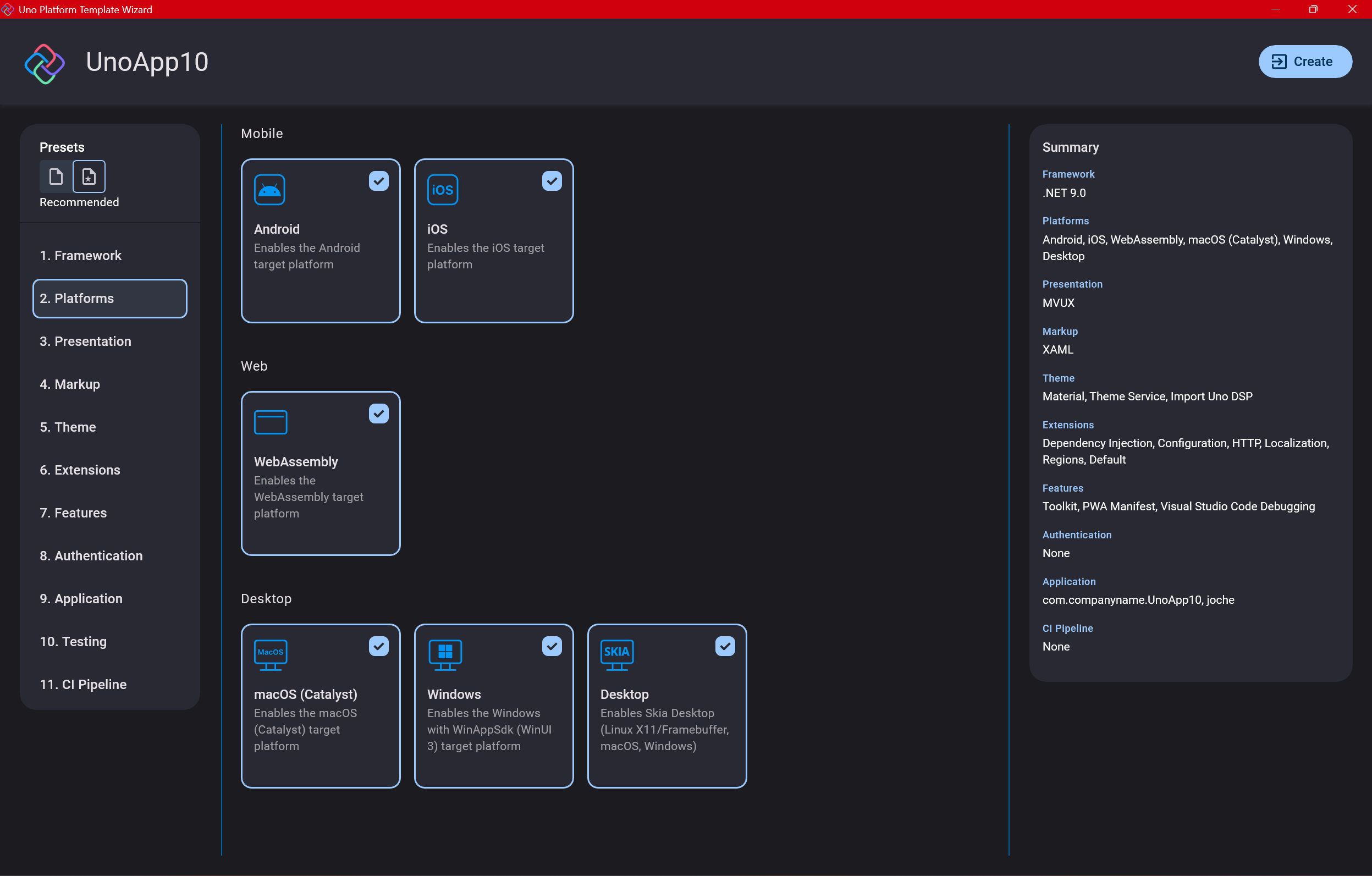

Step 4: Target Platforms

Next, you need to select which platforms you want to target. I always select all of them because the most beautiful aspect of the Uno Platform is true multi-targeting with a single codebase.

In the past (during the Xamarin era), you needed multiple projects with a complex directory structure. With Uno, it’s actually a single unified project, creating a clean solution layout. So while you can select just WebAssembly if that’s your only focus, I think you get the most out of Uno by multi-targeting.

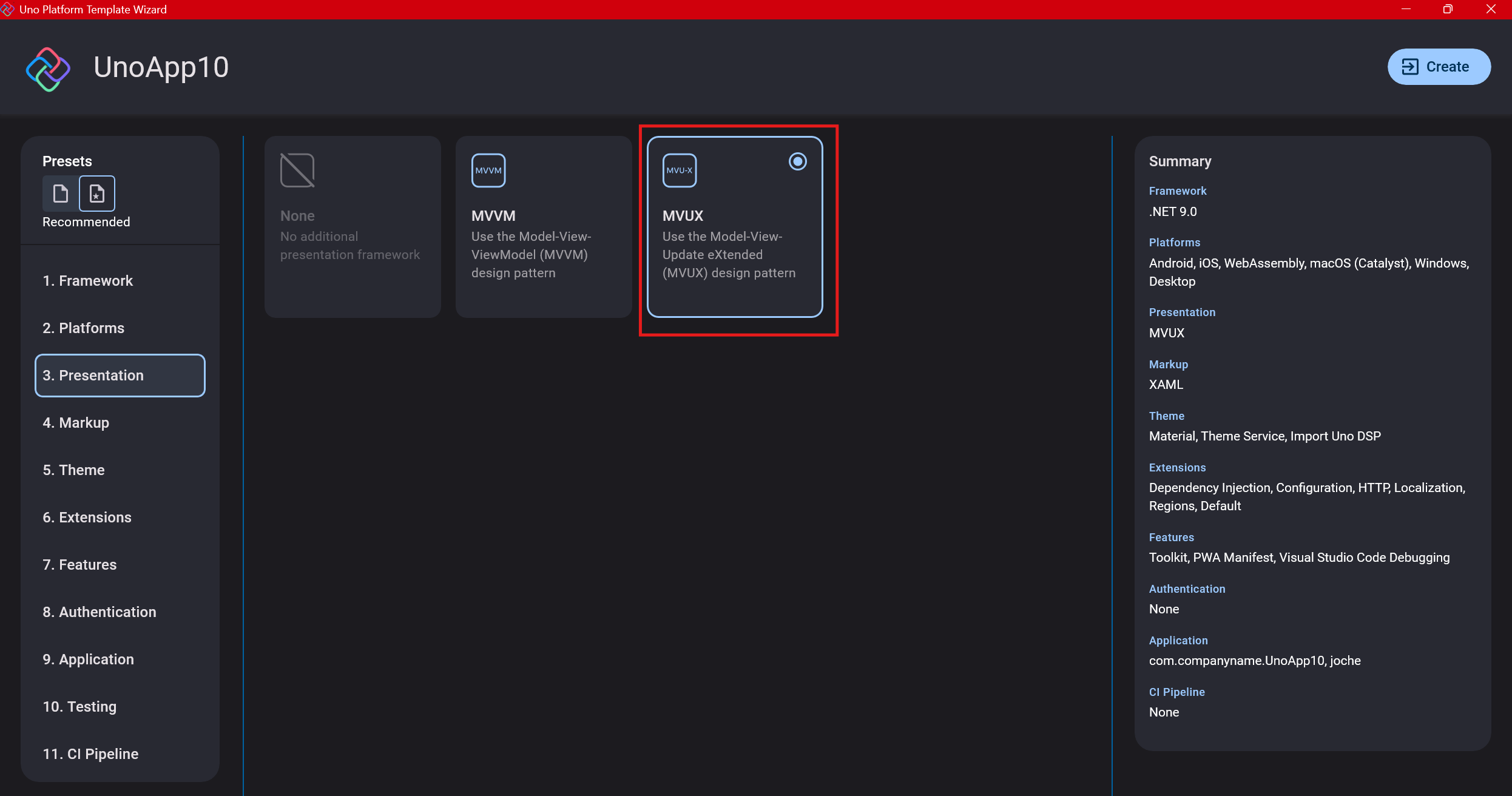

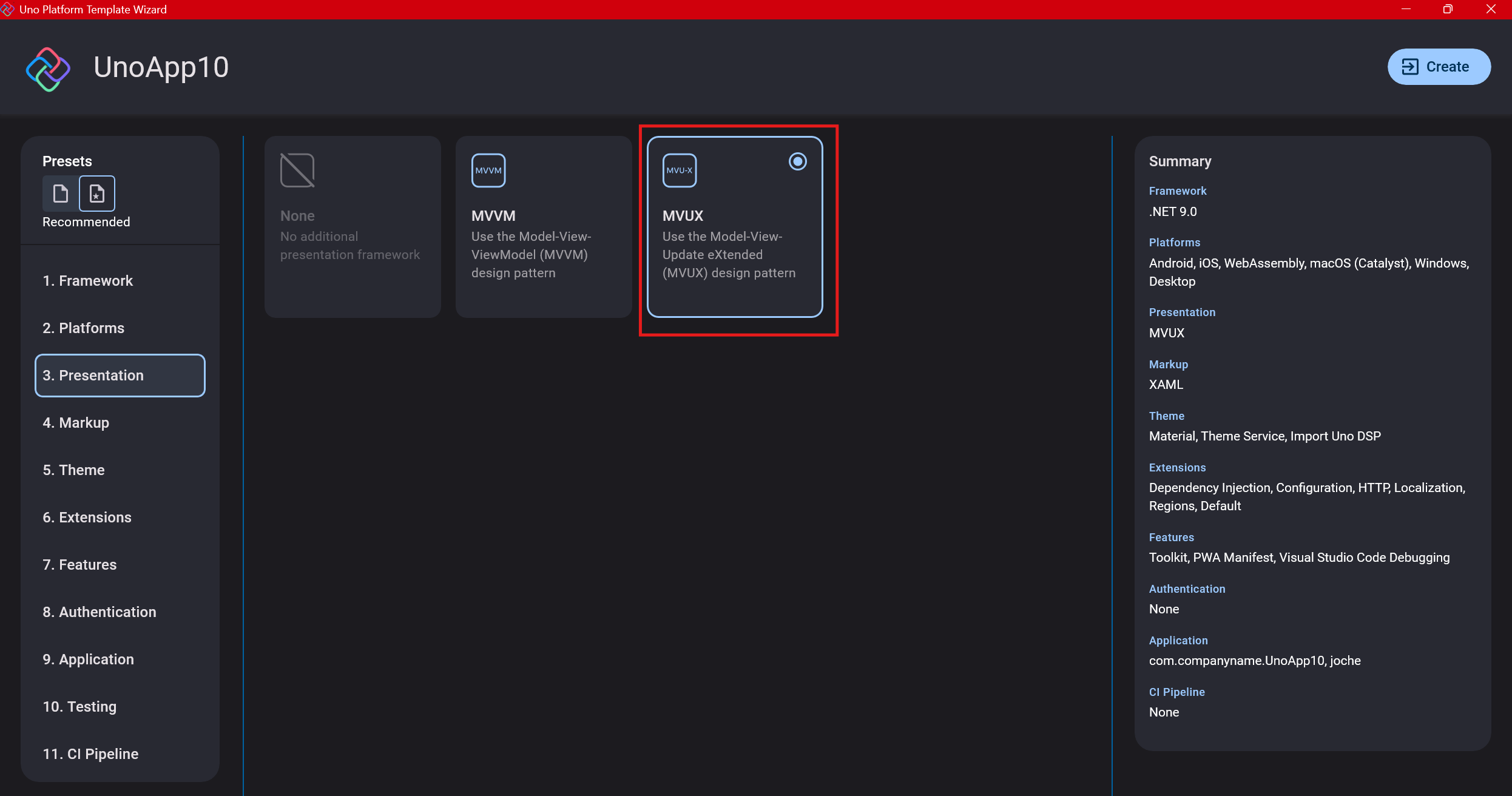

Step 5: Presentation Pattern

The next question is which presentation pattern you want to use. I would suggest MVUX, though I still have some doubts as I haven’t tried MVVM with Uno yet. MVVM is the more common pattern that most programmers understand, while MVUX is the new approach.

One challenge is that when you check the official Uno sample repository, the examples come in every presentation pattern flavor. Sometimes you’ll find a solution for your task in one pattern but not another, so you may need to translate between them. You’ll likely find more examples using MVVM.
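If you haven’t worked with MVVM before, this is roughly what the pattern looks like at its smallest. It is a sketch that uses only base .NET types. `GreetingViewModel` is a made-up name, and this is not the code the Uno templates generate, nor an MVUX example. The view binds to the properties, and the view model announces changes through `INotifyPropertyChanged`.

```csharp
using System.ComponentModel;
using System.Runtime.CompilerServices;

// Hypothetical view model for illustration; uses only base .NET types
// and is not the code generated by the Uno Platform templates.
public class GreetingViewModel : INotifyPropertyChanged
{
    private string _name = string.Empty;

    public event PropertyChangedEventHandler? PropertyChanged;

    // Intended to be bound two-way to an input control in the view.
    public string Name
    {
        get => _name;
        set
        {
            if (_name == value) return;          // no change, no notification
            _name = value;
            OnPropertyChanged();                 // notifies "Name"
            OnPropertyChanged(nameof(Greeting)); // Greeting depends on Name
        }
    }

    // Read-only property derived from Name.
    public string Greeting => _name.Length == 0 ? "Hello!" : $"Hello, {_name}!";

    private void OnPropertyChanged([CallerMemberName] string? propertyName = null) =>
        PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(propertyName));
}
```

When you translate a sample from one pattern to the other, this change-notification plumbing is usually the part that differs, while the XAML bindings tend to look similar.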

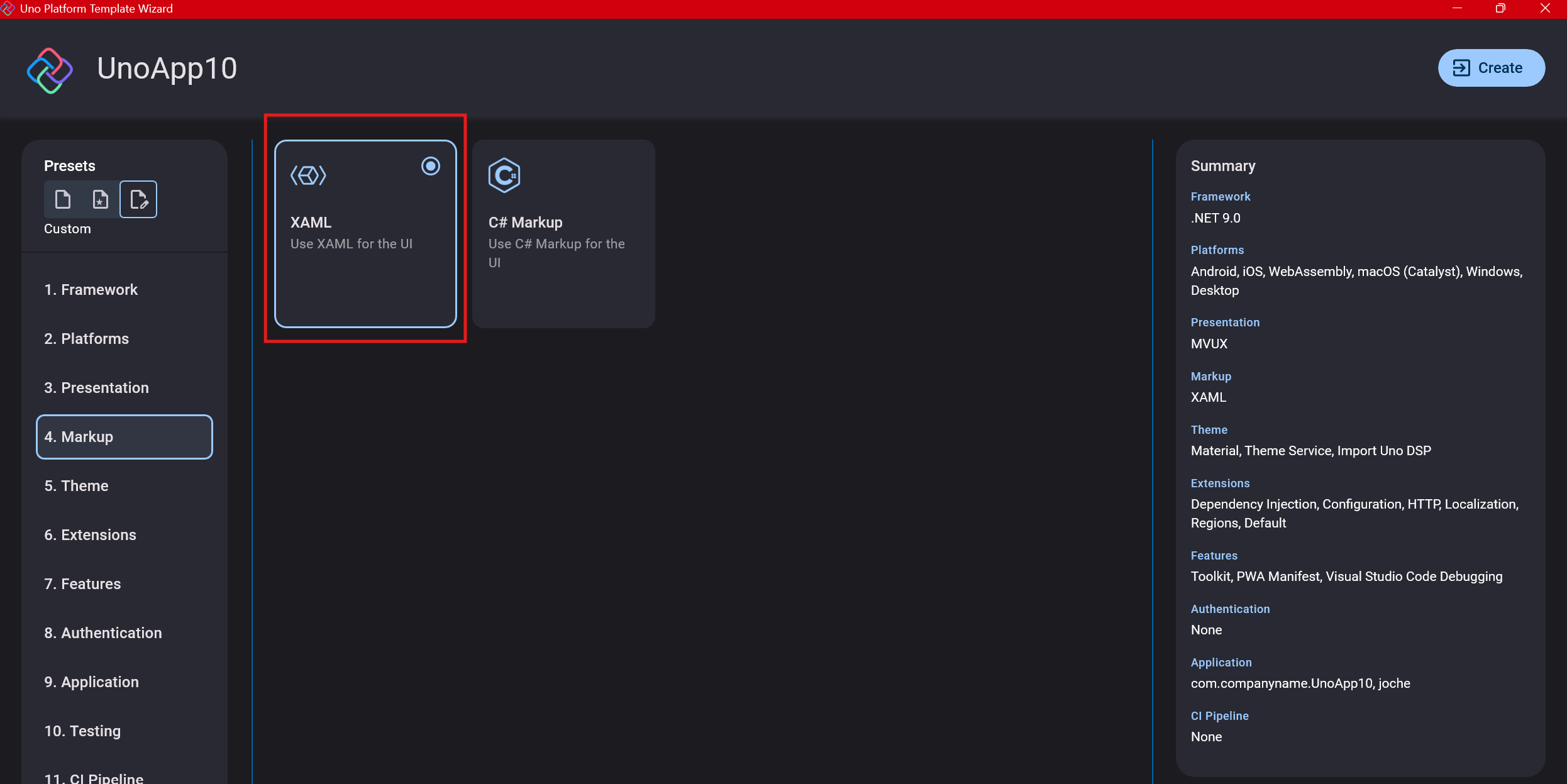

Step 6: Markup Language

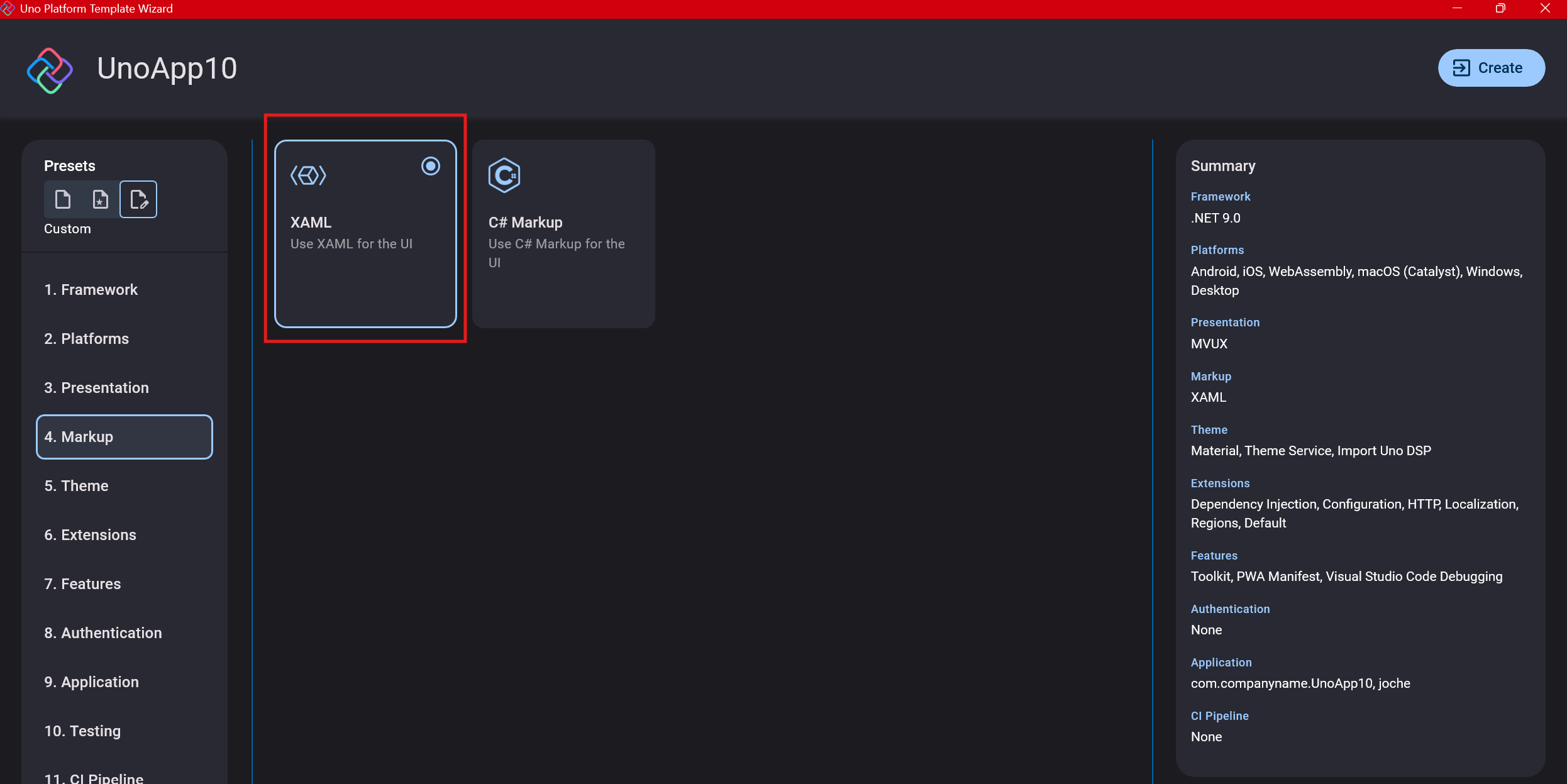

For markup, I recommend selecting XAML. In my first project, I tried using C# markup, which worked well until I reached some roadblocks I couldn’t overcome. I didn’t want to get stuck trying to solve one specific layout issue, so I switched. For beginners, I suggest starting with XAML.

Step 7: Theming

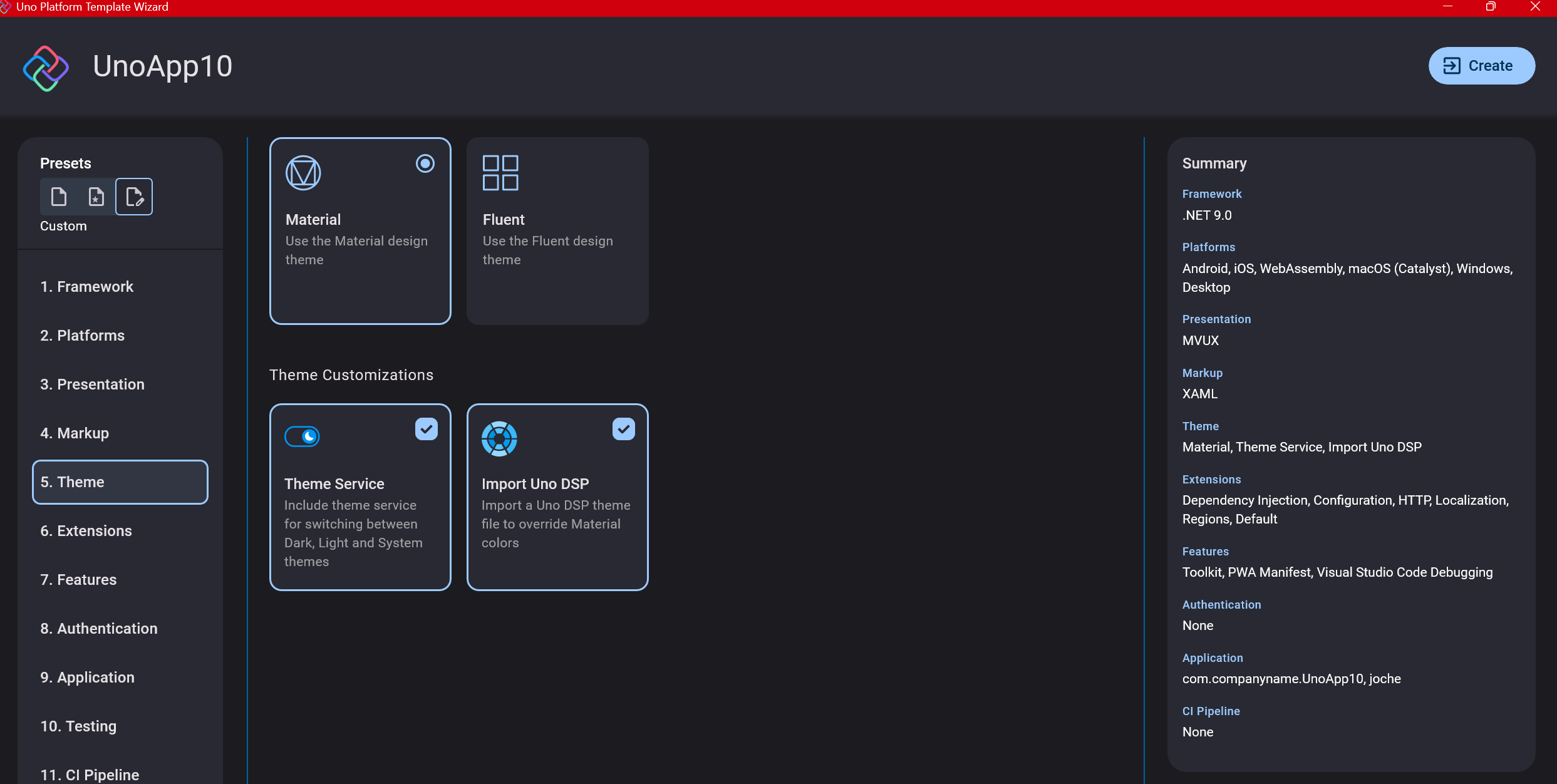

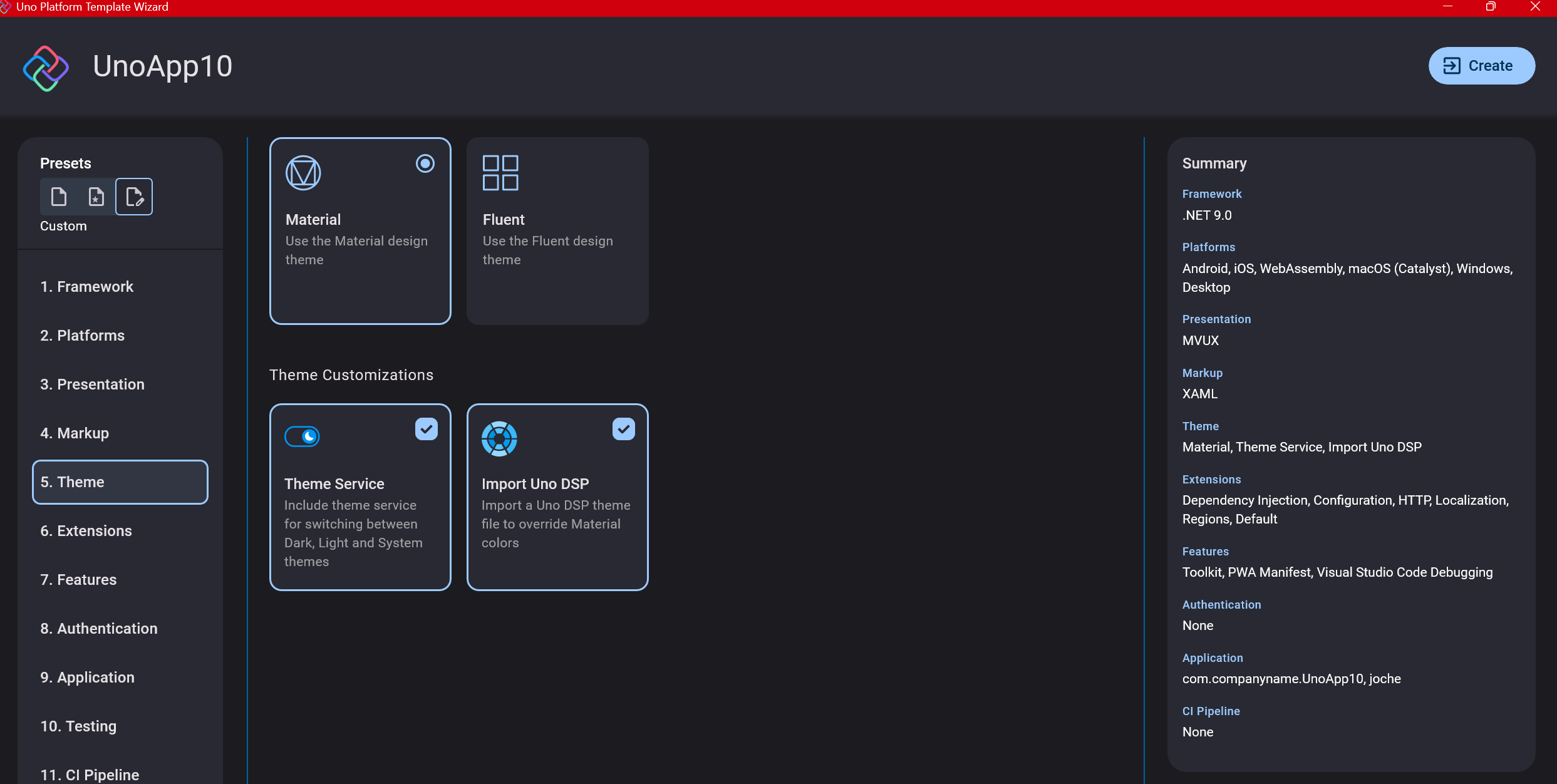

For theming, you’ll need to select a UI theme. I don’t have a strong preference here and typically stick with the defaults: using Material Design, the theme service, and importing Uno DSP.

Step 8: Extensions

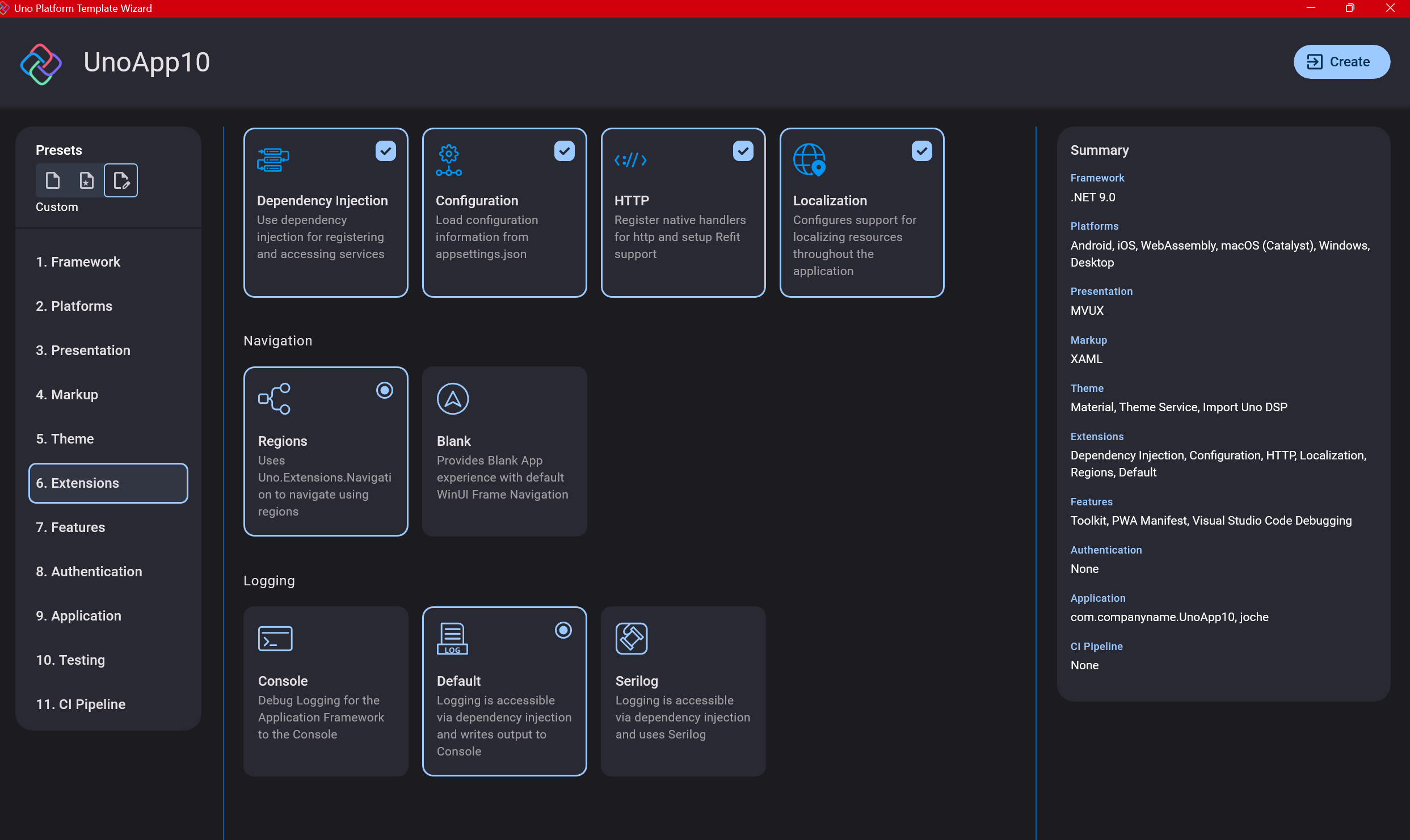

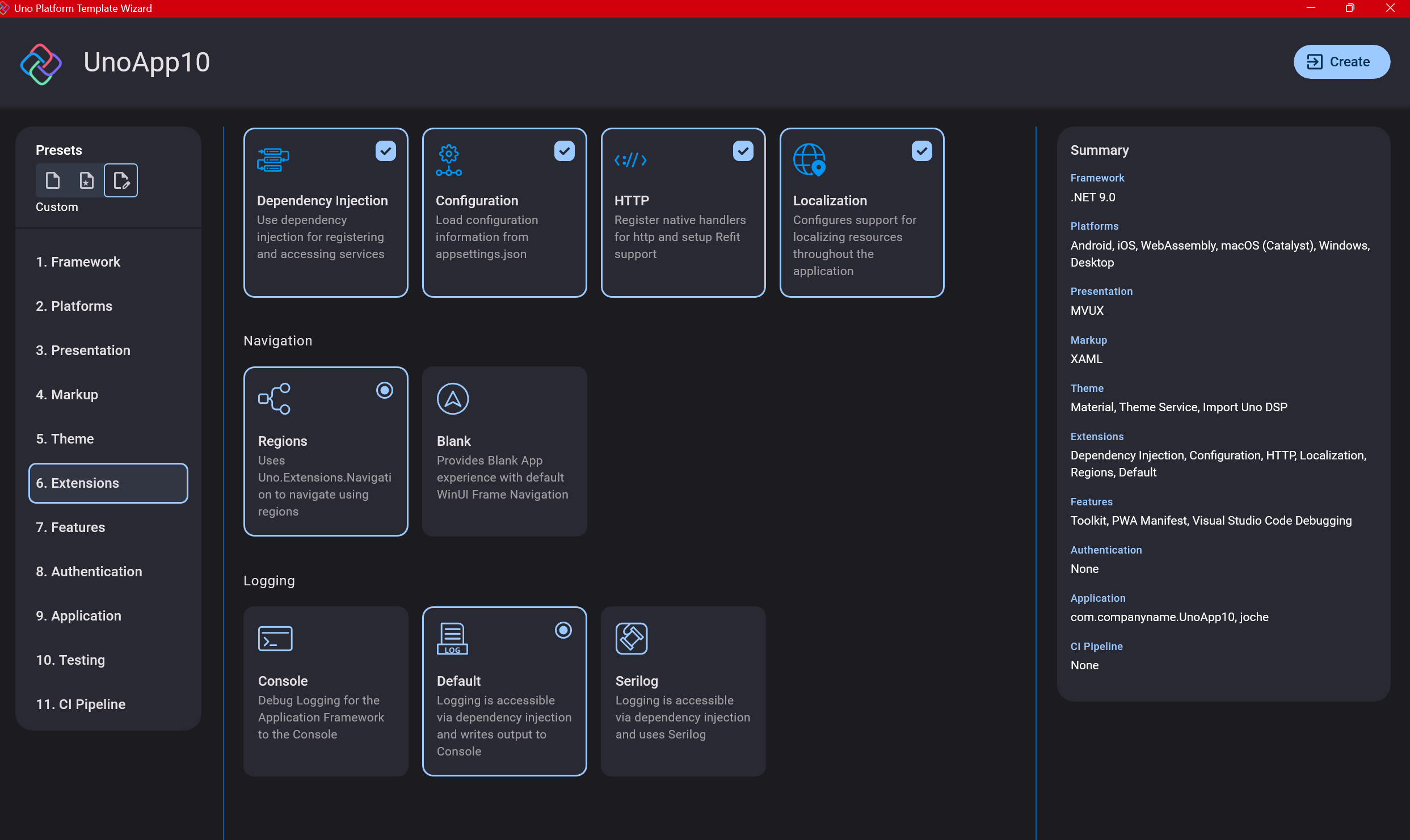

When selecting extensions to include, I recommend choosing almost all of them as they’re useful for modern application development. The only thing you might want to customize is the logging type (Console, Debug, or Serilog), depending on your previous experience. Generally, most applications will benefit from all the extensions offered.

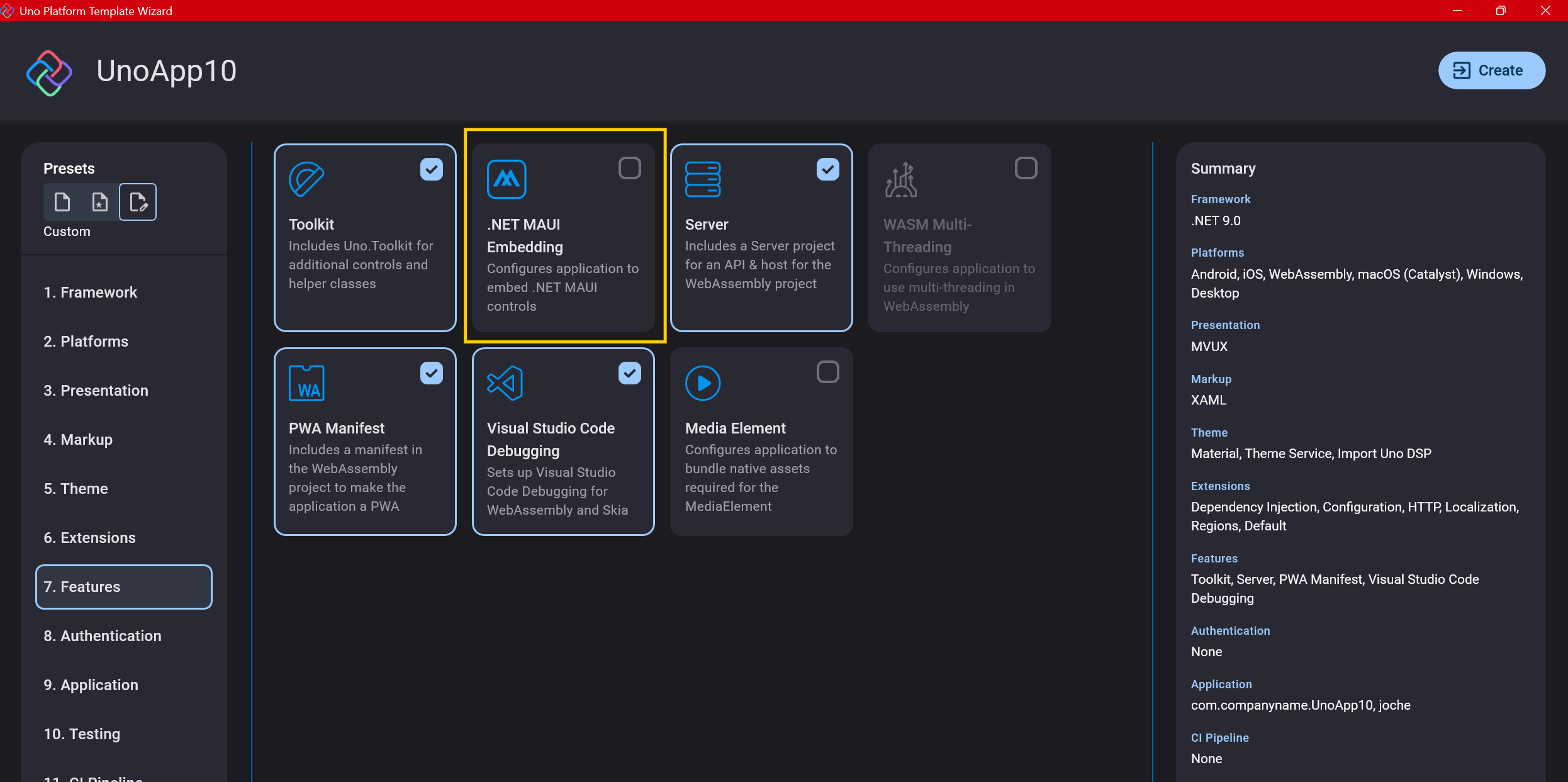

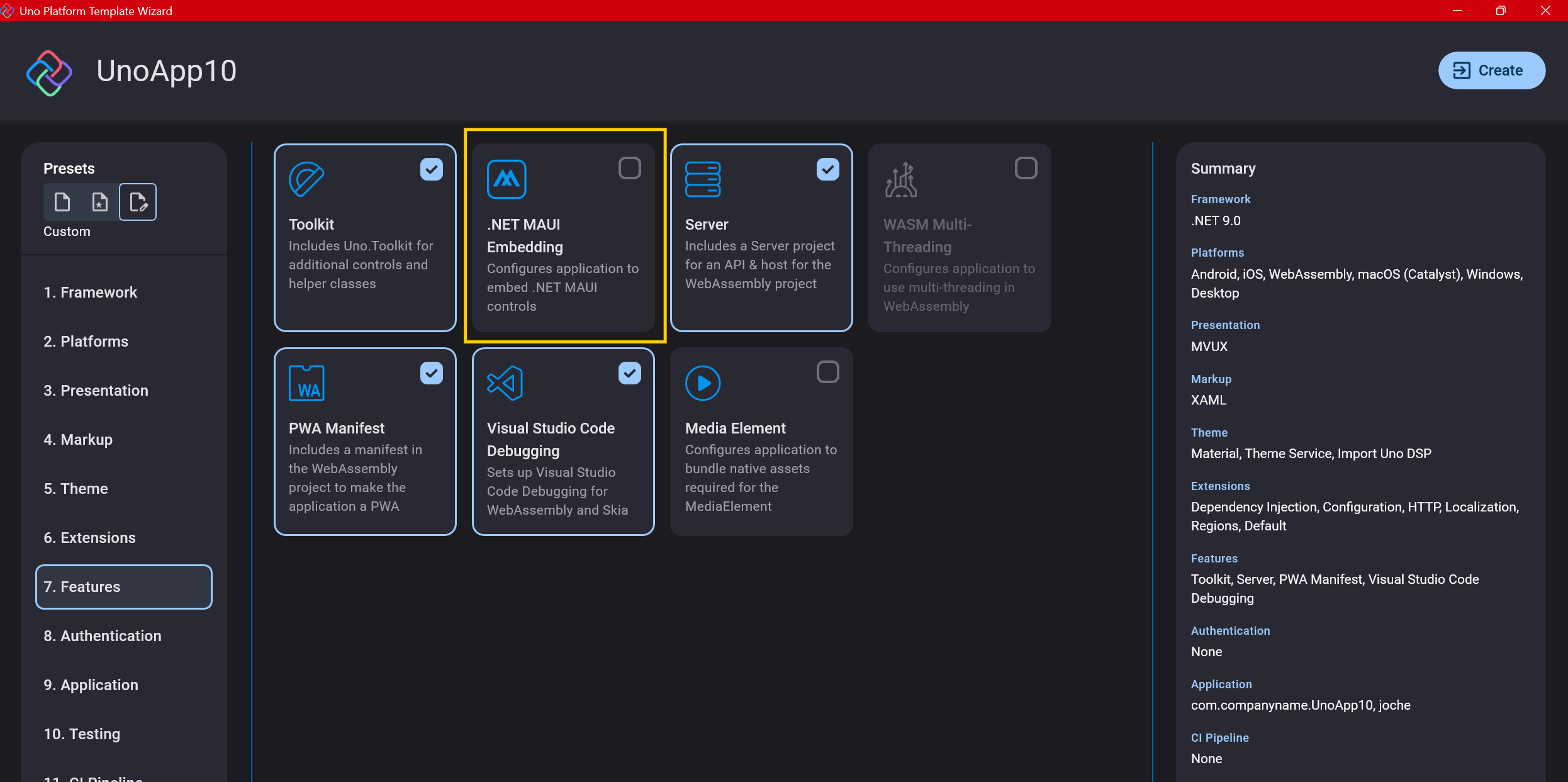

Step 9: Features

Next, you’ll select which features to include in your application. For my tests, I include everything except the MAUI embedding and the media element. Most features can be useful, and I’ll show in a future post how to set them up when discussing the solution structure.

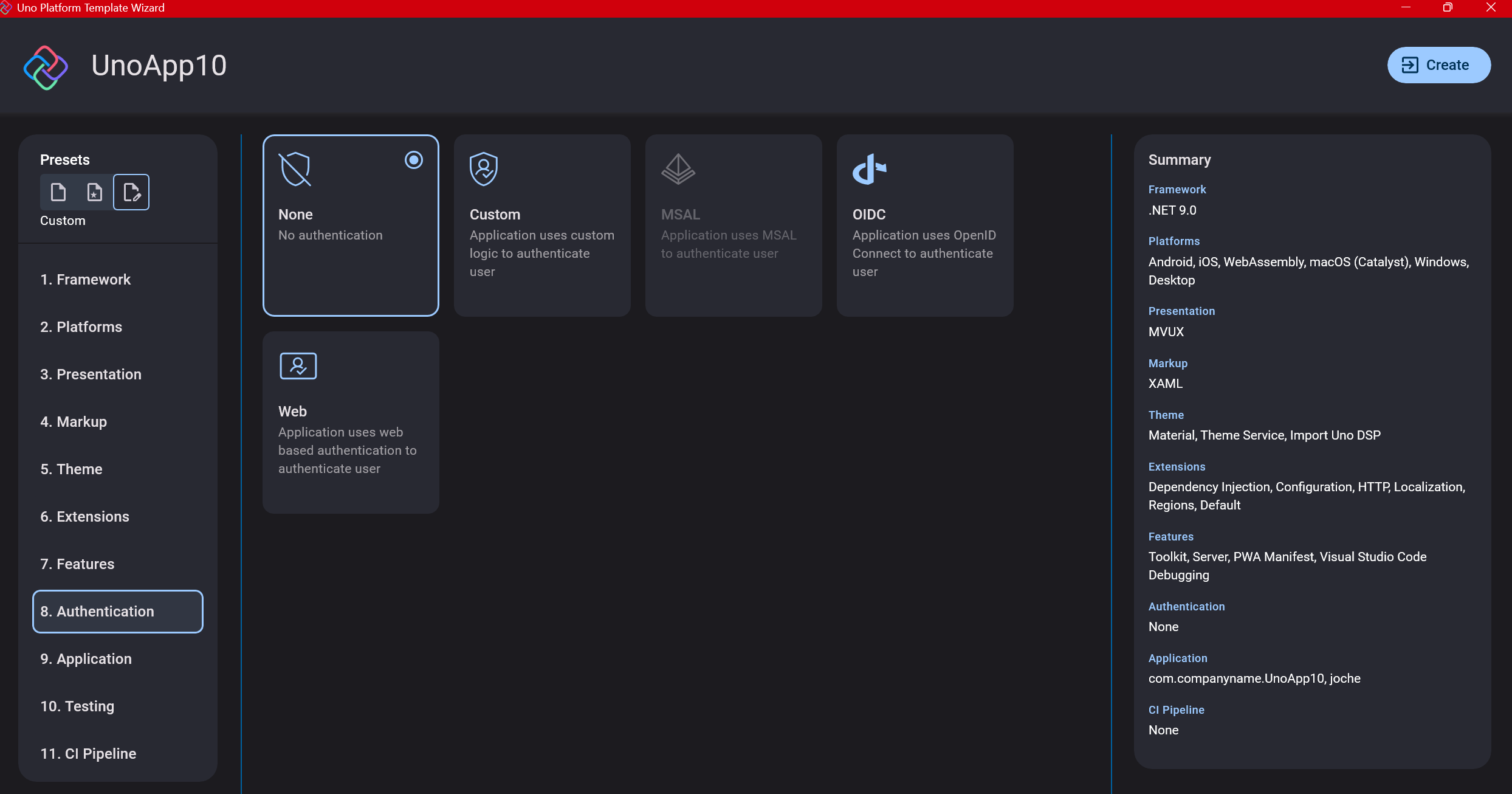

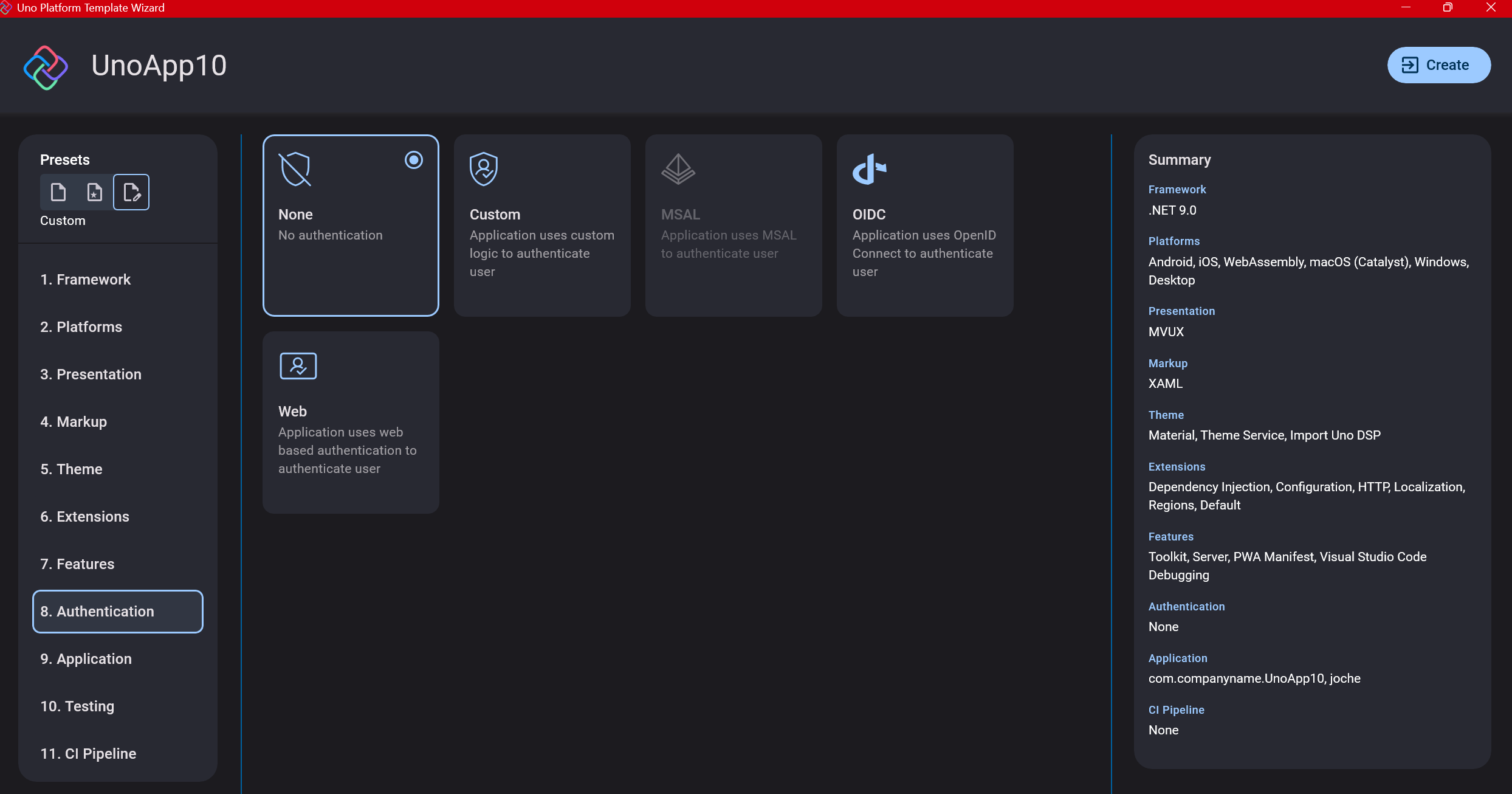

Step 10: Authentication

You can select “None” for authentication if you’re building test projects, but I chose “Custom” because I wanted to see how it works. In my case, I’m authenticating against DevExpress XAF REST API, but I’m also interested in connecting my test project to Azure B2C.

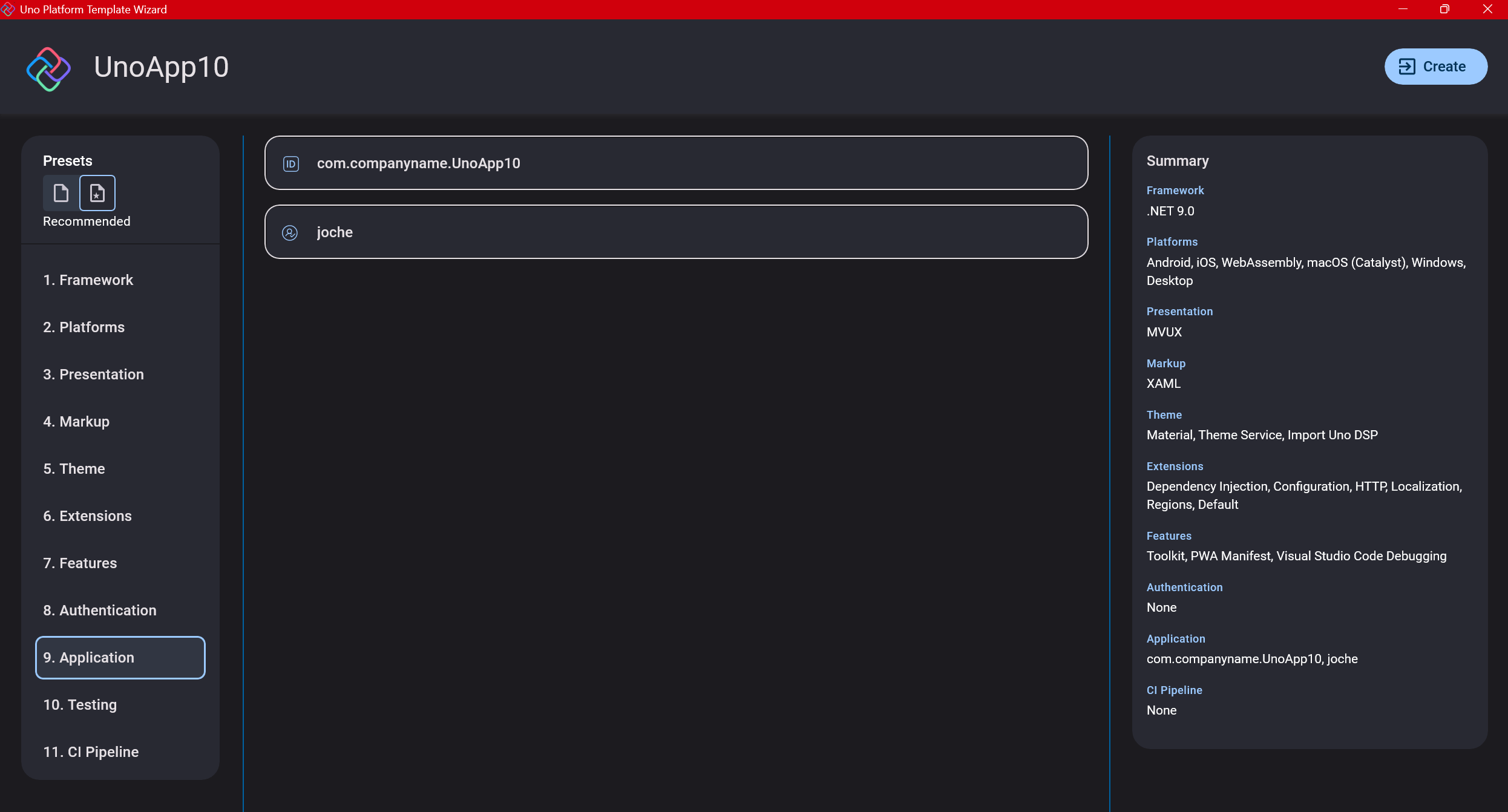

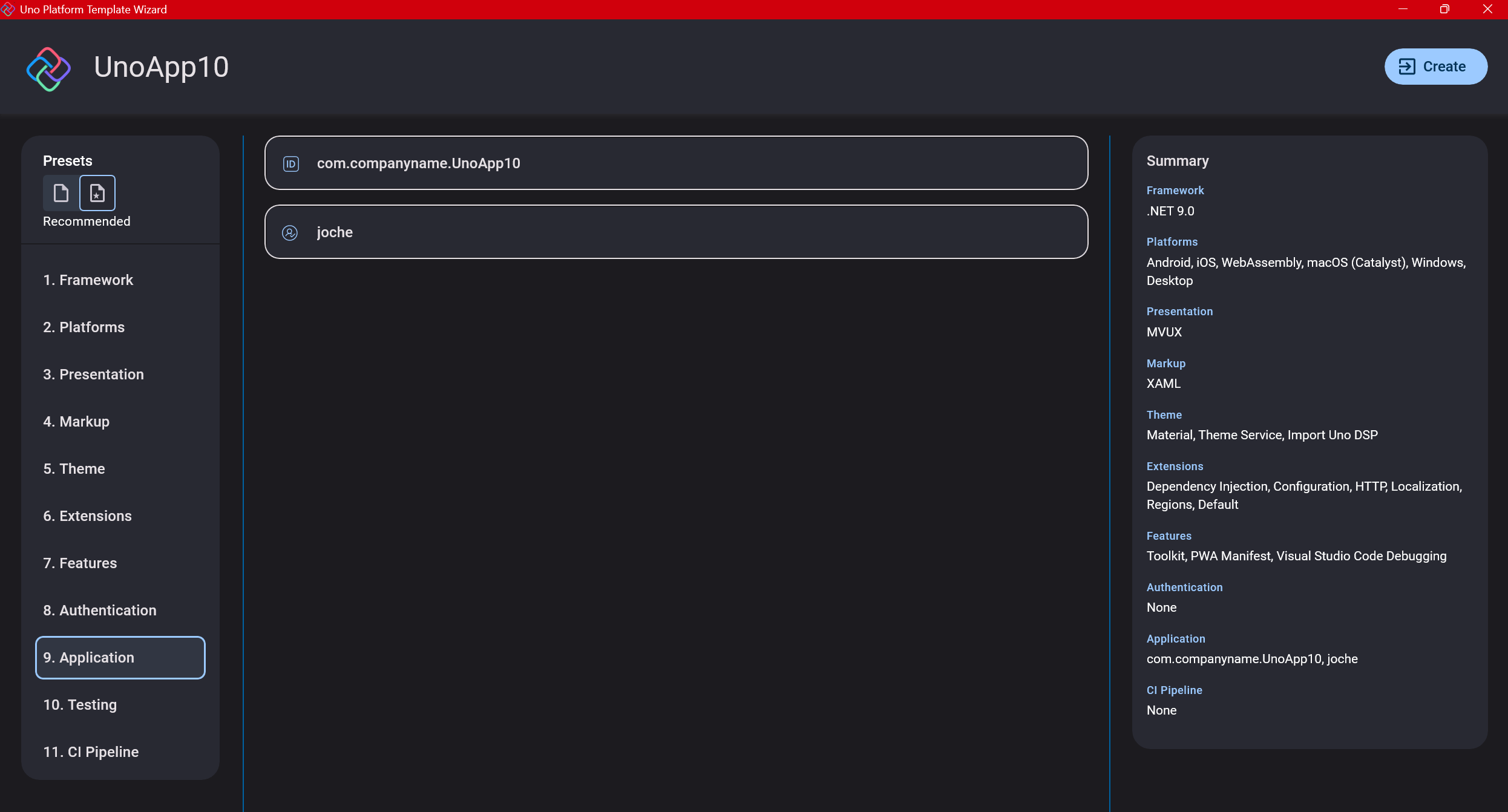

Step 11: Application ID

Next, you’ll need to provide an application ID. While I haven’t fully explored the purpose of this ID yet, I believe it’s needed when publishing applications to app stores like Google Play and the Apple App Store.

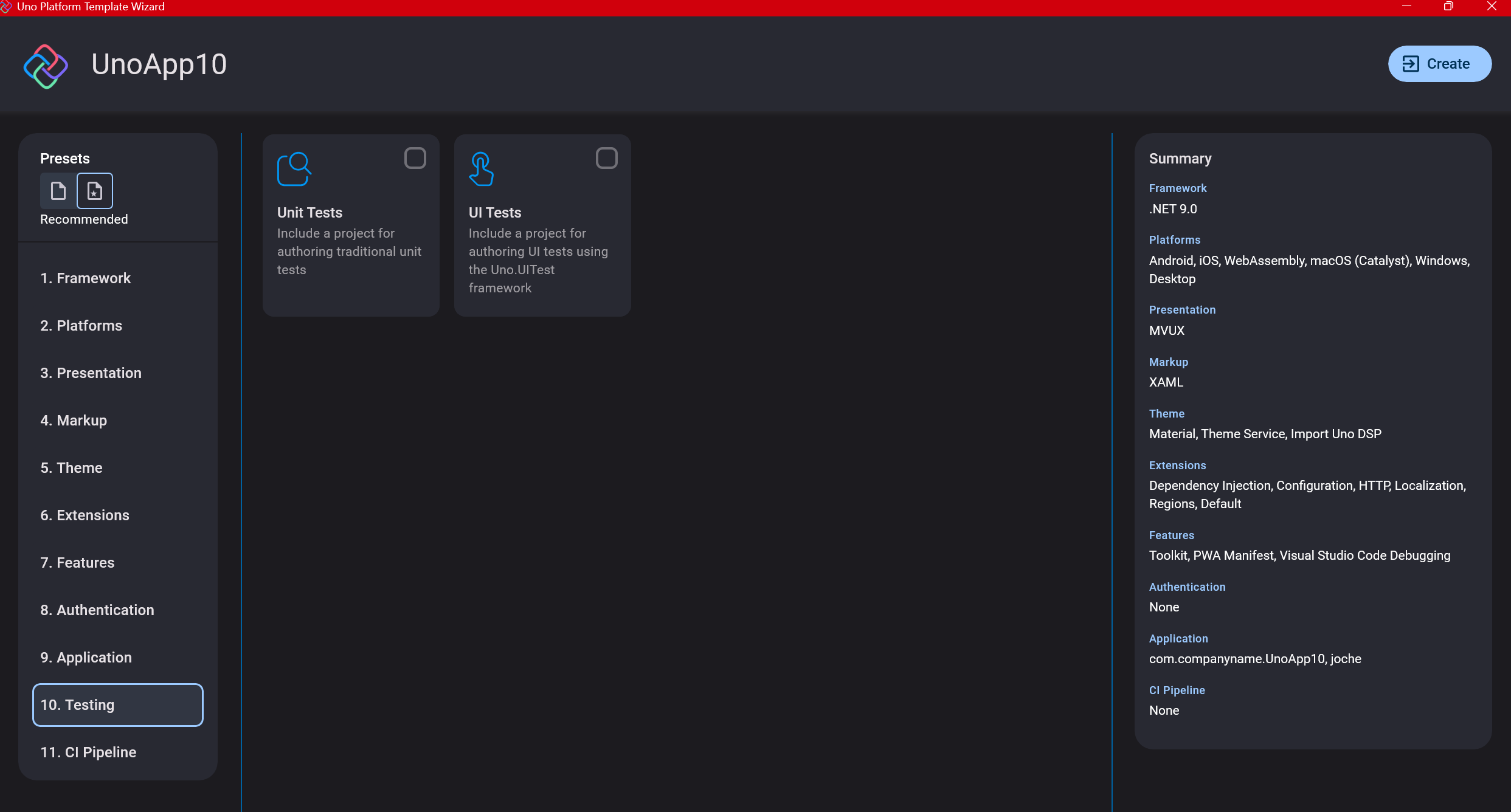

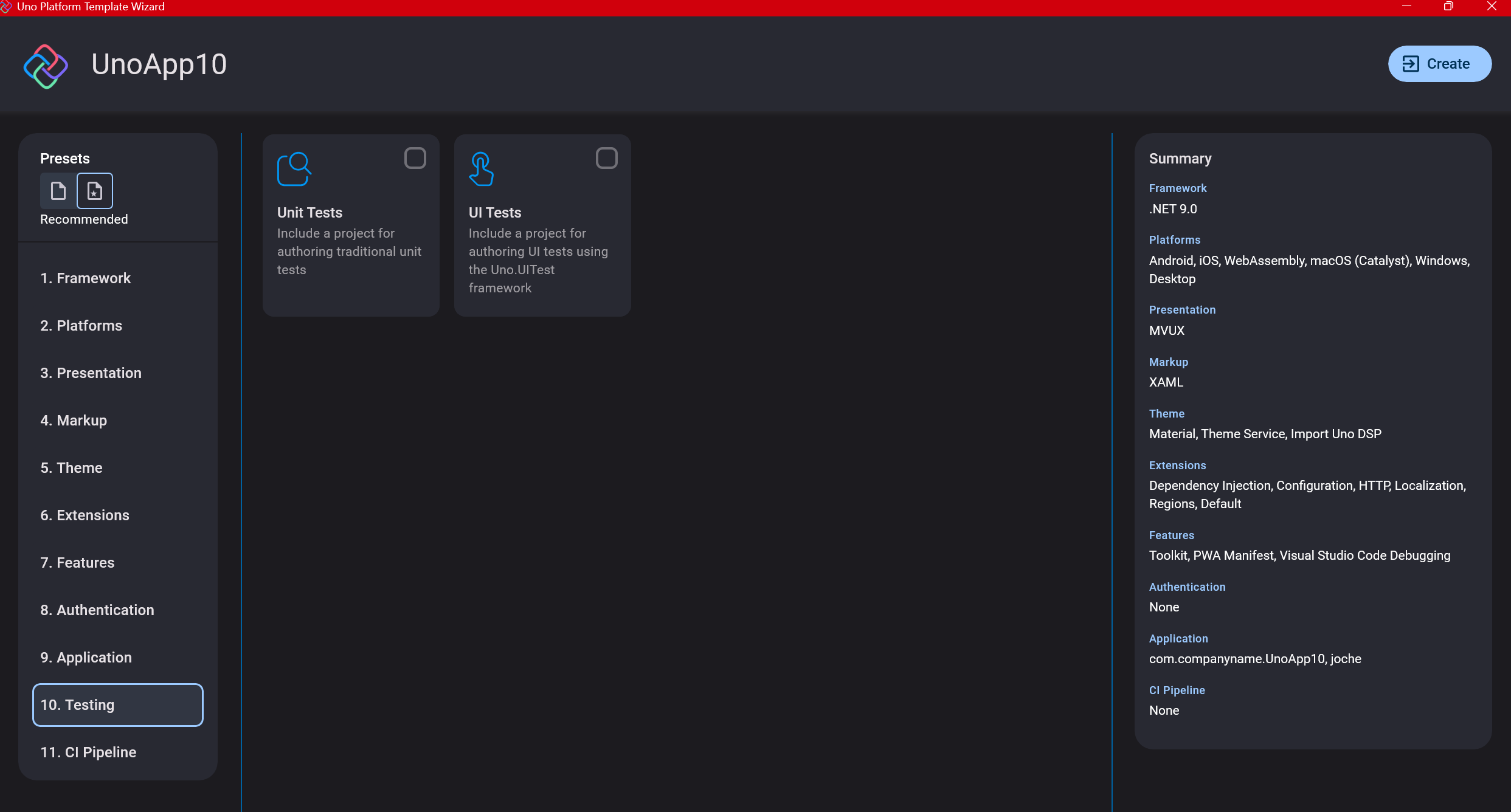

Step 12: Testing

I’m a big fan of testing, particularly integration tests. While unit tests are essential when developing components, for business applications, integration tests that verify the flow are often sufficient.

Uno also offers UI testing capabilities, which I haven’t tried yet but am looking forward to exploring. In cross-platform UI development, there aren’t many choices for UI testing, so having something built-in is fantastic.

Testing might seem like a waste of time initially, but once you have tests in place, you’ll save time in the future. With each iteration or new release, you can run all your tests to ensure everything works correctly. The time invested in creating tests upfront pays off during maintenance and updates.

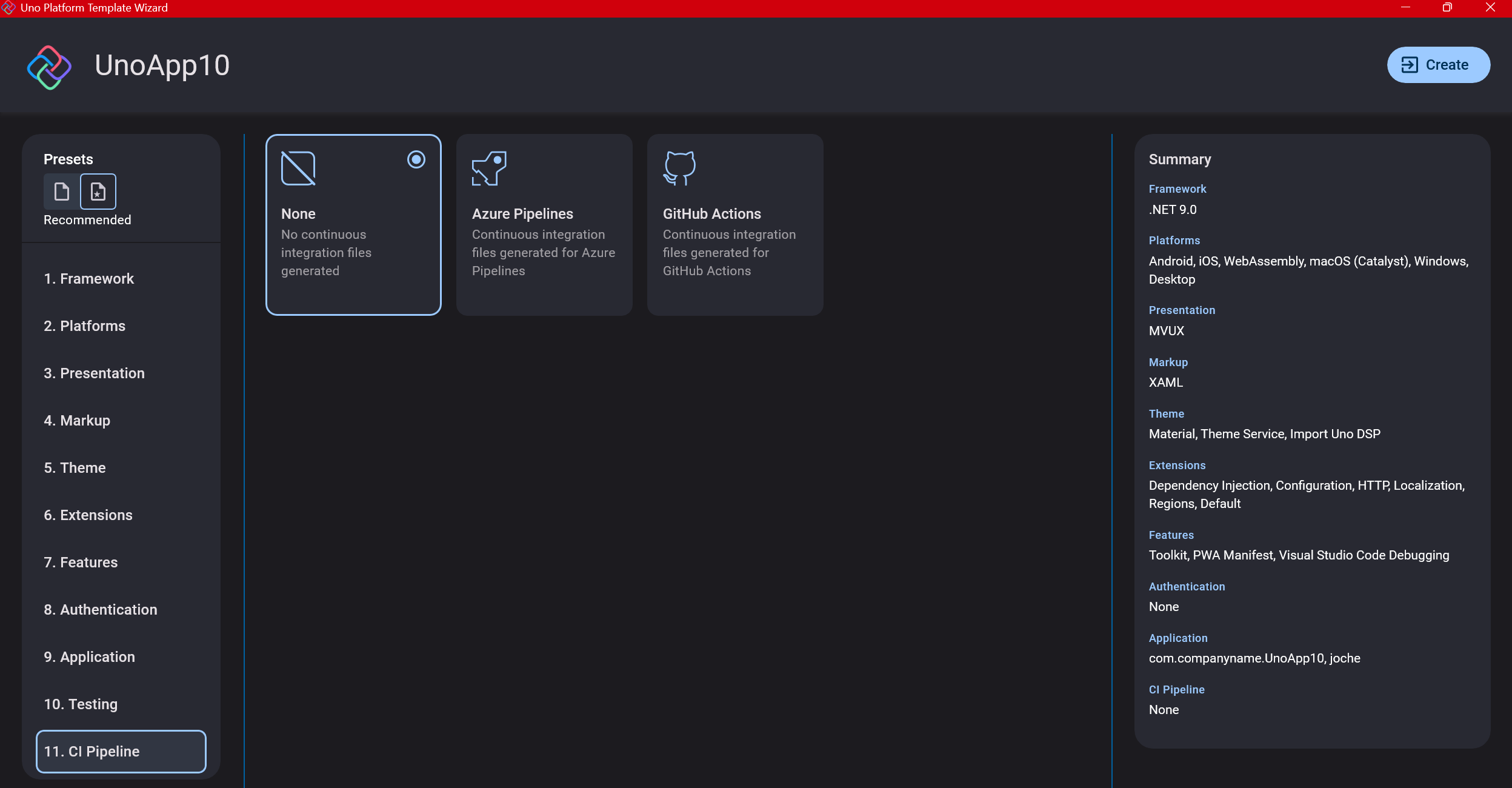

Step 13: CI Pipelines

The final step is about CI pipelines. If you’re building a test application, you don’t need to select anything. For production applications, you can choose Azure Pipelines or GitHub Actions based on your preferences. In my case, I’m not involved with CI pipeline configuration at my workplace, so I have limited experience in this area.

Conclusion

If you’ve made it this far, congratulations! You should now have a shiny new Uno Platform application in your IDE.

This post only covers the initial setup choices when creating a new Uno application. Your development path will differ based on the selections you’ve made, which can significantly impact how you write your code. Choose wisely and experiment with different combinations to see what works best for your needs.

During my learning journey with the Uno Platform, I’ve tried various settings—some worked well, others didn’t, but most will function if you understand what you’re doing. I’m still learning and taking a hands-on approach, relying on trial and error, occasional documentation checks, and GitHub Copilot assistance.

Thanks for reading and see you in the next post!

by Joche Ojeda | Mar 7, 2025 | Uncategorized, Uno Platform

This year I decided to learn something new, specifically something UI-related. Usually, I only write back-end code. Most of my code has no UI representation, and as you might know, that’s why I love XAF from Developer Express so much: I don’t have to write a UI. I only have to define the business model and the actions, and then I’m good to go.

But this time, I wanted to challenge myself, so I said, “OK, let’s learn something that is UI-related.” I’ve been using .NET for about 18 years already, so I wanted to branch out while still leveraging my existing knowledge.

I was trying to decide which technology to go with, so I checked with the people in my office (XARI). We have the .NET team, which is like 99% of the people, and then we have one React person and a couple of other developers using different frameworks. They suggested Flutter, and I thought, “Well, maybe.”

I checked the setup and tried to do it on my new Surface computer, but it just didn’t work. Even though Flutter looks fine, moving from .NET (which I’ve been writing since day one in 2002) to Dart is a big challenge. I mean, writing code in any case is a challenge, but I realized that Flutter was so far away from my current infrastructure and setup that I would likely learn it and then forget it because I wouldn’t use it regularly.

Then I thought about checking React, but it was kind of the same idea. I could go deep into this for like one month, and then I would totally forget it because I wouldn’t update the tooling, and so on.

So I decided to take another look at Uno Platform. We’ve used Uno Platform in the office before, and I love this multi-platform development approach. The only problem I had at that time was that the tooling wasn’t quite there yet. Sometimes it would compile, sometimes you’d get a lot of errors, and the static analysis would throw a lot of errors too. It was kind of hard—you’d spend a lot of time setting up your environment, and compilation was kind of slow.

But when I decided to take a look again recently, I remembered that about a year ago they released new project templates and platform extensions that help with the setup of your environment. So I tried it, and it worked well! I have two clean setups right now: my new Surface computer that I reset maybe three weeks ago, and my old MSI computer with 64 gigabytes of RAM. These gave me good places to test.

I decided to go to the Uno Platform page and follow the “Getting Started” guide. The first thing you need to do is use some commands to install a tool that checks your setup to see if you have all the necessary workloads. That was super simple. Then you have to add the extension to Visual Studio—I’m using Visual Studio in this case just to add the project templates. You can do this in Rider or Visual Studio Code as well, but the traditional Visual Studio is my tool of preference.

Uno Platform – Visual Studio Marketplace

Setup your environment with uno check

After completing all the setup, you get a menu with a lot of choices, but they give you a set of recommended options that follow best practices. That’s really nice because you don’t have to think too much about it. After that, I created a few projects. The first time I compiled them, it took a little bit, but then it was just like magic—they compiled extremely fast!

You have all these choices to run your app on: WebAssembly, Windows UI, Android, and iOS, and it works perfectly. I fell in love again, especially because the tooling is actually really solid right now. You don’t have to struggle to make it work.

Since then, I’ve been checking the examples and trying to write some code, and so far, so good. I guess my new choice for a UI framework will be Uno because it builds on my current knowledge of .NET and C#. I can take advantage of the tools I already have, and I don’t have to switch languages. I just need to learn a new paradigm.

I will write a series of articles about all my adventures with Uno Platform. I’ll share links about getting started, and after this, I’ll create some sample applications addressing the challenges that app developers face: how to implement navigation, how to register services, how to work with the Model-View-ViewModel pattern, and so on.

I would like to document every challenge I encounter, and I hope that you can join me in these Uno adventures!
