by Joche Ojeda | Jun 26, 2025 | EfCore

What is the N+1 Problem?

Imagine you’re running a blog website and want to display a list of all blogs along with how many posts each one has. The N+1 problem is a common database performance issue. Your application runs one query to load the N blogs, then one additional query per blog to load its posts. That is N + 1 database round trips for information a single query could return.

Our Test Database Setup

Our test suite creates a realistic blog scenario with:

- 3 different blogs

- Multiple posts for each blog

- Comments on posts

- Tags associated with blogs

This mirrors real-world applications where data is interconnected and needs to be loaded efficiently.

Test Case 1: The Classic N+1 Problem (Lazy Loading)

What it does: This test demonstrates how “lazy loading” can accidentally create the N+1 problem. Lazy loading sounds helpful – it automatically fetches related data when you need it. But this convenience comes with a hidden cost.

The Code:

```csharp
[Test]
public void Test_N_Plus_One_Problem_With_Lazy_Loading()
{
    var blogs = _context.Blogs.ToList(); // Query 1: Load blogs

    foreach (var blog in blogs)
    {
        var postCount = blog.Posts.Count; // Each access triggers a query!
        TestLogger.WriteLine($"Blog: {blog.Title} - Posts: {postCount}");
    }
}
```

The SQL Queries Generated:

```sql
-- Query 1: Load all blogs
SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title"
FROM "Blogs" AS "b"

-- Query 2: Load posts for Blog 1 (triggered by lazy loading)
SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
FROM "Posts" AS "p"
WHERE "p"."BlogId" = 1

-- Query 3: Load posts for Blog 2 (triggered by lazy loading)
SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
FROM "Posts" AS "p"
WHERE "p"."BlogId" = 2

-- Query 4: Load posts for Blog 3 (triggered by lazy loading)
SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
FROM "Posts" AS "p"
WHERE "p"."BlogId" = 3
```

The Problem: 4 total queries (1 + 3) – Each time you access blog.Posts.Count, lazy loading triggers a separate database trip.
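Test Case 1 only produces these extra queries when lazy loading is actually enabled, because EF Core does not lazy-load by default. Below is a minimal configuration sketch. It assumes SQLite (the double-quoted identifiers in the SQL suggest it) and the Microsoft.EntityFrameworkCore.Sqlite and Microsoft.EntityFrameworkCore.Proxies packages. The entity classes are trimmed to blogs and posts and reconstructed from the SQL columns. `BloggingContext` and the connection string are hypothetical names, not the author's test setup. `LogTo` prints each SQL statement, which makes the extra round trips visible.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.EntityFrameworkCore;
using Microsoft.Extensions.Logging;

// Hypothetical entities reconstructed from the columns in the SQL output.
public class Blog
{
    public int Id { get; set; }
    public string Title { get; set; } = "";
    public string? Description { get; set; }
    public DateTime CreatedDate { get; set; }

    // Must be virtual so the lazy-loading proxy can override it.
    public virtual ICollection<Post> Posts { get; set; } = new List<Post>();
}

public class Post
{
    public int Id { get; set; }
    public int BlogId { get; set; }
    public string Title { get; set; } = "";
    public string? Content { get; set; }
    public DateTime PublishedDate { get; set; }

    // Proxies require every navigation property to be virtual.
    public virtual Blog Blog { get; set; } = null!;
}

// Hypothetical context; the article's tests refer to an instance as _context.
public class BloggingContext : DbContext
{
    public DbSet<Blog> Blogs => Set<Blog>();
    public DbSet<Post> Posts => Set<Post>();

    protected override void OnConfiguring(DbContextOptionsBuilder options) =>
        options
            .UseLazyLoadingProxies()                          // Microsoft.EntityFrameworkCore.Proxies
            .UseSqlite("Data Source=blogs.db")                // assumed provider and connection string
            .LogTo(Console.WriteLine, LogLevel.Information);  // print each executed SQL command
}
```

Without `UseLazyLoadingProxies()` (or an injected `ILazyLoader`), `blog.Posts` in Test Case 1 stays empty and no extra queries are issued. That is why the next test recreates the pattern by hand.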

Test Case 2: Alternative N+1 Demonstration

What it does: This test manually recreates the N+1 pattern to show exactly what’s happening, even if lazy loading isn’t enabled in the DbContext configuration.

The Code:

```csharp
[Test]
public void Test_N_Plus_One_Problem_Alternative_Approach()
{
    var blogs = _context.Blogs.ToList(); // Query 1

    foreach (var blog in blogs)
    {
        // This explicitly loads posts for THIS blog only (simulates lazy loading)
        var posts = _context.Posts.Where(p => p.BlogId == blog.Id).ToList();
        TestLogger.WriteLine($"Loaded {posts.Count} posts for blog {blog.Id}");
    }
}
```

The Lesson: This explicitly demonstrates the N+1 pattern with manual queries. The result is identical to lazy loading – one query per blog plus the initial blogs query.

Test Case 3: N+1 vs Include() – Side by Side Comparison

What it does: This is the money shot – a direct comparison showing the dramatic difference between the problematic approach and the solution.

The Bad Code (N+1):

```csharp
// BAD: N+1 Problem
var blogsN1 = _context.Blogs.ToList(); // Query 1

foreach (var blog in blogsN1)
{
    var posts = _context.Posts.Where(p => p.BlogId == blog.Id).ToList(); // Queries 2,3,4...
}
```

The Good Code (Include):

```csharp
// GOOD: Include() Solution
var blogsInclude = _context.Blogs
    .Include(b => b.Posts)
    .ToList(); // Single query with JOIN

foreach (var blog in blogsInclude)
{
    // No additional queries needed - data is already loaded!
    var postCount = blog.Posts.Count;
}
```

The SQL Queries:

Bad Approach (Multiple Queries):

-- Same 4 separate queries as shown in Test Case 1

Good Approach (Single Query):

```sql
SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title",
       "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
FROM "Blogs" AS "b"
LEFT JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId"
ORDER BY "b"."Id"
```

Results from our test:

- Bad approach: 4 total queries (1 + 3)

- Good approach: 1 total query

- Performance improvement: 75% fewer database round trips!

Test Case 4: Guaranteed N+1 Problem

What it does: This test removes any doubt by explicitly demonstrating the N+1 pattern with clear step-by-step output.

The Code:

```csharp
[Test]
public void Test_Guaranteed_N_Plus_One_Problem()
{
    var blogs = _context.Blogs.ToList(); // Query 1
    int queryCount = 1;

    foreach (var blog in blogs)
    {
        queryCount++;

        // This explicitly demonstrates the N+1 pattern
        var posts = _context.Posts.Where(p => p.BlogId == blog.Id).ToList();
        TestLogger.WriteLine($"Loading posts for blog '{blog.Title}' (Query #{queryCount})");
    }
}
```

Why it’s useful: This ensures we can always see the problem clearly by manually executing the problematic pattern, making it impossible to miss.
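Note that `queryCount` above is incremented by hand. It reports what the loop intends to send, not what EF Core actually sends. To make a test count real round trips, one option is an EF Core command interceptor. The sketch below is an assumption about how you might instrument the suite; `QueryCountingInterceptor` is a hypothetical name. It derives from `DbCommandInterceptor` and counts every command that opens a data reader, which covers the SELECTs in these examples. You would register an instance with `options.AddInterceptors(counter)` when configuring the context.

```csharp
using System.Data.Common;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.EntityFrameworkCore.Diagnostics;

// Counts commands EF Core executes through a data reader (i.e. queries).
public class QueryCountingInterceptor : DbCommandInterceptor
{
    private int _count;

    public int Count => Volatile.Read(ref _count);

    // Call before the code under test so earlier setup queries are ignored.
    public void Reset() => Interlocked.Exchange(ref _count, 0);

    public override InterceptionResult<DbDataReader> ReaderExecuting(
        DbCommand command,
        CommandEventData eventData,
        InterceptionResult<DbDataReader> result)
    {
        Interlocked.Increment(ref _count);
        return base.ReaderExecuting(command, eventData, result);
    }

    public override ValueTask<InterceptionResult<DbDataReader>> ReaderExecutingAsync(
        DbCommand command,
        CommandEventData eventData,
        InterceptionResult<DbDataReader> result,
        CancellationToken cancellationToken = default)
    {
        Interlocked.Increment(ref _count);
        return base.ReaderExecutingAsync(command, eventData, result, cancellationToken);
    }
}
```

A test could then call `counter.Reset()`, run the loop, and assert that `counter.Count` equals the number of blogs plus one. For the `Include()` version the expected count would be exactly 1.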

Test Case 5: Eager Loading with Include()

What it does: Shows the correct way to load related data upfront using Include().

The Code:

```csharp
[Test]
public void Test_Eager_Loading_With_Include()
{
    var blogsWithPosts = _context.Blogs
        .Include(b => b.Posts)
        .ToList();

    foreach (var blog in blogsWithPosts)
    {
        // No additional queries - data already loaded!
        TestLogger.WriteLine($"Blog: {blog.Title} - Posts: {blog.Posts.Count}");
    }
}
```

The SQL Query:

```sql
SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title",
       "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
FROM "Blogs" AS "b"
LEFT JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId"
ORDER BY "b"."Id"
```

The Benefit: One database trip loads everything. When you access blog.Posts.Count, the data is already there.

Test Case 6: Multiple Includes with ThenInclude()

What it does: Demonstrates loading deeply nested data – blogs → posts → comments – all in one query.

The Code:

```csharp
[Test]
public void Test_Multiple_Includes_With_ThenInclude()
{
    var blogsWithPostsAndComments = _context.Blogs
        .Include(b => b.Posts)
            .ThenInclude(p => p.Comments)
        .ToList();

    foreach (var blog in blogsWithPostsAndComments)
    {
        foreach (var post in blog.Posts)
        {
            // All data loaded in one query!
            TestLogger.WriteLine($"Post: {post.Title} - Comments: {post.Comments.Count}");
        }
    }
}
```

The SQL Query:

```sql
SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title",
       "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title",
       "c"."Id", "c"."Author", "c"."Content", "c"."CreatedDate", "c"."PostId"
FROM "Blogs" AS "b"
LEFT JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId"
LEFT JOIN "Comments" AS "c" ON "p"."Id" = "c"."PostId"
ORDER BY "b"."Id", "p"."Id"
```

The Challenge: Loading three levels of data in one optimized query instead of potentially hundreds of separate queries.

Test Case 7: Projection with Select()

What it does: Shows how to load only the specific data you actually need instead of entire objects.

The Code:

```csharp
[Test]
public void Test_Projection_With_Select()
{
    var blogData = _context.Blogs
        .Select(b => new
        {
            BlogTitle = b.Title,
            PostCount = b.Posts.Count(),
            RecentPosts = b.Posts
                .OrderByDescending(p => p.PublishedDate)
                .Take(2)
                .Select(p => new { p.Title, p.PublishedDate })
        })
        .ToList();
}
```

The SQL Query (from our test output):

```sql
SELECT "b"."Title", (
    SELECT COUNT(*)
    FROM "Posts" AS "p"
    WHERE "b"."Id" = "p"."BlogId"), "b"."Id", "t0"."Title", "t0"."PublishedDate", "t0"."Id"
FROM "Blogs" AS "b"
LEFT JOIN (
    SELECT "t"."Title", "t"."PublishedDate", "t"."Id", "t"."BlogId"
    FROM (
        SELECT "p0"."Title", "p0"."PublishedDate", "p0"."Id", "p0"."BlogId",
               ROW_NUMBER() OVER(PARTITION BY "p0"."BlogId" ORDER BY "p0"."PublishedDate" DESC) AS "row"
        FROM "Posts" AS "p0"
    ) AS "t"
    WHERE "t"."row" <= 2
) AS "t0" ON "b"."Id" = "t0"."BlogId"
ORDER BY "b"."Id", "t0"."BlogId", "t0"."PublishedDate" DESC
```

Why it matters: This query only loads the specific fields needed, uses window functions for efficiency, and calculates counts in the database rather than loading full objects.

Test Case 8: Split Query Strategy

What it does: Demonstrates an alternative approach where one large JOIN is split into multiple optimized queries.

The Code:

```csharp
[Test]
public void Test_Split_Query()
{
    var blogs = _context.Blogs
        .AsSplitQuery()
        .Include(b => b.Posts)
        .Include(b => b.Tags)
        .ToList();
}
```

The SQL Queries (from our test output):

```sql
-- Query 1: Load blogs
SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title"
FROM "Blogs" AS "b"
ORDER BY "b"."Id"

-- Query 2: Load posts (automatically generated)
SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title", "b"."Id"
FROM "Blogs" AS "b"
INNER JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId"
ORDER BY "b"."Id"

-- Query 3: Load tags (automatically generated)
SELECT "t"."Id", "t"."Name", "b"."Id"
FROM "Blogs" AS "b"
INNER JOIN "BlogTag" AS "bt" ON "b"."Id" = "bt"."BlogsId"
INNER JOIN "Tags" AS "t" ON "bt"."TagsId" = "t"."Id"
ORDER BY "b"."Id"
```

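When to use it: When a single query would JOIN several collection navigations at once (here Posts and Tags), each blog row is repeated for every post-tag combination, so the result set grows multiplicatively. Split queries replace that one large query with several smaller ones, at the cost of extra round trips.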
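To see why the single JOIN can balloon, it helps to count rows. Consider two collection includes on the same blog (Posts and Tags). A single query with LEFT JOINs returns, per blog, one row for each post-tag combination, or one row when a collection is empty. The split version returns one row per blog, per post, and per blog-tag link. The sketch below computes both counts from hypothetical per-blog numbers. It is plain arithmetic, not EF Core code.

```csharp
using System;
using System.Linq;

public static class RowCountEstimator
{
    // Rows returned by one query that LEFT JOINs both Posts and Tags.
    // LEFT JOIN keeps a blog even when a collection is empty, hence Math.Max(1, ...).
    public static long SingleQueryRows((int Posts, int Tags)[] blogs) =>
        blogs.Sum(b => (long)Math.Max(1, b.Posts) * Math.Max(1, b.Tags));

    // Rows returned by the three split queries: blogs, posts, and blog-tag pairs.
    // The child queries use INNER JOINs, so empty collections contribute nothing.
    public static long SplitQueryRows((int Posts, int Tags)[] blogs) =>
        blogs.Length + blogs.Sum(b => (long)b.Posts) + blogs.Sum(b => (long)b.Tags);
}
```

For example, with three blogs that each have 50 posts and 10 tags, the single query returns 3 × 50 × 10 = 1,500 rows, while the split queries return 3 + 150 + 30 = 183. Per the EF Core documentation, the trade-off is that split queries are separate round trips without a consistency guarantee across them unless you wrap them in a transaction.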

Test Case 9: Filtered Include()

What it does: Shows how to load only specific related data – in this case, only recent posts from the last 15 days.

The Code:

```csharp
[Test]
public void Test_Filtered_Include()
{
    var cutoffDate = DateTime.Now.AddDays(-15);

    var blogsWithRecentPosts = _context.Blogs
        .Include(b => b.Posts.Where(p => p.PublishedDate > cutoffDate))
        .ToList();
}
```

The SQL Query:

```sql
SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title",
       "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
FROM "Blogs" AS "b"
LEFT JOIN "Posts" AS "p" ON "b"."Id" = "p"."BlogId" AND "p"."PublishedDate" > @cutoffDate
ORDER BY "b"."Id"
```

The Efficiency: Only loads posts that meet the criteria, reducing data transfer and memory usage.

Test Case 10: Explicit Loading

What it does: Demonstrates manually controlling when related data gets loaded.

The Code:

```csharp
[Test]
public void Test_Explicit_Loading()
{
    var blogs = _context.Blogs.ToList(); // Load blogs only

    // Now explicitly load posts for all blogs
    foreach (var blog in blogs)
    {
        _context.Entry(blog)
            .Collection(b => b.Posts)
            .Load();
    }
}
```

The SQL Queries:

```sql
-- Query 1: Load blogs
SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title"
FROM "Blogs" AS "b"

-- Query 2: Explicitly load posts for blog 1
SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
FROM "Posts" AS "p"
WHERE "p"."BlogId" = 1

-- Query 3: Explicitly load posts for blog 2
SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
FROM "Posts" AS "p"
WHERE "p"."BlogId" = 2

-- ... and so on
```

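When useful: When you sometimes need related data and sometimes don’t, so you control exactly when the additional database trip happens. Calling Load() inside a loop, as above, still issues one query per blog, which is the same N+1 pattern. Reserve explicit loading for a handful of entities, and use Include() when you need the related data for all of them.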

Test Case 11: Batch Loading Pattern

What it does: Shows a clever technique to avoid N+1 by loading all related data in one query, then organizing it in memory.

The Code:

```csharp
[Test]
public void Test_Batch_Loading_Pattern()
{
    var blogs = _context.Blogs.ToList(); // Query 1
    var blogIds = blogs.Select(b => b.Id).ToList();

    // Single query to get all posts for all blogs
    var posts = _context.Posts
        .Where(p => blogIds.Contains(p.BlogId))
        .ToList(); // Query 2

    // Group posts by blog in memory
    var postsByBlog = posts.GroupBy(p => p.BlogId).ToDictionary(g => g.Key, g => g.ToList());
}
```

The SQL Queries:

```sql
-- Query 1: Load all blogs
SELECT "b"."Id", "b"."CreatedDate", "b"."Description", "b"."Title"
FROM "Blogs" AS "b"

-- Query 2: Load ALL posts for ALL blogs in one query
SELECT "p"."Id", "p"."BlogId", "p"."Content", "p"."PublishedDate", "p"."Title"
FROM "Posts" AS "p"
WHERE "p"."BlogId" IN (1, 2, 3)
```

The Result: Just 2 queries total, regardless of how many blogs you have. Data organization happens in memory.
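The in-memory step deserves a closer look. A blog with no posts never appears as a key in the dictionary built by `ToDictionary`, so any code that indexes it by blog ID must handle a missing key. LINQ's `ToLookup` sidesteps that, because indexing a missing key returns an empty sequence. The sketch below swaps the EF entities for simplified, hypothetical `BlogRow`/`PostRow` records so it runs without a database.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-ins for the Blog and Post entities.
public record BlogRow(int Id, string Title);
public record PostRow(int Id, int BlogId, string Title);

public static class BatchLoading
{
    // Pairs each blog with its posts using one pre-loaded list of posts
    // (the result of "Query 2") instead of one query per blog.
    public static List<(string BlogTitle, int PostCount)> Summarize(
        IEnumerable<BlogRow> blogs, IEnumerable<PostRow> posts)
    {
        ILookup<int, PostRow> postsByBlog = posts.ToLookup(p => p.BlogId);

        // A missing key yields an empty sequence, so blogs without posts report 0.
        return blogs
            .Select(b => (BlogTitle: b.Title, PostCount: postsByBlog[b.Id].Count()))
            .ToList();
    }
}
```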

Test Case 12: Performance Comparison

What it does: Puts all the approaches head-to-head to show their relative performance.

The Code:

```csharp
[Test]
public void Test_Performance_Comparison()
{
    // N+1 Problem (Multiple Queries)
    var blogs1 = _context.Blogs.ToList();
    foreach (var blog in blogs1)
    {
        var count = blog.Posts.Count(); // Triggers a separate query when lazy loading is enabled
    }

    // Eager Loading (Single Query)
    var blogs2 = _context.Blogs
        .Include(b => b.Posts)
        .ToList();

    // Projection (Minimal Data)
    var blogSummaries = _context.Blogs
        .Select(b => new { b.Title, PostCount = b.Posts.Count() })
        .ToList();
}
```

The SQL Queries Generated:

N+1 Problem: 4 separate queries (as shown in previous examples)

Eager Loading: 1 JOIN query (as shown in Test Case 5)

Projection: 1 optimized query with subquery:

```sql
SELECT "b"."Title", (
    SELECT COUNT(*)
    FROM "Posts" AS "p"
    WHERE "b"."Id" = "p"."BlogId") AS "PostCount"
FROM "Blogs" AS "b"
```

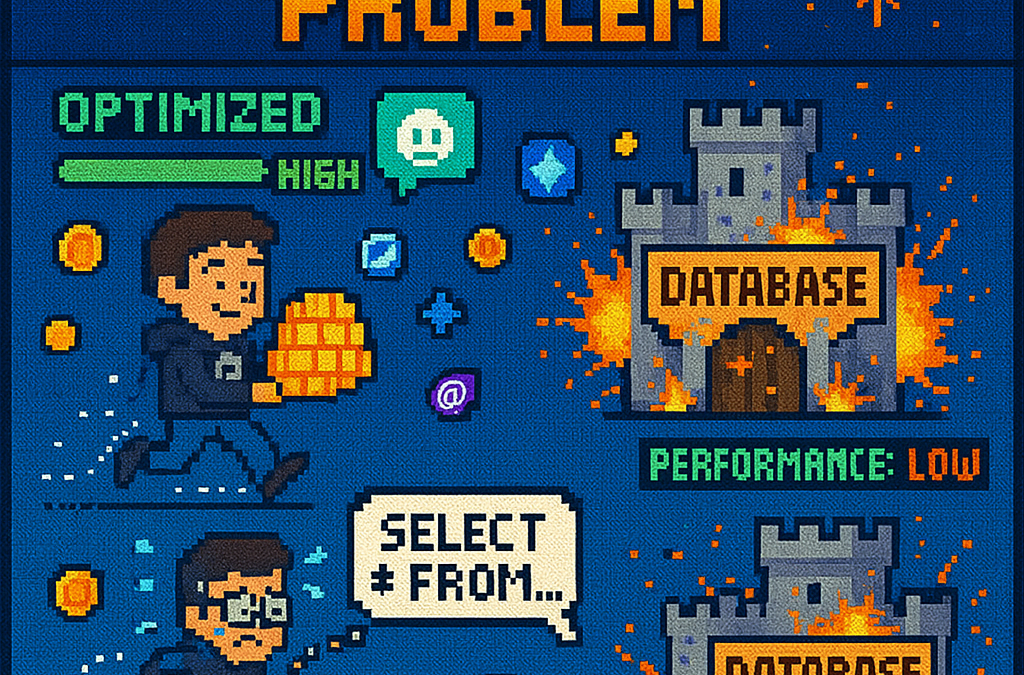

Real-World Performance Impact

Let’s scale this up to see why it matters. The timings below are illustrative and assume roughly 10 ms per database round trip:

Small Application (10 blogs):

- N+1 approach: 11 queries (≈110ms)

- Optimized approach: 1 query (≈10ms)

- Time saved: 100ms

Medium Application (100 blogs):

- N+1 approach: 101 queries (≈1,010ms)

- Optimized approach: 1 query (≈10ms)

- Time saved: 1 second

Large Application (1000 blogs):

- N+1 approach: 1001 queries (≈10,010ms)

- Optimized approach: 1 query (≈10ms)

- Time saved: 10 seconds
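These figures follow from simple arithmetic under the 10 ms assumption. They ignore the fact that a JOIN query costs more than a single-table query. The small helper below is a hypothetical sketch, not part of the test suite. It makes the assumption explicit so you can plug in your own measured latency.

```csharp
public static class RoundTripEstimate
{
    // N+1 pattern: one query for the parent rows plus one per parent.
    public static int NPlusOneQueries(int parentCount) => parentCount + 1;

    // Estimated time, assuming every query costs the same fixed latency.
    public static double EstimateMs(int queryCount, double msPerQuery) =>
        queryCount * msPerQuery;

    // Time saved by replacing the N+1 loop with a single query.
    public static double SavedMs(int parentCount, double msPerQuery) =>
        EstimateMs(NPlusOneQueries(parentCount), msPerQuery) - EstimateMs(1, msPerQuery);
}
```

With `msPerQuery = 10`, `SavedMs(10, 10)` gives 100 ms and `SavedMs(1000, 10)` gives 10,000 ms, matching the figures above. The saving grows linearly with the number of blogs.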

Key Takeaways

- The cost of the N+1 problem grows linearly with your data: every additional parent row adds another database round trip

- Lazy loading is convenient but dangerous – it can hide performance problems

- Include() is your friend for loading related data efficiently

- Projection is powerful when you only need specific fields

- Different problems need different solutions – there’s no one-size-fits-all approach

- SQL query inspection is crucial – always check what queries your ORM generates

The Bottom Line

This test suite shows that small changes in how you write database queries can transform a slow, database-heavy operation into a fast, efficient one. The difference between a frustrated user waiting 10 seconds for a page to load and a happy user getting instant results often comes down to understanding and avoiding the N+1 problem.

The beauty of these tests is that they use real database queries with actual SQL output, so you can see exactly what’s happening under the hood. Understanding these patterns will make you a more effective developer and help you build applications that stay fast as they grow.

You can find the source code for this article here.

by Joche Ojeda | May 12, 2025 | C#, SivarErp

Welcome back to our ERP development series! In previous articles, we’ve covered the foundational architecture, database design, and core entity structures for our accounting system. Today, we’re tackling an essential but often overlooked aspect of any enterprise software: data import and export capabilities.

Why is this important? Because no enterprise system exists in isolation. Companies need to move data between systems, migrate from legacy software, or simply handle batch data operations. In this article, we’ll build robust import/export services for the Chart of Accounts, demonstrating principles you can apply to any part of your ERP system.

The Importance of Data Exchange

Before diving into the code, let’s understand why dedicated import/export functionality matters:

- Data Migration – When companies adopt your ERP, they need to transfer existing data

- System Integration – ERPs need to exchange data with other business systems

- Batch Processing – Accountants often prepare data in spreadsheets before importing

- Backup & Transfer – Provides a simple way to backup or transfer configurations

- User Familiarity – Many users are comfortable working with CSV files

CSV (Comma-Separated Values) is our format of choice because it’s universally supported and easily edited in spreadsheet applications like Excel, which most business users are familiar with.

Our Implementation Approach

For our Chart of Accounts module, we’ll create:

- A service interface defining import/export operations

- A concrete implementation handling CSV parsing/generation

- Unit tests verifying all functionality

Our goal is to maintain clean separation of concerns, robust error handling, and clear validation rules.

Defining the Interface

First, we define a clear contract for our import/export service:

```csharp
/// <summary>
/// Interface for chart of accounts import/export operations
/// </summary>
public interface IAccountImportExportService
{
    /// <summary>
    /// Imports accounts from a CSV file
    /// </summary>
    /// <param name="csvContent">Content of the CSV file as a string</param>
    /// <param name="userName">User performing the operation</param>
    /// <returns>Collection of imported accounts and any validation errors</returns>
    Task<(IEnumerable<IAccount> ImportedAccounts, IEnumerable<string> Errors)> ImportFromCsvAsync(string csvContent, string userName);

    /// <summary>
    /// Exports accounts to a CSV format
    /// </summary>
    /// <param name="accounts">Accounts to export</param>
    /// <returns>CSV content as a string</returns>
    Task<string> ExportToCsvAsync(IEnumerable<IAccount> accounts);
}
```

Notice how we use C# tuples to return both the imported accounts and any validation errors from the import operation. This gives callers full insight into the operation’s results.

Implementing CSV Import

The import method is the more complex of the two, requiring:

- Parsing and validating the CSV structure

- Converting CSV data to domain objects

- Validating the created objects

- Reporting any errors along the way

Here’s our implementation approach:

```csharp
public async Task<(IEnumerable<IAccount> ImportedAccounts, IEnumerable<string> Errors)> ImportFromCsvAsync(string csvContent, string userName)
{
    List<AccountDto> importedAccounts = new List<AccountDto>();
    List<string> errors = new List<string>();

    if (string.IsNullOrEmpty(csvContent))
    {
        errors.Add("CSV content is empty");
        return (importedAccounts, errors);
    }

    try
    {
        // Split the CSV into lines
        string[] lines = csvContent.Split(new[] { "\r\n", "\r", "\n" }, StringSplitOptions.RemoveEmptyEntries);

        if (lines.Length <= 1)
        {
            errors.Add("CSV file contains no data rows");
            return (importedAccounts, errors);
        }

        // Assume first line is header
        string[] headers = ParseCsvLine(lines[0]);

        // Validate headers
        if (!ValidateHeaders(headers, errors))
        {
            return (importedAccounts, errors);
        }

        // Process data rows
        for (int i = 1; i < lines.Length; i++)
        {
            string[] fields = ParseCsvLine(lines[i]);

            if (fields.Length != headers.Length)
            {
                errors.Add($"Line {i + 1}: Column count mismatch. Expected {headers.Length}, got {fields.Length}");
                continue;
            }

            var account = CreateAccountFromCsvFields(headers, fields);

            // Validate account
            if (!_accountValidator.ValidateAccount(account))
            {
                errors.Add($"Line {i + 1}: Account validation failed for account {account.AccountName}");
                continue;
            }

            // Set audit information
            _auditService.SetCreationAudit(account, userName);

            importedAccounts.Add(account);
        }

        return (importedAccounts, errors);
    }
    catch (Exception ex)
    {
        errors.Add($"Error importing CSV: {ex.Message}");
        return (importedAccounts, errors);
    }
}
```

Key aspects of this implementation:

- Early validation – We quickly detect and report basic issues like empty input

- Row-by-row processing – Each line is processed independently, allowing partial success

- Detailed error reporting – We collect specific errors with line numbers

- Domain validation – We apply business rules from AccountValidator

- Audit trail – We set audit fields for each imported account

The ParseCsvLine method handles the complexities of CSV parsing, including quoted fields that may contain commas:

```csharp
private string[] ParseCsvLine(string line)
{
    List<string> fields = new List<string>();
    bool inQuotes = false;
    int startIndex = 0;

    for (int i = 0; i < line.Length; i++)
    {
        if (line[i] == '"')
        {
            inQuotes = !inQuotes;
        }
        else if (line[i] == ',' && !inQuotes)
        {
            fields.Add(line.Substring(startIndex, i - startIndex).Trim().TrimStart('"').TrimEnd('"'));
            startIndex = i + 1;
        }
    }

    // Add the last field
    fields.Add(line.Substring(startIndex).Trim().TrimStart('"').TrimEnd('"'));

    return fields.ToArray();
}
```
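`ParseCsvLine` handles commas inside quotes, but two cases can still trip it up. First, an escaped quote inside a quoted field, written as `""` per RFC 4180, comes back still doubled. Second, `TrimEnd('"')` strips every quote at the end of a field, so a value that legitimately ends in a quote loses it. For example, `"He said ""hi"""` becomes `He said ""hi`. A variant that unescapes doubled quotes is sketched below; `ParseCsvLineStrict` is a hypothetical name. Unlike the original, it does not trim whitespace. Like the original, it works on one physical line, so quoted fields containing line breaks would still be broken apart by the earlier split on newlines.

```csharp
using System.Collections.Generic;
using System.Text;

public static class CsvLineParser
{
    // Splits one CSV record into fields, honouring RFC 4180 quoting:
    // fields may be wrapped in double quotes, and "" inside a quoted field
    // stands for a single literal quote character.
    public static string[] ParseCsvLineStrict(string line)
    {
        var fields = new List<string>();
        var current = new StringBuilder();
        bool inQuotes = false;

        for (int i = 0; i < line.Length; i++)
        {
            char c = line[i];

            if (inQuotes)
            {
                if (c == '"')
                {
                    if (i + 1 < line.Length && line[i + 1] == '"')
                    {
                        current.Append('"'); // escaped quote
                        i++;                 // skip its second half
                    }
                    else
                    {
                        inQuotes = false;    // closing quote
                    }
                }
                else
                {
                    current.Append(c);       // commas are literal inside quotes
                }
            }
            else if (c == '"')
            {
                inQuotes = true;             // opening quote
            }
            else if (c == ',')
            {
                fields.Add(current.ToString());
                current.Clear();
            }
            else
            {
                current.Append(c);
            }
        }

        fields.Add(current.ToString());      // last field (may be empty)
        return fields.ToArray();
    }
}
```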

Implementing CSV Export

The export method is simpler, converting domain objects to CSV format:

```csharp
public Task<string> ExportToCsvAsync(IEnumerable<IAccount> accounts)
{
    if (accounts == null || !accounts.Any())
    {
        return Task.FromResult(GetCsvHeader());
    }

    StringBuilder csvBuilder = new StringBuilder();

    // Add header
    csvBuilder.AppendLine(GetCsvHeader());

    // Add data rows
    foreach (var account in accounts)
    {
        csvBuilder.AppendLine(GetCsvRow(account));
    }

    return Task.FromResult(csvBuilder.ToString());
}
```

We take special care to handle edge cases like null or empty collections, making the API robust against improper usage.
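`GetCsvHeader` and `GetCsvRow` aren’t shown. Whatever their exact shape, the row builder has to handle special characters. Any field containing a comma, a double quote, or a line break must be quoted, and embedded quotes must be doubled. Otherwise an account name such as `Cash, petty` would shift every later column on re-import. A minimal sketch of such a helper follows; `EscapeCsvField` and `JoinCsvRow` are hypothetical names, not part of the article’s service.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class CsvWriter
{
    // Quotes a field only when needed and doubles embedded quotes (RFC 4180 style).
    public static string EscapeCsvField(string? value)
    {
        if (string.IsNullOrEmpty(value))
            return string.Empty;

        bool needsQuotes = value.IndexOfAny(new[] { ',', '"', '\r', '\n' }) >= 0;

        return needsQuotes
            ? "\"" + value.Replace("\"", "\"\"") + "\""
            : value;
    }

    // Joins raw values into one CSV row, escaping each one.
    public static string JoinCsvRow(IEnumerable<string?> values) =>
        string.Join(",", values.Select(EscapeCsvField));
}
```

A `GetCsvRow` built this way would pass each account property, already converted to a string, through `JoinCsvRow`. That keeps the quoting rules in one place.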

Testing the Implementation

Our test suite verifies both the happy paths and various error conditions:

- Import validation – Tests for empty content, missing headers, etc.

- Export formatting – Tests for proper CSV generation, handling of special characters

- Round-trip integrity – Tests exporting and re-importing preserves data integrity

For example, here’s a round-trip test to verify data integrity:

```csharp
[Test]
public async Task RoundTrip_ExportThenImport_PreservesAccounts()
{
    // Arrange
    var originalAccounts = new List<IAccount>
    {
        new AccountDto
        {
            Id = Guid.NewGuid(),
            AccountName = "Cash",
            OfficialCode = "11000",
            AccountType = AccountType.Asset,
            // other properties...
        },
        new AccountDto
        {
            Id = Guid.NewGuid(),
            AccountName = "Accounts Receivable",
            OfficialCode = "12000",
            AccountType = AccountType.Asset,
            // other properties...
        }
    };

    // Act
    string csv = await _importExportService.ExportToCsvAsync(originalAccounts);
    var (importedAccounts, errors) = await _importExportService.ImportFromCsvAsync(csv, "Test User");

    // Assert
    Assert.That(errors, Is.Empty);
    Assert.That(importedAccounts.Count(), Is.EqualTo(originalAccounts.Count));

    // Check first account
    var firstOriginal = originalAccounts[0];
    var firstImported = importedAccounts.First();

    Assert.That(firstImported.AccountName, Is.EqualTo(firstOriginal.AccountName));
    Assert.That(firstImported.OfficialCode, Is.EqualTo(firstOriginal.OfficialCode));
    Assert.That(firstImported.AccountType, Is.EqualTo(firstOriginal.AccountType));

    // Check second account similarly...
}
```

Integration with the Broader System

This service isn’t meant to be used in isolation. In a complete ERP system, you’d typically:

- Add a controller to expose these operations via API endpoints

- Create UI components for file upload/download

- Implement progress reporting for larger imports

- Add transaction support to make imports atomic

- Include validation rules specific to your business domain

Design Patterns and Best Practices

Our implementation exemplifies several important patterns:

- Interface Segregation – The service has a focused, cohesive purpose

- Dependency Injection – We inject the IAuditService rather than creating it

- Early Validation – We validate input before processing

- Detailed Error Reporting – We collect and return specific errors

- Defensive Programming – We handle edge cases and exceptions gracefully

Future Extensions

This pattern can be extended to other parts of your ERP system:

- Customer/Vendor Data – Import/export contact information

- Inventory Items – Handle product catalog updates

- Journal Entries – Process batch financial transactions

- Reports – Export financial data for external analysis

Conclusion

Data import/export capabilities are a critical component of any enterprise system. They bridge the gap between systems, facilitate migration, and support batch operations. By implementing these services with careful error handling and validation, we’ve added significant value to our ERP system.

In the next article, we’ll explore building financial reporting services to generate balance sheets, income statements, and other critical financial reports from our accounting data.

Stay tuned, and happy coding!


by Joche Ojeda | Apr 28, 2024 | A.I

Introduction to Semantic Kernel

Hey there, fellow curious minds! Let’s talk about something exciting today—Semantic Kernel. But don’t worry, we’ll keep it as approachable as your favorite coffee shop chat.

What Exactly Is Semantic Kernel?

Imagine you’re in a magical workshop, surrounded by tools. Well, Semantic Kernel is like that workshop, but for developers. It’s an open-source Software Development Kit (SDK) that lets you create AI agents. These agents aren’t secret spies; they’re little programs that can answer questions, perform tasks, and generally make your digital life easier.

Here’s the lowdown:

- Open-Source: Think of it as a community project. People from all walks of tech life contribute to it, making it better and more powerful.

- Software Development Kit (SDK): Fancy term, right? But all it means is that it’s a set of tools for building software. Imagine it as your AI Lego set.

- Agents: Nope, not James Bond. These are like your personal AI sidekicks. They’re here to assist you, not save the world (although that would be cool).

A Quick History Lesson

About a year ago, Semantic Kernel stepped onto the stage. Since then, it’s been striding confidently, like a seasoned performer. Here are some backstage highlights:

- GitHub Stardom: On March 17th, 2023, it made its grand entrance on GitHub. And guess what? It got more than 17,000 stars! (Around 18.2K at the time of writing.) That’s like being the coolest kid in the coding playground.

- Downloads Galore: The C# kernel (don’t worry, we’ll explain what that is) had over 1,000,000 NuGet downloads. It’s like everyone wanted a piece of the action.

- VS Code Extension: Over 25,000 downloads! Imagine it as a magical wand for your code editor.

And hey, the .NET kernel even threw a party: it reached a 1.0 release! The Python and Java kernels are close behind with their 1.0 Release Candidates. It’s like they’re all graduating from AI university.

Why Should You Care?

Now, here’s the fun part. Why should you, someone with a lifetime of wisdom and curiosity, care about this?

- Microsoft Magic: Semantic Kernel loves hanging out with Microsoft products. It’s like they’re best buddies. So, when you use it, you get to tap into the power of Microsoft’s tech universe. Fancy, right?

- No Code Rewrite Drama: Imagine you have a favorite recipe (let’s say it’s your grandma’s chocolate chip cookies). Now, imagine you want to share it with everyone. Semantic Kernel lets you do that without rewriting the whole recipe. You just add a sprinkle of AI magic!

- LangChain vs. Semantic Kernel: These two are like rival chefs. Both want to cook up AI goodness. But while LangChain (built around Python and JavaScript) comes with a full spice rack of tools, Semantic Kernel is more like a secret ingredient. It’s lightweight and includes not just Python but also C#. Plus, it’s like the Assistant API—no need to fuss over memory and context windows. Just cook and serve!

So, my fabulous friend, whether you’re a seasoned developer or just dipping your toes into the AI pool, Semantic Kernel has your back. It’s like having a friendly AI mentor who whispers, “You got this!” And with its growing community and constant updates, Semantic Kernel is leading the way in AI development.

Remember, you don’t need a PhD in computer science to explore this; it’s all about curiosity, creativity, and a dash of Semantic Kernel magic. ✨

Ready to dive in? Check out the Semantic Kernel GitHub repository for the latest updates.

by Joche Ojeda | Apr 23, 2024 | C#, Uncategorized

Castle.Core: A Favourite Among C# Developers

Castle.Core, a component of the Castle Project, is an open-source project that provides common abstractions, including logging services. It has garnered popularity in the .NET community, boasting over 88 million downloads.

Dynamic Proxies: Acting as Stand-Ins

In the realm of programming, a dynamic proxy is a stand-in or surrogate for another object, controlling access to it. This proxy object can introduce additional behaviours such as logging, caching, or thread-safety before delegating the call to the original object.

The Impact of Dynamic Proxies

Dynamic proxies are instrumental in intercepting method calls and implementing aspect-oriented programming. This aids in managing cross-cutting concerns like logging and transaction management.

Castle DynamicProxy: Generating Proxies at Runtime

Castle DynamicProxy, a feature of Castle.Core, is a library that generates lightweight .NET proxies dynamically at runtime. It enables operations to be performed before and/or after the method execution on the actual object, without altering the class code.

Dynamic Proxies in the Realm of ORM Libraries

Dynamic proxies find significant application in Object-Relational Mapping (ORM) libraries. An ORM lets you work with a database such as SQL Server, Oracle, or MySQL in an object-oriented manner. ORMs use dynamic proxies to generate subclasses of your entity classes at runtime that intercept property access. This is how features such as lazy loading of related data and change tracking are implemented; EF Core’s proxies package, for example, is built on Castle.Core.

Here’s a simple example of how to create a dynamic proxy using Castle.Core:

```csharp
using System;
using Castle.DynamicProxy;

public class SimpleInterceptor : IInterceptor
{
    public void Intercept(IInvocation invocation)
    {
        Console.WriteLine("Before target call");
        try
        {
            invocation.Proceed(); // Calls the decorated instance.
        }
        catch (Exception)
        {
            Console.WriteLine("Target threw an exception!");
            throw;
        }
        finally
        {
            Console.WriteLine("After target call");
        }
    }
}

public class SomeClass
{
    // Must be virtual so the class proxy can override and intercept it.
    public virtual void SomeMethod()
    {
        Console.WriteLine("SomeMethod in SomeClass called");
    }
}

public class Program
{
    public static void Main()
    {
        ProxyGenerator generator = new ProxyGenerator();
        SimpleInterceptor interceptor = new SimpleInterceptor();

        // The generic argument tells DynamicProxy which class to subclass.
        SomeClass proxy = generator.CreateClassProxy<SomeClass>(interceptor);
        proxy.SomeMethod();
    }
}
```
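ORMs apply the same mechanism to lazy loading. The proxy overrides a virtual navigation property and fetches the related data the first time it is read. The sketch below imitates that with a class proxy. `BlogEntity`, `LazyPostsInterceptor`, and the loader delegate are hypothetical stand-ins for what an ORM would generate, and the delegate plays the role of the database query. It assumes the Castle.Core NuGet package.

```csharp
using System;
using System.Collections.Generic;
using Castle.DynamicProxy;

public class BlogEntity
{
    // Virtual so DynamicProxy can intercept the getter.
    public virtual List<string> Posts { get; set; } = new List<string>();
}

// Loads Posts on first access, then serves the cached list.
public class LazyPostsInterceptor : IInterceptor
{
    private readonly Func<List<string>> _loader;
    private List<string>? _loaded;

    public LazyPostsInterceptor(Func<List<string>> loader) => _loader = loader;

    public void Intercept(IInvocation invocation)
    {
        if (invocation.Method.Name == "get_Posts")
        {
            // The first read runs the "query"; later reads reuse its result.
            _loaded ??= _loader();
            invocation.ReturnValue = _loaded;
            return; // no Proceed(): the interceptor supplies the return value
        }

        invocation.Proceed(); // every other member behaves normally
    }
}
```

Usage: `new ProxyGenerator().CreateClassProxy<BlogEntity>(new LazyPostsInterceptor(loadPosts))` returns an object that looks like a plain `BlogEntity`. Reading `Posts` on it calls `loadPosts` exactly once. Doing this for every blog in a loop is precisely how lazy loading turns into the N+1 query pattern.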

Conclusion

Castle.Core and its DynamicProxy feature are invaluable tools for C# programmers, enabling efficient handling of cross-cutting concerns through the creation of dynamic proxies. With over 825.5 million downloads, Castle.Core’s widespread use in the .NET community underscores its utility. Whether you’re a novice or an experienced C# programmer, understanding and utilizing dynamic proxies, particularly in ORM libraries, can significantly boost your programming skills. Dive into Castle.Core and dynamic proxies in your C# projects and take your programming skills to the next level. Happy coding!

by Joche Ojeda | Apr 20, 2024 | C#

Finding Out the Invoking Methods in .NET

In .NET, it’s possible to find out which methods are invoking a specific method. This can be particularly useful when you don’t have the source code of the calling code available, for example when a framework calls one of your overrides. One way to achieve this is by throwing an exception and examining the call stack. Here’s how you can do it:

Throwing an Exception

First, within the method of interest, you need to throw an exception. Here’s an example:

```csharp
public void MethodOfInterest()
{
    throw new Exception("MethodOfInterest was called");
}
```

Catching the Exception

Next, you need to catch the exception in a higher level method that calls the method of interest:

```csharp
public void InvokingMethod()
{
    try
    {
        MethodOfInterest();
    }
    catch (Exception ex)
    {
        Console.WriteLine(ex.StackTrace);
    }
}
```

In the catch block, we print the stack trace of the exception to the console. The stack trace is a string that represents a stack of method calls that leads to the location where the exception was thrown.

Examining the Call Stack

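The call stack is the list of methods that were executing when the exception was thrown. Note that Exception.StackTrace only records the frames between the throw site and the catch block that handled it. Catch the exception high enough up the chain, or let it go unhandled, to see every caller you are interested in. By examining the call stack, you can see which methods were invoking the method of interest.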

Here’s an example of what a call stack might look like:

```text
at Namespace.MethodOfInterest() in C:\Path\To\File.cs:line 10
at Namespace.InvokingMethod() in C:\Path\To\File.cs:line 20
```

In this example, InvokingMethod was the method that invoked MethodOfInterest.
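If you’d rather not throw at all, or the calling code isn’t yours to wrap in a try/catch, `System.Diagnostics.StackTrace` can capture the current call stack directly. This is an alternative technique to the exception approach, not the one the article describes, and the class and method names are illustrative. Frame contents can vary. The JIT may inline small methods or turn a call in tail position into a tail call in Release builds. That is why both methods are marked `NoInlining` and the caller uses the result after the call. File and line information only appears when PDB symbols are available and you request it.

```csharp
using System.Diagnostics;
using System.Runtime.CompilerServices;

public static class CallerInspector
{
    // Returns the name of the method that called MethodOfInterest.
    [MethodImpl(MethodImplOptions.NoInlining)]
    public static string? MethodOfInterest()
    {
        // Skip 1 frame (MethodOfInterest itself); no file/line info needed.
        var trace = new StackTrace(1, false);
        return trace.GetFrame(0)?.GetMethod()?.Name;
    }

    [MethodImpl(MethodImplOptions.NoInlining)]
    public static string InvokingMethod()
    {
        // Using the result afterwards keeps this call out of tail position,
        // so this frame stays on the stack.
        string? caller = MethodOfInterest();
        return "Called by: " + caller;
    }
}
```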

Conclusion

By throwing an exception and examining the call stack, you can find out which methods are invoking a specific method in .NET. This can be a useful debugging tool, especially when you don’t have the source code of the callers available.